Researchers from Meta and UNC-Chapel Hill Introduce Branch-Solve-Merge: A Program That Improves Large Language Models’ Performance on Complex Language Tasks

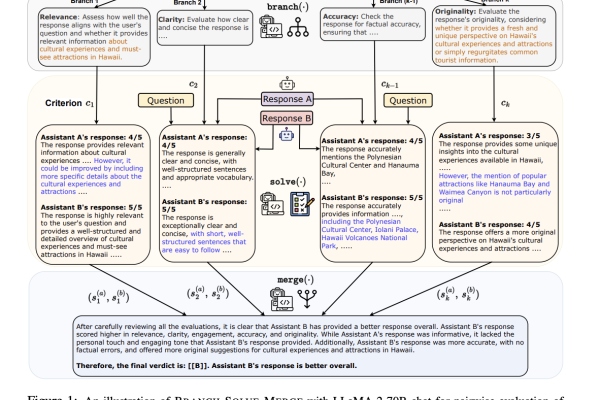

Branch-Solve-Merge (BSM) is a program for improving Large Language Models (LLMs) on complex natural language tasks. It consists of three modules: a branch module that plans how to decompose a task into sub-tasks, a solve module that solves each sub-task independently, and a merge module that combines the sub-task solutions into an overall answer. Applied to LLM response evaluation and constrained text generation with models such as Vicuna, LLaMA-2-chat, and GPT-4, BSM improves human-LLM agreement, reduces biases, and enables LLaMA-2-chat…
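The three modules can be pictured as a simple pipeline. The Python sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the functions `branch`, `solve`, `merge`, and `branch_solve_merge`, the prompt strings, and the stand-in model `toy_llm` are all hypothetical. `toy_llm` is a deterministic stub that replaces a real model such as Vicuna, LLaMA-2-chat, or GPT-4; it loosely mimics a response-evaluation task by scoring three arbitrarily chosen criteria.

```python
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def branch(llm: LLM, task: str) -> List[str]:
    """Ask the model for a plan: one sub-task per line of its reply."""
    plan = llm(f"BRANCH: List independent sub-tasks for: {task}")
    return [line.strip() for line in plan.splitlines() if line.strip()]


def solve(llm: LLM, task: str, sub_task: str) -> str:
    """Solve a single sub-task; the prompt contains no other sub-task's solution."""
    return llm(f"SOLVE: {sub_task}\nContext: {task}")


def merge(llm: LLM, task: str, solutions: List[str]) -> str:
    """Ask the model to fuse the sub-task solutions into one overall answer."""
    joined = "\n".join(solutions)
    return llm(f"MERGE: Combine for task '{task}':\n{joined}")


def branch_solve_merge(llm: LLM, task: str) -> str:
    sub_tasks = branch(llm, task)
    # Sub-tasks are independent; this sketch solves them sequentially,
    # but they could be dispatched in parallel.
    solutions = [solve(llm, task, s) for s in sub_tasks]
    return merge(llm, task, solutions)


def toy_llm(prompt: str) -> str:
    """Hypothetical deterministic stub standing in for a real LLM."""
    if prompt.startswith("BRANCH:"):
        # Pretend the model planned three evaluation criteria.
        return "Evaluate relevance\nEvaluate accuracy\nEvaluate clarity"
    if prompt.startswith("SOLVE:"):
        # Pretend each criterion receives a fixed score; the criterion is the
        # last word on the first line of the prompt.
        scores = {"relevance": 4, "accuracy": 3, "clarity": 5}
        criterion = prompt.splitlines()[0].split()[-1]
        return str(scores.get(criterion, 0))
    if prompt.startswith("MERGE:"):
        # Pretend the model merges by summing the per-criterion scores.
        return str(sum(int(x) for x in prompt.splitlines()[1:]))
    return ""


print(branch_solve_merge(toy_llm, "Evaluate the response to: What is BSM?"))  # prints 12
```

Because each `solve` call sees only its own sub-task plus the original task, the calls do not depend on one another, which is what would allow them to run concurrently. Replacing `toy_llm` with a function that queries a real model would leave the branch, solve, and merge control flow unchanged.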