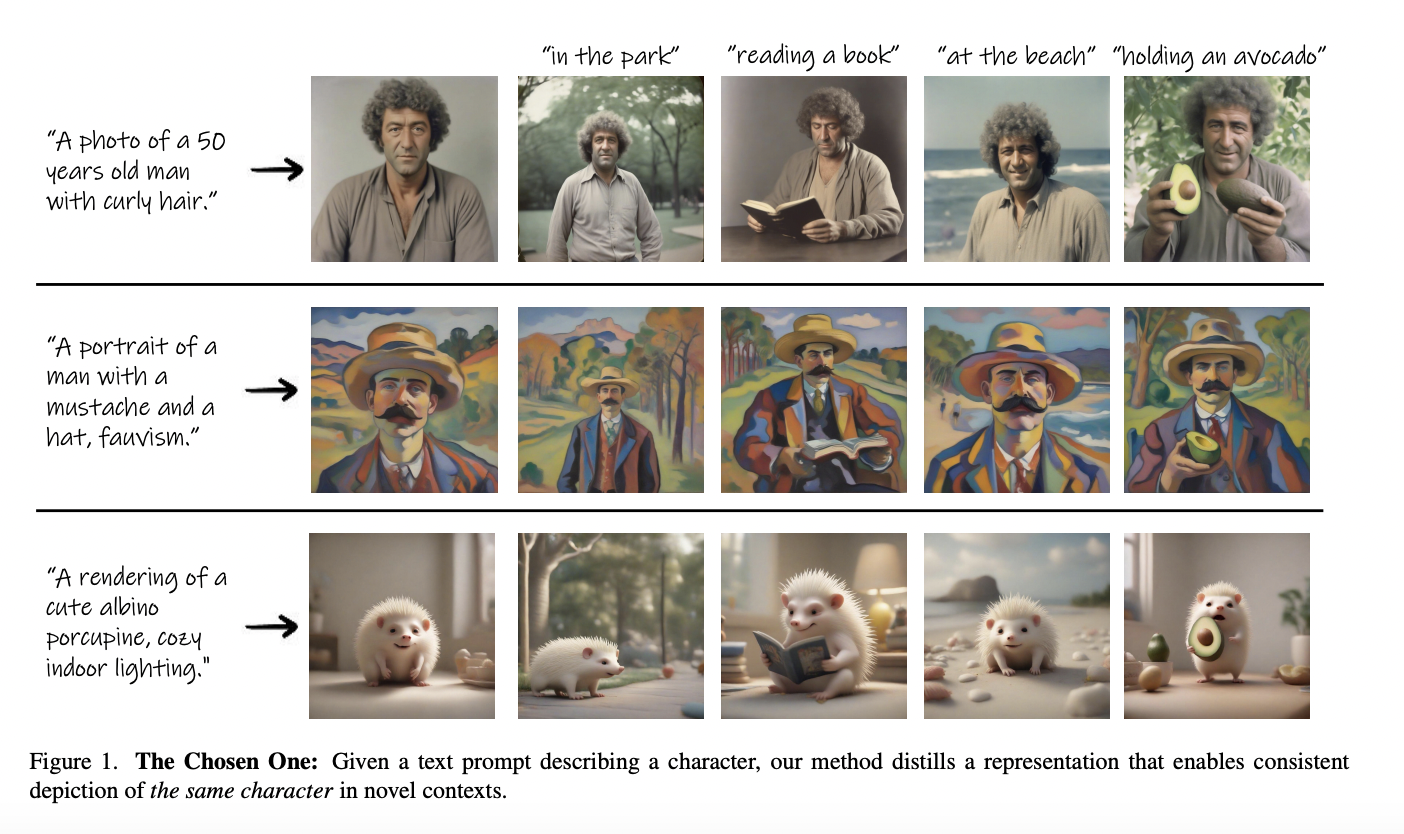

A key component of many creative projects is the capacity of the created visual content to remain consistent across different situations, as seen in Figure 1. These include drawing book illustrations, building brands, making comics, presentations, websites, and more. Establishing brand identification, enabling narrative, improving communication, and fostering emotional connection all depend on this consistency. This study intends to address the problem of text-to-image generative models’ inability to generate images consistently despite their increasingly amazing capabilities.

They specifically discuss the challenge of consistent character generation, in which they derive a representation that allows them to generate consistent portrayals of the same character in new circumstances, given an input text prompt specifying a nature. Even though they discuss characters frequently in this paper, their work is relevant to general visual topics. Think of an illustrator creating a Plasticine cat figure, for instance. Enabling a prompt that describes the character to be used with a cutting-edge text-to-image model yields a range of inconsistent results, as shown in Figure 2. On the other hand, our study demonstrates how to condense a dependable depiction of the cat (2nd row), which may subsequently be applied to portray the same character in various circumstances.

An array of ad hoc solutions has already been born out of the necessity for consistent character creation and the broad appeal of text-to-image generative models. These include employing visual variants and manually sorting them according to resemblance or utilizing celebrity names as prompts to create consistent individuals. Unlike these haphazard, labor-intensive methods, they provide a completely automated, systematic strategy for reliable character creation. The scholarly works that deal with personalization and narrative development are the ones that are most directly tied to their location. A few of these techniques take many user-supplied photos and create a representation of a specific character. Others cannot depend on the textual inversion of an already-existing human face portrayal or generalize to new characters outside the training set.

In this study, researchers from Google Research, The Hebrew University of Jerusalem, Tel Aviv University, and Reichman University contend that producing a consistent character is often more important than visually replicating a certain appearance in many applications. As a result, they tackle a novel context in which their goal is to automatically extract a coherent depiction of a persona that need only adhere to one natural language description. Their approach allows for creating a novel, consistent character that does not necessarily need to mirror any current visual portrayal because it does not require any photos of the target character as input. Their fully automated approach to the consistent character generation challenge is predicated on the idea that groups of pictures with common traits would be present in an adequately large set of created images for a given prompt.

It is possible to derive a representation from such a cluster that encapsulates the “common ground” amongst its pictures. They can improve the consistency of the output graphics while adhering to the original input prompt by repeating the procedure with this representation. First, they use a pre-trained feature extractor to create a gallery of images based on the given language prompt, and then they embed those images in an Euclidean space. They then group these embeddings into clusters and select the most unified collection as input for a customization technique that looks for a consistent identity. The next gallery of photos, which still depicts the input prompt but should show better consistency, is then created using the generated model.

Iteratively repeating this technique continues till convergence. They perform user research and objectively and qualitatively evaluate their strategy against many baselines. Lastly, they provide several methods of application. To summarize, their contributions consist of three main parts:

- They describe the job of consistent character development.

- They provide a unique approach to this work.

- They conduct user research and quantitative and qualitative evaluation of their technique to show its efficacy.

Check out the Paper and Project Page. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|

AI|AI|AI|AI|AI|AI|AI||AI|AI|AI|AI|AI|AI|AI||AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI

![]()

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence from the Indian Institute of Technology(IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing and is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.