Compared to their supervised counterparts, which may be trained with millions of labeled examples, Large Language Models (LLMs) like GPT-3 and PaLM have shown impressive performance on various natural language tasks, even in the zero-shot setting. However, using LLMs to solve the basic text ranking problem has had mixed results. Existing approaches often perform noticeably worse than trained baseline rankers. The lone exception is a recent strategy that relies on the massive, black-box, commercial GPT-4 system.

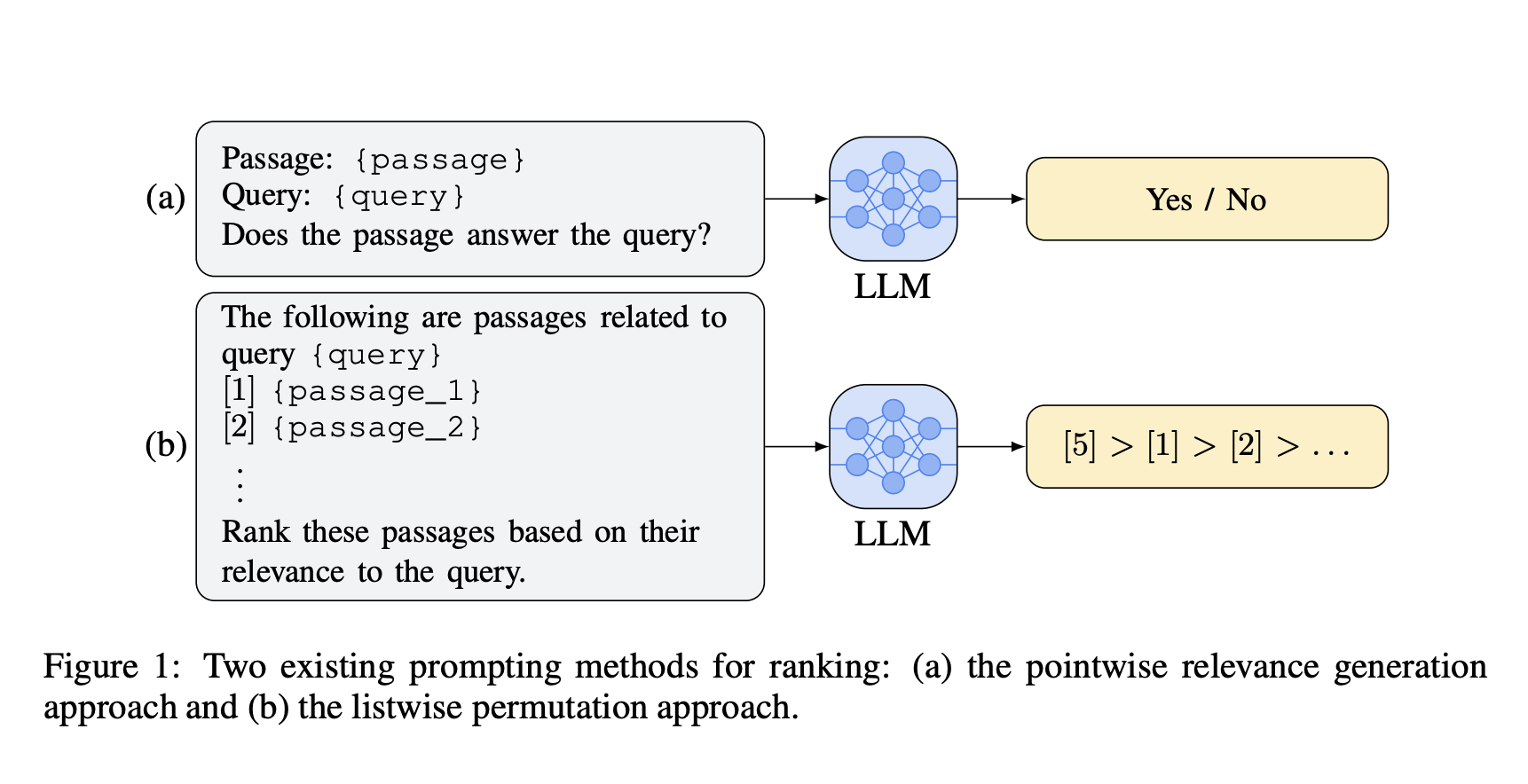

The researchers behind a new study argue that relying on such black-box systems is not ideal for academic researchers because of significant cost constraints and access limitations. They do, however, acknowledge the value of such explorations in demonstrating the capability of LLMs for ranking tasks. They also note that ranking metrics can drop by over 50% when the input document order changes. In this study, they first explain why LLMs struggle with ranking problems under the pointwise and listwise formulations used by current approaches. Pointwise techniques require LLMs to produce calibrated prediction probabilities before sorting, which is known to be exceedingly challenging, and generation-only LLM APIs (like GPT-4) do not support this at all.

For listwise techniques, LLMs frequently produce inconsistent or irrelevant outputs, even with instructions that seem extremely obvious to humans. Empirically, the researchers find that listwise ranking prompts from prior work produce entirely meaningless results on medium-sized LLMs. These findings suggest that current, widely used LLMs do not fully comprehend ranking tasks, possibly because their pre-training and fine-tuning techniques lack ranking awareness. To considerably reduce task complexity for LLMs and address the calibration issue, researchers from Google Research propose the pairwise ranking prompting (PRP) paradigm, which uses the query and a pair of documents as the prompt for ranking tasks. PRP is built on a straightforward prompt design and supports both generation and scoring LLM APIs by default.
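The pairwise idea described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the prompt wording, the function names, and `toy_llm` (a word-overlap stand-in for a real generation API) are all assumptions. The sketch also assumes each pair is queried in both orders and that disagreements are scored as ties, which is one way to reduce sensitivity to input order.

```python
from typing import Callable, List

# Hypothetical prompt wording, for illustration only.
PROMPT = (
    'Given a query "{query}", which of the following two passages is '
    "more relevant to the query?\n\n"
    "Passage A: {a}\n\nPassage B: {b}\n\n"
    "Output Passage A or Passage B:"
)

def build_prompt(query: str, doc_a: str, doc_b: str) -> str:
    """Fill the pairwise template with one query and two documents."""
    return PROMPT.format(query=query, a=doc_a, b=doc_b)

def prefers_first(llm: Callable[[str], str], query: str, d1: str, d2: str) -> float:
    """Return 1.0 if d1 wins, 0.0 if d2 wins, 0.5 if the two orderings disagree."""
    first = llm(build_prompt(query, d1, d2)).strip()   # d1 shown as Passage A
    second = llm(build_prompt(query, d2, d1)).strip()  # d1 shown as Passage B
    d1_wins_first = first.endswith("A")
    d1_wins_second = second.endswith("B")
    if d1_wins_first and d1_wins_second:
        return 1.0
    if not d1_wins_first and not d1_wins_second:
        return 0.0
    return 0.5  # the answer flipped with the order, so treat it as a tie

def rank_all_pairs(llm: Callable[[str], str], query: str, docs: List[str]) -> List[str]:
    """Score every document by its pairwise wins and sort by that score."""
    scores = [0.0] * len(docs)
    for i in range(len(docs)):
        for j in range(i + 1, len(docs)):
            s = prefers_first(llm, query, docs[i], docs[j])
            scores[i] += s
            scores[j] += 1.0 - s
    order = sorted(range(len(docs)), key=lambda i: -scores[i])
    return [docs[i] for i in order]

def toy_llm(prompt: str) -> str:
    """Stand-in for a real model: prefers the passage sharing more query words.

    On ties it always answers 'Passage A', which mimics a position bias.
    """
    query = prompt.split('"')[1]
    a = prompt.split("Passage A: ")[1].split("\n\nPassage B: ")[0]
    b = prompt.split("Passage B: ")[1].split("\n\nOutput")[0]
    q = set(query.lower().split())
    overlap = lambda text: len(q & set(text.lower().split()))
    return "Passage A" if overlap(a) >= overlap(b) else "Passage B"

docs = [
    "cats sleep a lot",
    "how to rank documents with language models",
    "ranking documents",
]
print(rank_all_pairs(toy_llm, "rank documents with models", docs))
```

Comparing every pair costs a number of LLM calls that grows quadratically with the number of documents, which is why cheaper variants matter in practice.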

They discuss several PRP variants to address efficiency concerns. The PRP results are the first in the literature to achieve state-of-the-art ranking performance on traditional benchmark datasets using moderate-sized, open-source LLMs. On TREC-DL2020, PRP based on the 20B-parameter FLAN-UL2 model exceeds the prior best method in the literature, which is based on the black-box commercial GPT-4 with (an estimated) 50X model size, by more than 5% at NDCG@1. On TREC-DL2019, PRP trails only the GPT-4 solution, and only on the NDCG@5 and NDCG@10 metrics, while beating other existing solutions, such as InstructGPT with 175B parameters, by over 10% on nearly all ranking measures. Additionally, they present competitive results using FLAN-T5 models with 3B and 13B parameters to illustrate the effectiveness and applicability of PRP.
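For readers unfamiliar with the NDCG@k figures quoted above, here is a minimal sketch of how such a metric can be computed. It assumes the common exponential-gain form of DCG and normalizes against the ideal ordering of the same list. Official TREC tooling may use a different gain function and normalizes against all judged documents for the query, so treat the function names and details as illustrative.

```python
import math
from typing import Sequence

def dcg_at_k(rels: Sequence[float], k: int) -> float:
    """Discounted cumulative gain over the top-k graded relevance labels."""
    # Position i (0-based) is discounted by log2(i + 2), so rank 1 has discount 1.
    return sum((2 ** r - 1) / math.log2(i + 2) for i, r in enumerate(rels[:k]))

def ndcg_at_k(rels: Sequence[float], k: int) -> float:
    """DCG of the given ranking divided by the DCG of the ideal ranking."""
    ideal = dcg_at_k(sorted(rels, reverse=True), k)
    return dcg_at_k(rels, k) / ideal if ideal > 0 else 0.0

# Relevance labels of documents in the order a ranker returned them.
print(ndcg_at_k([3, 2, 1], k=3))  # already ideally ordered
print(ndcg_at_k([2, 3], k=1))     # the best document is not at rank 1
```

NDCG@1 only rewards placing the single most relevant document first, while NDCG@5 and NDCG@10 also credit the quality of the next few positions.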

They also review PRP’s additional advantages, such as its support for both scoring and generation LLM APIs and its insensitivity to input order. In summary, this work makes three contributions:

• For the first time, they demonstrate that pairwise ranking prompting works well for zero-shot ranking with LLMs. Their findings are based on moderate-sized, open-source LLMs, in contrast to existing systems that rely on black-box, commercial, and considerably larger models.

• PRP can produce state-of-the-art ranking performance using straightforward prompting and scoring mechanisms, which should make future studies in this area more accessible.

• They examine several efficiency improvements and demonstrate good empirical performance while achieving linear complexity.
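One way a pairwise ranker can reach linear complexity is a sliding-window pass, sketched below. The article does not spell out the variants, so this is an assumption for illustration: `sliding_top_k` and the `prefer` callback are hypothetical names, and `prefer(a, b)` stands in for an LLM comparison that returns `True` when `a` should rank above `b`. Each pass walks adjacent pairs from the bottom of an initial ranking to the top, so `k` passes surface the top `k` documents with roughly `k * N` comparisons.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")

def sliding_top_k(docs: List[T], prefer: Callable[[T, T], bool], k: int) -> List[T]:
    """Run k bottom-to-top passes of adjacent comparisons over an initial ranking.

    After pass p, positions 0..p hold the best p + 1 items
    (assuming prefer is a consistent comparison).
    """
    ranked = list(docs)  # work on a copy, leave the input untouched
    n = len(ranked)
    for p in range(min(k, n)):
        # Compare neighbours from the end of the list up to position p.
        for i in range(n - 1, p, -1):
            if prefer(ranked[i], ranked[i - 1]):
                ranked[i], ranked[i - 1] = ranked[i - 1], ranked[i]
    return ranked

# Toy usage: numbers stand in for documents, larger means more relevant.
initial = [0.2, 0.9, 0.1, 0.7, 0.4]
result = sliding_top_k(initial, lambda a, b: a > b, k=2)
print(result[:2])
```

Only the top `k` positions are guaranteed to be correct after `k` passes. That matches the usual evaluation setting, where metrics such as NDCG@10 look at the head of the list.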

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.