Hair is one of the most remarkable features of the human body, and its dynamic qualities bring scenes to life. Studies have shown that dynamic elements are more appealing and engaging than static images. Vast numbers of portrait photos are shared every day on social media platforms such as TikTok and Instagram, as people try to make their pictures both appealing and artistically captivating. This drive fuels research into animating human hair within still images, with the aim of offering a vivid, aesthetically pleasing viewing experience.

Recent advances in the field have introduced methods that add motion to still images by animating fluid substances such as water, smoke, and fire within the frame. Yet these approaches have largely overlooked the intricate nature of human hair in real-life photographs. This article focuses on the artistic transformation of human hair in portrait photography, which involves converting the picture into a cinemagraph.

A cinemagraph is a short video format favored by professional photographers, advertisers, and artists. It is used in various digital media, including digital advertisements, social media posts, and landing pages. The appeal of cinemagraphs lies in their ability to merge the strengths of still images and videos: certain areas feature subtle, repetitive motions in a short loop, while the rest of the frame remains static. This contrast between stationary and moving elements captures the viewer’s attention.
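The static-versus-moving contrast described above can be illustrated with a toy mask-based composite. This is a minimal sketch and not the method of the paper discussed here: the function name `make_cinemagraph`, its parameters, and the use of a simple sinusoidal horizontal shift as the "motion" are all assumptions chosen for illustration.

```python
import numpy as np

def make_cinemagraph(still, mask, num_frames=8, amplitude=2):
    """Toy cinemagraph: pixels inside `mask` sway, everything else stays frozen.

    still: (H, W) or (H, W, C) image array.
    mask:  (H, W) boolean array, True where motion is allowed.
    All names and the shift-based motion are illustrative assumptions.
    """
    # Broadcast the mask over the channel axis for color images.
    m = mask if still.ndim == 2 else mask[..., None]
    frames = []
    for t in range(num_frames):
        # One full sine period over the loop, so playback repeats seamlessly.
        shift = int(round(amplitude * np.sin(2 * np.pi * t / num_frames)))
        # np.roll wraps around the image border; acceptable for a toy example.
        moved = np.roll(still, shift, axis=1)
        # Moving content inside the mask, original pixels outside it.
        frames.append(np.where(m, moved, still))
    return frames
```

Because the offset follows one full sine period, the first frame equals the still image and the sequence can be played on repeat, which is the looping property that cinemagraphs rely on.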

Through the transformation of a portrait photo into a cinemagraph, complete with subtle hair motions, the idea is to enhance the photo’s allure without detracting from the static content, creating a more compelling and engaging visual experience.

Existing techniques and commercial software can generate high-fidelity cinemagraphs from input videos by selectively freezing certain video regions. Unfortunately, these tools are not suitable for processing still images. Meanwhile, interest in still-image animation has been growing, but most of these approaches focus on animating fluid elements such as clouds, water, and smoke. Hair, which is composed of fibrous materials, behaves differently from fluids and presents a distinct challenge. As a result, the animation of human hair in real portrait photos remains relatively unexplored.

Animating hair in a static portrait photo is challenging because of the complexity of hair structures and dynamics. Unlike the smooth surfaces of the human body or face, hair comprises hundreds of thousands of individual components, resulting in complex and non-uniform structures. This leads to intricate motion patterns within the hair, including interactions with the head. Specialized techniques for modeling hair exist, such as those using dense camera arrays and high-speed cameras, but they are often costly and time-consuming, which limits their practicality for real-world hair animation.

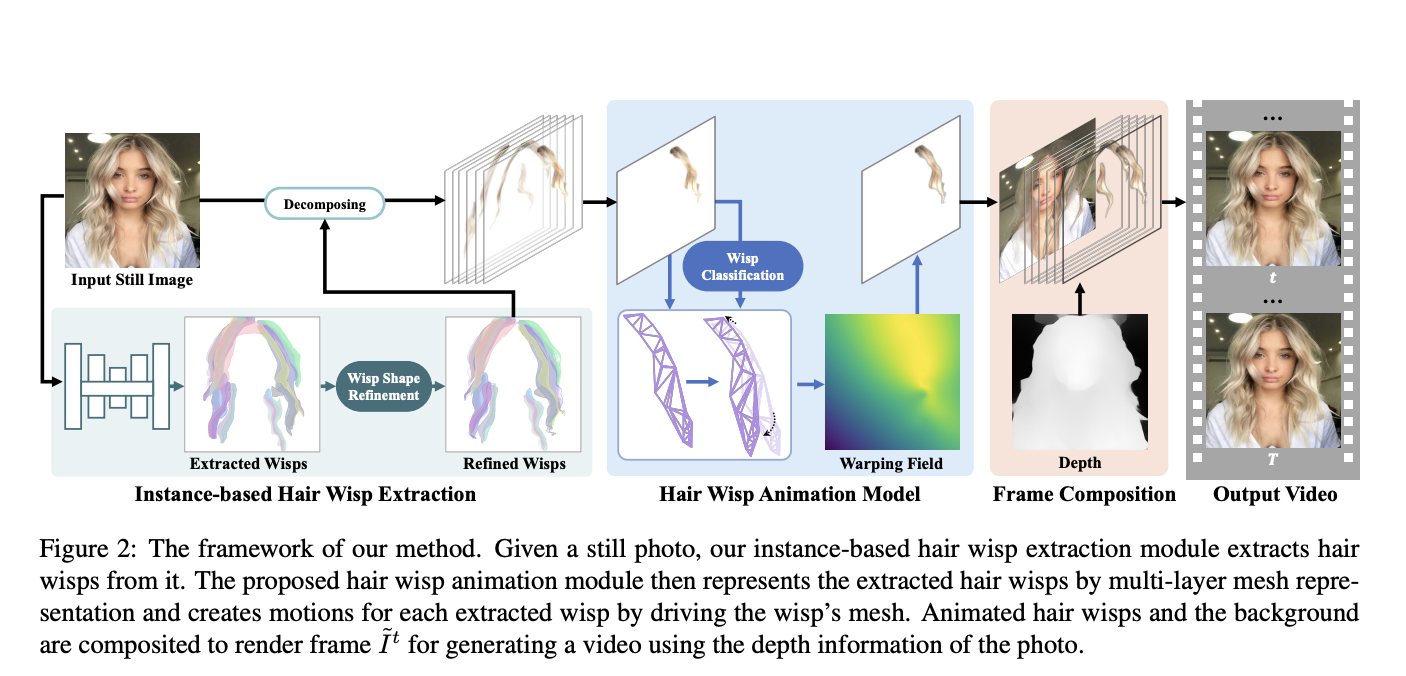

The paper presented in this article introduces a novel AI method for automatically animating hair within a static portrait photo, without requiring user intervention or complex hardware setups. The key insight is that the human visual system is less sensitive to individual hair strands and their motions in real portrait videos than to synthetic strands on a digitized human in a virtual environment. The proposed solution is therefore to animate “hair wisps” instead of individual strands, which is enough to create a visually pleasing viewing experience. To achieve this, the paper introduces a hair wisp animation module, enabling an efficient and automated solution. An overview of this framework is illustrated below.

The key challenge in this context is how to extract these hair wisps. Related work, such as hair modeling, has focused on hair segmentation, but these approaches primarily target the extraction of the entire hair region, which differs from the objective here. To extract meaningful hair wisps, the researchers frame hair wisp extraction as an instance segmentation problem, where each individual segment within a still image corresponds to one hair wisp. This problem definition lets them use existing instance segmentation networks, which both simplifies the extraction problem and makes advanced networks available for it. Additionally, the paper presents a hair wisp dataset containing real portrait photos to train the networks, along with a semi-annotation scheme to produce ground-truth annotations for the identified hair wisps. Some sample results from the paper, compared with state-of-the-art techniques, are reported in the figure below.
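To make the difference between whole-region hair segmentation and the instance-segmentation framing concrete, here is a small sketch. It assumes, purely for illustration, that a segmentation network's output has already been converted into an integer label map in which 0 means "not hair" and each positive id marks one wisp; the function names `split_wisps` and `hair_region` are hypothetical and do not come from the paper.

```python
import numpy as np

def split_wisps(instance_map):
    """Return one boolean mask per wisp id.

    instance_map: (H, W) integer array; 0 = not hair, k > 0 = wisp k.
    This label-map format is an assumption made for the example.
    """
    wisp_ids = [int(i) for i in np.unique(instance_map) if i != 0]
    return {i: instance_map == i for i in wisp_ids}

def hair_region(instance_map):
    """Single mask for all hair, as conventional hair segmentation would give."""
    return instance_map > 0

# Tiny 3x3 example with three wisps.
labels = np.array([[0, 1, 1],
                   [2, 2, 0],
                   [0, 3, 3]])
wisps = split_wisps(labels)      # three separate masks, keyed 1, 2, 3
whole = hair_region(labels)      # one mask covering every hair pixel
```

With separate masks, each wisp could in principle be given its own motion, whereas the single hair-region mask only says where hair is and gives no unit to animate.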

This was the summary of a novel AI framework designed to transform still portraits into cinemagraphs by animating hair wisps with pleasing motions without noticeable artifacts. If you are interested and want to learn more about it, please feel free to refer to the links cited below.

Check out the Paper and Project Page. All credit for this research goes to the researchers on this project.


Daniele Lorenzi received his M.Sc. in ICT for Internet and Multimedia Engineering in 2021 from the University of Padua, Italy. He is a Ph.D. candidate at the Institute of Information Technology (ITEC) at the Alpen-Adria-Universität (AAU) Klagenfurt. He is currently working in the Christian Doppler Laboratory ATHENA and his research interests include adaptive video streaming, immersive media, machine learning, and QoS/QoE evaluation.