Apple Researchers Introduce a Groundbreaking Artificial Intelligence Approach to Dense 3D Reconstruction from Dynamically-Posed RGB Images

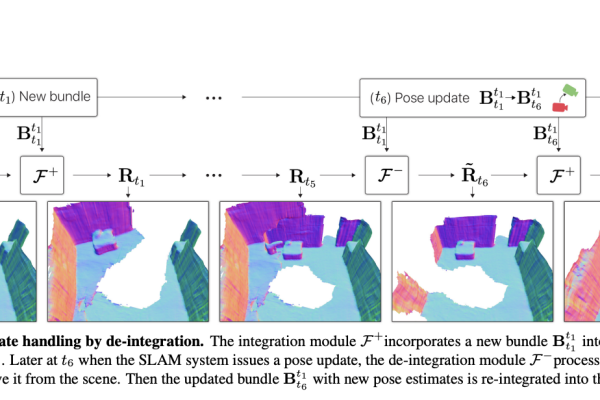

With learnt priors, RGB-only reconstruction from a monocular camera has made significant progress on two long-standing problems: low-texture areas and the inherent ambiguity of image-based reconstruction. Practical solutions that run in real time have drawn considerable attention because they are essential for interactive applications on mobile devices. Nevertheless, a crucial prerequisite yet to be considered…