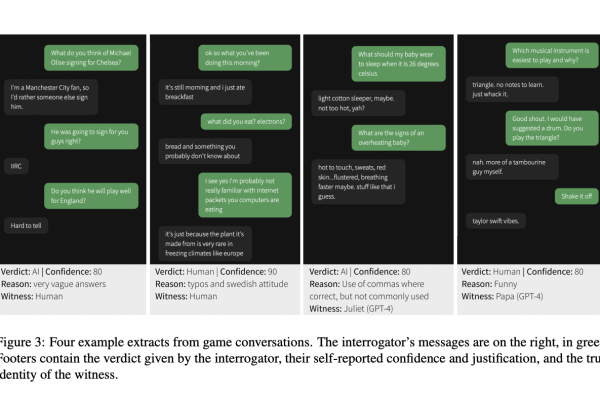

UCSD Researchers Evaluate GPT-4’s Performance in a Turing Test: Unveiling the Dynamics of Human-like Deception and Communication Strategies

A group of researchers from UCSD tested GPT-4 in a public, online Turing test. The best-performing GPT-4 prompt passed in 41% of games. That beat the baselines set by ELIZA (27%) and GPT-3.5 (14%), but it still fell short of chance and of the baseline set by human participants (63%), so GPT-4 is not quite there yet.