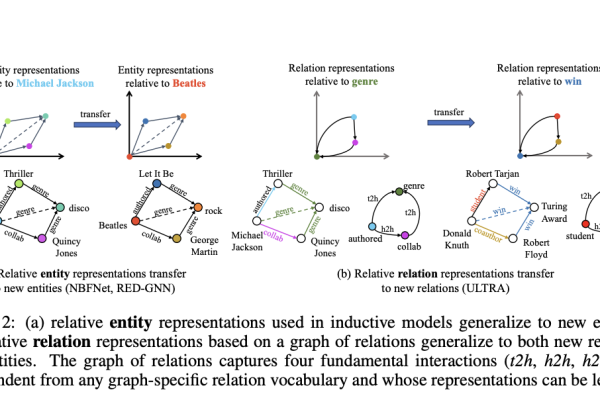

Meet ULTRA: A Pre-Trained Foundation Model for Knowledge Graph Reasoning that Works on Any Graph and Outperforms Supervised SOTA Models on 50+ Graphs

ULTRA is a model designed to learn universal and transferable graph representations for knowledge graphs (KGs). ULTRA builds relation representations by conditioning them on the interactions between relations, which lets it generalise to any KG, even one with entirely different entity and relation vocabularies. A pre-trained ULTRA model exhibits impressive zero-shot inductive inference on new graphs in link prediction experiments,…
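To make "conditioning on interactions" more concrete: the ULTRA paper captures how relations co-occur as a graph whose nodes are relations. Two relations are linked when they share an entity, and the edge type (head-to-head, head-to-tail, tail-to-head or tail-to-tail) records which role that entity plays in each. The Python below is a minimal sketch of only this graph-building step, under stated assumptions. The function name `relation_graph`, the labels such as `"h2t"`, the toy triples, and the choice to skip a relation's interactions with itself are illustrative and are not taken from ULTRA's code. ULTRA then runs a GNN over such a graph to produce relation representations, which is not shown here.

```python
from collections import defaultdict
from itertools import product


def relation_graph(triples):
    """Build a graph whose nodes are the relations of a KG (illustrative sketch).

    triples: iterable of (head, relation, tail).
    Returns a set of (r1, kind, r2) edges, where kind is one of
    "h2h", "h2t", "t2h", "t2t": the shared entity plays the first
    role in r1 and the second role in r2 (a hypothetical labelling).
    """
    as_head = defaultdict(set)  # entity -> relations it is the head of
    as_tail = defaultdict(set)  # entity -> relations it is the tail of
    for h, r, t in triples:
        as_head[h].add(r)
        as_tail[t].add(r)

    edges = set()
    for entity in set(as_head) | set(as_tail):
        roles = {"h": as_head[entity], "t": as_tail[entity]}
        # Every (role1, role2) pair yields one of the four interaction kinds.
        for (role1, rels1), (role2, rels2) in product(roles.items(), repeat=2):
            for r1 in rels1:
                for r2 in rels2:
                    if r1 != r2:  # simplification: skip self-interactions
                        edges.add((r1, f"{role1}2{role2}", r2))
    return edges


triples = [
    ("alice", "works_at", "acme"),
    ("bob", "works_at", "acme"),
    ("acme", "located_in", "paris"),
]
for edge in sorted(relation_graph(triples)):
    print(edge)
```

Running it prints two edges, `('located_in', 'h2t', 'works_at')` and `('works_at', 't2h', 'located_in')`: the two relations interact through `acme`, which is the tail of `works_at` and the head of `located_in`. Because none of these edges mention a specific entity, the same construction applies to a KG with a completely different vocabulary, which is the property the paragraph above describes.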