Enhancing Reinforcement Learning Explainability with Temporal Reward Decomposition

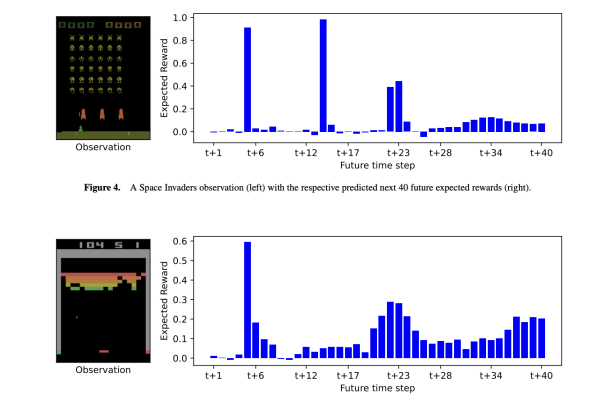

Future reward estimation is central to reinforcement learning (RL): it predicts the cumulative reward an agent expects to receive, typically through a Q-value or state-value function. However, these scalar outputs carry no information about when the agent expects rewards or which specific rewards it anticipates. This limitation matters in applications where human collaboration and explainability are essential. For instance, in…