Google DeepMind’s researchers have developed SODA, an AI model that addresses the problem of encoding images into efficient latent representations. With SODA, seamless transitions between images and semantic attributes are made possible, allowing for interpolation and morphing across various image categories.

Diffusion models have revolutionized visual synthesis, excelling in diverse tasks like image, video, audio, and text synthesis, planning, and drug discovery. While prior studies focused on their generative capabilities, this study explores the underexplored realm of diffusion models’ representational capacity. The study comprehensively evaluates diffusion-based representation learning across various datasets and tasks, shedding light on their potential derived solely from images.

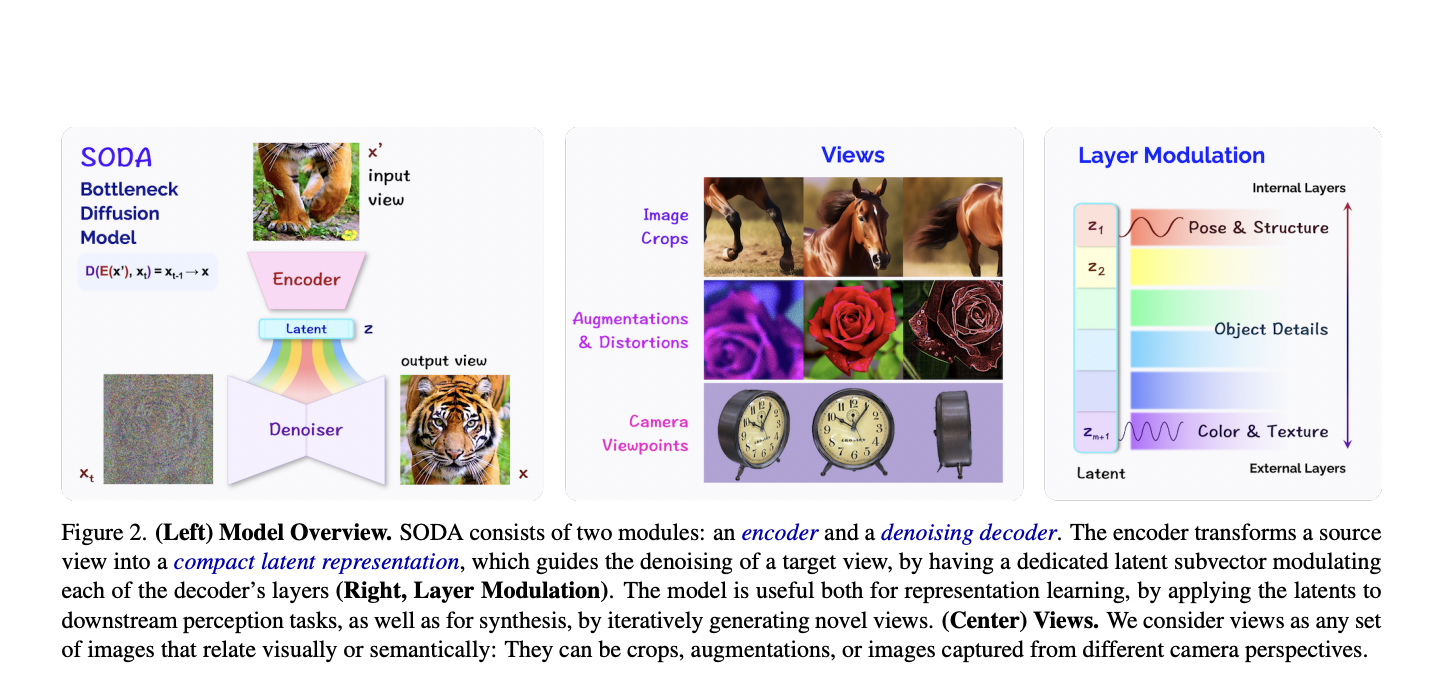

The proposed model emphasizes the importance of synthesis in learning and highlights the significant representational capacity of diffusion models. SODA is a self-supervised model incorporating an information bottleneck to achieve disentangled and informative representations. SODA showcases its strengths in classification, reconstruction, and synthesis tasks, including high-performance few-shot novel view generation and semantic trait controllability.

A SODA model utilizes an information bottleneck to create disentangled representations through self-supervised diffusion. This approach uses pre-training based on distribution to improve representation learning, resulting in strong performance in classification and novel view synthesis tasks. SODA’s capabilities have been tested by extensively evaluating diverse datasets, including robust performance on ImageNet.

SODA has been proven to excel in representation learning with impressive results in classification, disentanglement, reconstruction, and novel view synthesis. It has been found to improve disentanglement metrics significantly compared to variational methods. In ImageNet linear-probe classification, SODA outperforms other discriminative models and demonstrates robustness against data augmentations. Its versatility is evident in generating novel views and seamless attribute transitions. Through empirical study, SODA has been established as an effective, robust, and versatile approach for representation learning, supported by detailed analyses, evaluation metrics, and comparisons with other models.

In conclusion, SODA demonstrates remarkable proficiency in representation learning, producing robust semantic representations for various tasks, including classification, reconstruction, editing, and synthesis. It employs an information bottleneck to focus on essential image qualities and outperforms variational methods in disentanglement metrics. SODA’s versatility is evident in its ability to generate novel views, transition semantic attributes, and handle richer conditional information such as camera perspective.

As future work, it would be valuable to delve deeper into the field of SODA by exploring dynamic compositional scenes of 3D datasets and bridging the gap between novel view synthesis and self-supervised learning. Further investigation is needed regarding model structure, implementation, and evaluation details, such as preliminaries of diffusion models, hyperparameters, training techniques, and sampling methods. Conducting ablation and variation studies is recommended to understand design choices better and explore alternative mechanisms, cross-attention, and layer-wise modulation. Doing so can enhance performance in various tasks like 3D novel view synthesis, image editing, reconstruction, and representation learning.

Check out the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|

DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|

DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV|DEV

Hello, My name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.