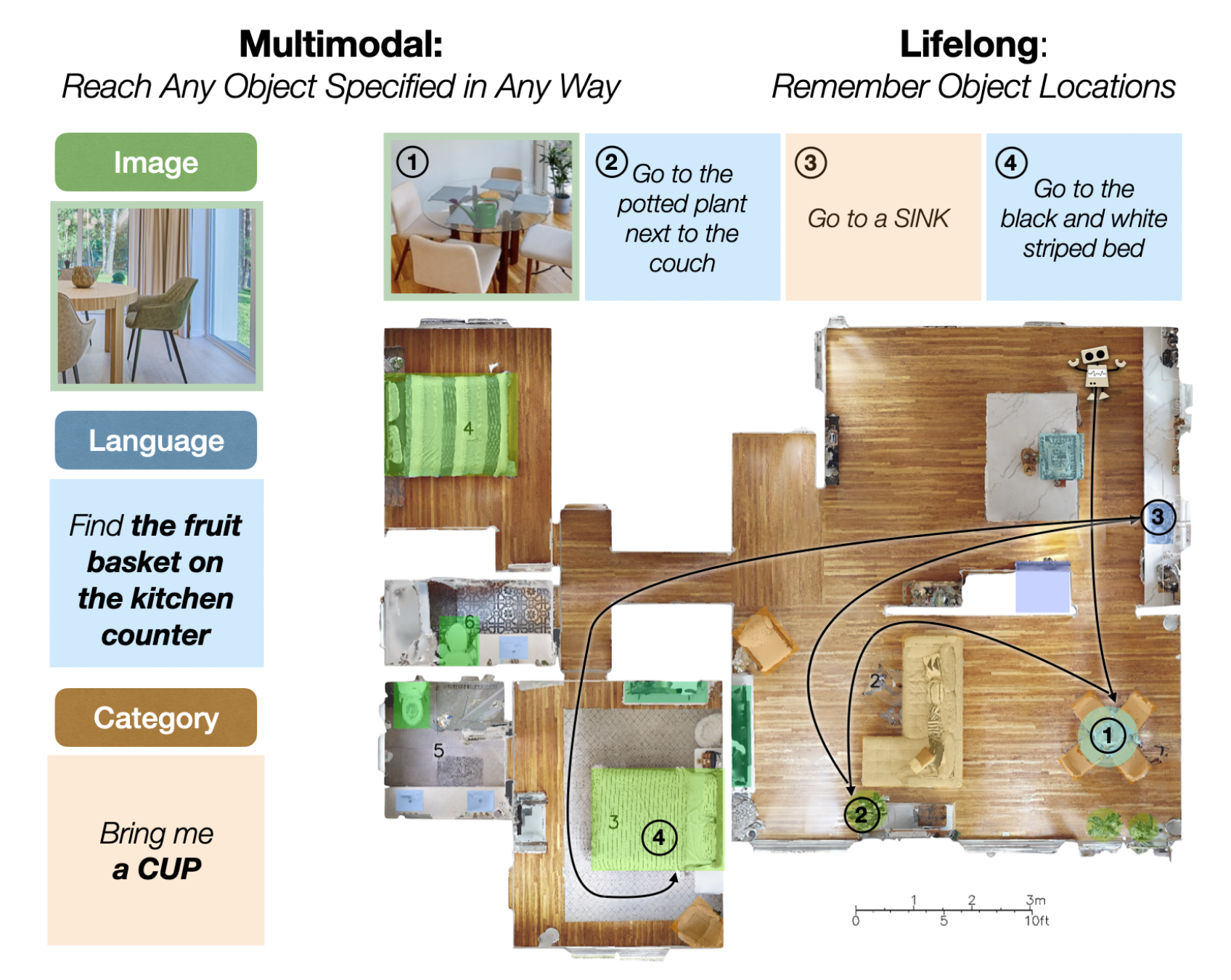

A team of researchers from the University of Illinois Urbana-Champaign, Carnegie Mellon University, Georgia Institute of Technology, University of California Berkeley, Meta AI Research, and Mistral AI has developed a universal navigation system called GO To Any Thing (GOAT). This system is designed for extended autonomous operation in home and warehouse environments. GOAT is a multimodal system that can interpret goals from category labels, target images, and language descriptions. It is a lifelong system that benefits from past experiences. GOAT is platform-agnostic and adaptable to various robot embodiments.

GOAT uses depth estimates and semantic segmentation to build a 3D semantic voxel map, which supports accurate object instance detection and memory storage. This semantic map is the system’s spatial representation: it tracks object instances, obstacles, and explored areas.
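To make the mapping step concrete, here is a minimal sketch of how per-pixel depth and semantic labels could be binned into a voxel map. It is not GOAT’s implementation: it assumes a pinhole camera, and the function name `backproject_to_voxels`, the intrinsics `fx`, `fy`, `cx`, `cy`, and the `voxel_size` parameter are all hypothetical names chosen for illustration.

```python
import math


def backproject_to_voxels(depth, labels, fx, fy, cx, cy, voxel_size=0.05):
    """Bin labelled depth pixels into a sparse 3D voxel map.

    depth and labels are row-major 2D lists of the same shape.
    Returns a dict mapping (i, j, k) voxel indices to a semantic label.
    Assumption: pinhole camera at the origin looking along +z.
    """
    voxels = {}
    for v, (depth_row, label_row) in enumerate(zip(depth, labels)):
        for u, (d, label) in enumerate(zip(depth_row, label_row)):
            if d <= 0:  # skip invalid depth readings
                continue
            # Pinhole back-projection from pixel (u, v) to camera coordinates.
            x = (u - cx) * d / fx
            y = (v - cy) * d / fy
            z = d
            key = (
                math.floor(x / voxel_size),
                math.floor(y / voxel_size),
                math.floor(z / voxel_size),
            )
            # The last label written to a voxel wins in this simplified sketch.
            voxels[key] = label
    return voxels
```

A real system would also transform points by the robot’s pose and accumulate observations over time; this sketch only shows the per-frame binning.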

GOAT is a mobile robot system inspired by insights from animal and human navigation. It operates autonomously in diverse environments and executes tasks based on human input, with goals specified as category labels, target images, or language descriptions. The study evaluates GOAT’s performance in reaching unseen multimodal object instances and attributes part of its advantage to SuperGlue-based image keypoint matching, where previous methods relied on CLIP feature matching.

GOAT combines a modular design with an instance-aware semantic memory to support multimodal navigation from images and language descriptions. The system, platform-agnostic and capable of lifelong learning, demonstrates its capabilities through large-scale real-world experiments in homes. Its performance is evaluated without pre-computed maps, using metrics such as Success weighted by Path Length (SPL). The agent employs global and local policies, using the Fast Marching Method for path planning and point navigation controllers to reach waypoints along the path.
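For readers unfamiliar with the metric, Success weighted by Path Length is commonly defined as the mean over episodes of S_i * l_i / max(p_i, l_i), where S_i is 1 on success and 0 otherwise, l_i is the shortest path length to the goal, and p_i is the length of the path the agent actually took. The sketch below computes it in Python; the function name `spl` and the tuple layout are assumptions made for illustration, not code from the GOAT project.

```python
def spl(episodes):
    """Compute Success weighted by Path Length.

    episodes is a list of (success, shortest_path_length, agent_path_length)
    tuples, with lengths in the same unit (e.g. metres).
    """
    if not episodes:
        raise ValueError("at least one episode is required")
    total = 0.0
    for success, shortest, taken in episodes:
        if success:
            # A successful episode scores 1.0 only if the agent's path
            # is as short as the shortest path; longer paths score less.
            total += shortest / max(taken, shortest)
        # Failed episodes contribute 0 regardless of path length.
    return total / len(episodes)
```

For example, an agent that succeeds in every episode but always travels twice the shortest distance gets an SPL of 0.5, so the metric rewards efficiency as well as success.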

In experimental trials across nine homes, GOAT achieved an 83% success rate, surpassing previous methods by 32%. Its success rate improved from 60% on the first goal to 90% after exploration, showing that it benefits from experience in an environment. GOAT also handled downstream tasks such as pick and place and social navigation. Qualitative experiments demonstrated GOAT’s deployment on Boston Dynamics Spot and Hello Robot Stretch robots. In large-scale quantitative experiments on Spot in real-world homes, GOAT outperformed three baselines in both instance matching and navigation efficiency.

GOAT’s multimodal, platform-agnostic design allows goals to be specified as category labels, target images, or language descriptions. Its modular architecture and instance-aware semantic memory distinguish between instances of the same category, which is what allows the robot to navigate to a specific object and not merely to any object of that type. Evaluated in large-scale experiments without pre-computed maps, GOAT also extends to tasks like pick and place and social navigation.
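One way to picture an instance-aware memory is as a store that keeps separate entries for separate objects of the same category. The sketch below is a simplified illustration, not GOAT’s actual data structure: the class names, the `merge_radius` parameter, and the rule of merging detections by map distance are all assumptions.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Instance:
    category: str
    location: tuple  # (x, y) position on the map, in metres
    views: list = field(default_factory=list)  # e.g. image crops or features


class InstanceMemory:
    """Toy memory that separates object instances of the same category."""

    def __init__(self, merge_radius=0.5):
        # Hypothetical rule: detections of one category closer than this
        # distance are treated as the same physical object.
        self.merge_radius = merge_radius
        self.instances = []

    def add_detection(self, category, location, view):
        for inst in self.instances:
            same_category = inst.category == category
            nearby = math.dist(inst.location, location) <= self.merge_radius
            if same_category and nearby:
                inst.views.append(view)  # another view of a known object
                return inst
        inst = Instance(category, location, [view])
        self.instances.append(inst)  # first sighting of a new object
        return inst

    def by_category(self, category):
        return [i for i in self.instances if i.category == category]
```

With a store like this, two chairs in different rooms remain two entries, each with its own views, so a goal such as a target image can be matched against a specific chair.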

Future work on GOAT involves evaluating its performance across more diverse environments and scenarios to gauge its generalizability and robustness. Investigations will target tuning the matching threshold to address challenges during exploration. Subsampling instances based on the goal category will be explored further as a way to improve performance. Ongoing development includes refining the global and local policies and potentially integrating additional techniques for more efficient navigation. Extensive real-world evaluation across different robots and tasks will be needed to validate GOAT’s versatility. Further work could extend GOAT’s applicability beyond navigation to domains like object recognition, manipulation, and interaction.
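The roles of the matching threshold and category-based subsampling can be illustrated with a short sketch. This is a hypothetical simplification: the function `select_goal_instance`, the dictionary layout of an instance, and the caller-supplied `score_fn` (standing in for whatever image or language matcher is used) are assumptions, not GOAT’s API.

```python
def select_goal_instance(instances, score_fn, goal_category=None, threshold=0.5):
    """Return the stored instance that best matches the goal, or None.

    instances: list of dicts with "category" and "views" keys.
    score_fn: maps one stored view to a match score against the goal.
    goal_category: if given, only instances of this category are compared.
    threshold: minimum score for a match to be accepted.
    """
    if goal_category is not None:
        # Subsample by category so fewer, more relevant instances are scored.
        instances = [i for i in instances if i["category"] == goal_category]
    best_instance, best_score = None, threshold
    for inst in instances:
        # An instance is scored by its best-matching stored view.
        score = max((score_fn(view) for view in inst["views"]), default=0.0)
        if score >= best_score:
            best_instance, best_score = inst, score
    # None signals that nothing in memory matches well enough.
    return best_instance
```

In this sketch, a threshold that is too low lets the agent commit to a wrong instance, while one that is too high makes it ignore a correct match already in memory and keep exploring, which is why the threshold is a natural target for tuning.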

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.