Multimodal pre-training advancements address diverse tasks, exemplified by models like LXMERT, UNITER, VinVL, Oscar, VilBert, and VLP. Models such as FLAN-T5, Vicuna, LLaVA, and more enhance instruction-following capabilities. Others like Flamingo, OpenFlamingo, Otter, and MetaVL explore in-context learning. While benchmarks like VQA focus on perception, MMMU stands out by demanding expert-level knowledge and deliberate reasoning in college-level problems. Unique features include comprehensive knowledge coverage, varied image formats, and a distinctive emphasis on subject-specific reasoning, setting it apart from existing benchmarks.

The MMMU benchmark is introduced by researchers from various organizations like IN.AI Research, University of Waterloo, The Ohio State University, Independent, Carnegie Mellon University, University of Victoria, and Princeton University, featuring diverse college-level problems spanning various disciplines. Emphasizing expert-level perception and reasoning, it is a benchmark that exposes substantial challenges for current models.

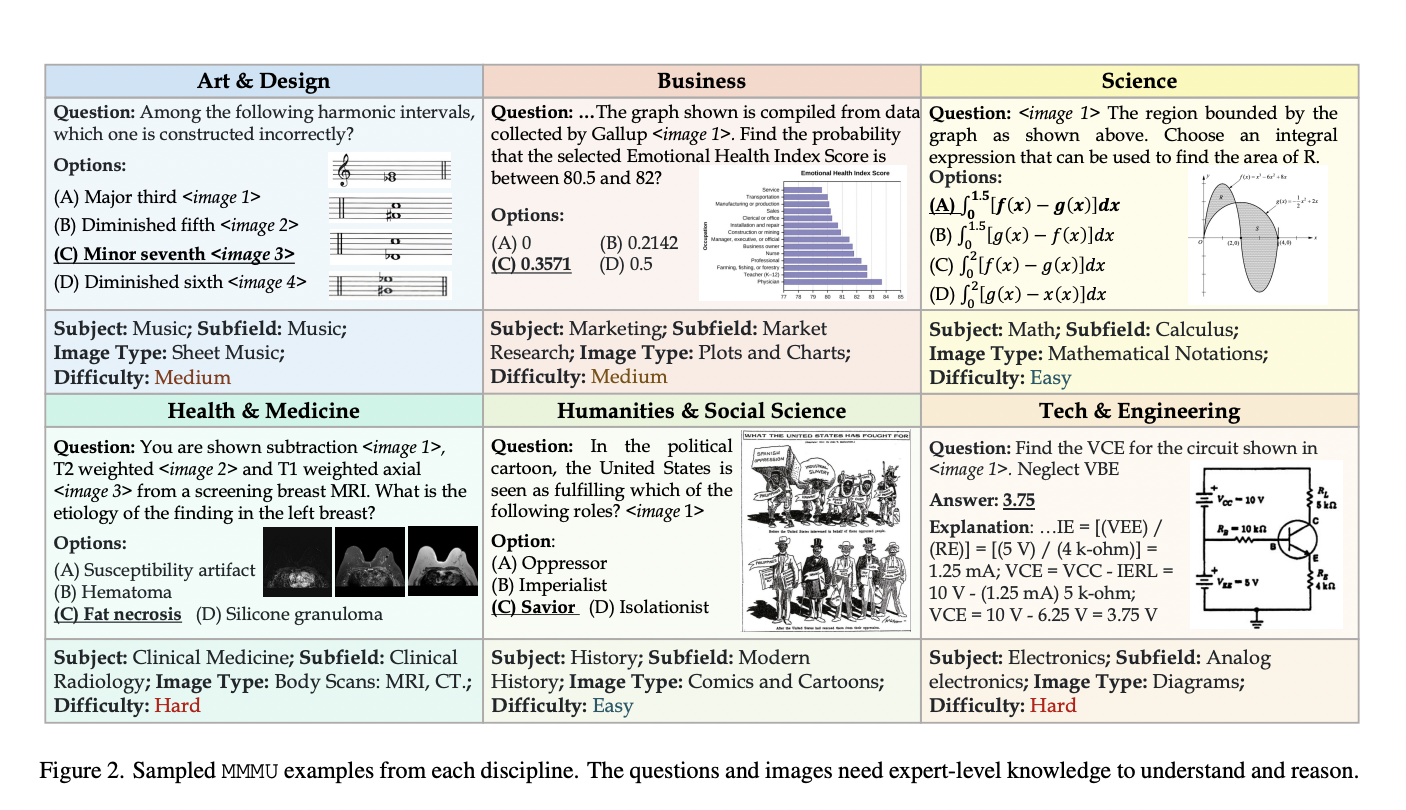

The research highlights the necessity for benchmarks to assess progress towards Expert AGI, surpassing human capabilities. Current standards, like MMLU and AGIEval, focus on text, needing more multimodal challenges. Large Multimodal Models (LMMs) show promise, but existing benchmarks need expert-level domain knowledge. The MMMU benchmark is introduced to bridge this gap, featuring complex college-level problems with diverse image types and interleaved text. It demands expert-level perception and reasoning, presenting a challenging evaluation for LMMs striving for advanced AI capabilities.

The MMMU benchmark, designed for Expert AGI evaluation, comprises 11.5K college-level problems spanning six disciplines and 30 subjects. The data collection involves selecting topics based on visual inputs, engaging student annotators to gather multimodal questions, and implementing quality control. Multiple models, including LLMs and LMMs, undergo evaluation on MMMU in a zero-shot setting, testing their ability to generate precise answers without fine-tuning or few-shot demonstrations.

The MMMU benchmark proves challenging for models, as GPT-4V achieves only 55.7% accuracy, indicating significant room for improvement. Expert-level perception and reasoning demands make it a rigorous evaluation for LLMs and LMMs. Error analysis pinpoints challenges in visual perception, knowledge representation, reasoning, and multimodal understanding, suggesting areas for further research. Covering college-level knowledge with 30 diverse image formats, MMMU underscores the importance of enriching training datasets with domain-specific knowledge to enhance accuracy and applicability in specialized fields for foundation models.

In conclusion, Creating the MMMU benchmark represents a significant advancement in evaluating LMMs for Expert AGI. This benchmark challenges current models to assess basic perceptual skills and complex reasoning, contributing to understanding of progress in Expert AGI development. It emphasizes expert-level performance and reasoning capabilities, highlighting areas for further research in visual perception, knowledge representation, reasoning, and multimodal understanding. Enriching training datasets with domain-specific knowledge is recommended for improved accuracy and applicability in specialized fields.

GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG|GG

Check out the Paper and Project. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.