Large Language Models (LLMs) have proven highly effective in Natural Language Processing (NLP) and Natural Language Understanding (NLU). Well-known LLMs such as GPT, BERT, and PaLM are being used by researchers to build solutions in domains ranging from education and social media to finance and healthcare. Trained on massive amounts of data, these models capture a vast amount of knowledge and have shown strong ability in question answering after tuning, content generation, text summarization, and translation. Despite these capabilities, LLMs still hallucinate, producing plausible but ungrounded information, and they remain weak at numerical reasoning.

Recent research has shown that augmenting LLMs with external tools, including retrieval augmentation, math tools, and code interpreters, is a promising way to overcome these challenges. Evaluating the effectiveness of such tools is difficult, however, because current evaluation methodologies struggle to determine whether the model is merely recalling pre-trained information or genuinely using external tools to solve the problem. To address this limitation, a team of researchers from the College of Computing at the Georgia Institute of Technology in Atlanta, GA, has introduced ToolQA, a question-answering benchmark that assesses how well LLMs use external resources.

ToolQA consists of data from eight domains and defines 13 types of tools that can acquire information from external reference corpora. A question, an answer, reference corpora, and a list of available tools are all included in each instance of ToolQA. The uniqueness of ToolQA lies in the fact that all questions can only be answered by using appropriate tools to extract information from the reference corpus, which thereby minimizes the possibility of LLMs answering questions solely based on internal knowledge and allows for a faithful evaluation of their tool-utilization abilities.
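The four parts of an instance listed above can be pictured as a small record. The sketch below is only an illustration: the class name, field names, tool names, and the sample question are all hypothetical and are not taken from the ToolQA repository.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToolQAInstance:
    """One benchmark item. Field names are illustrative, not ToolQA's actual schema."""
    question: str
    answer: str
    reference_corpora: List[str]  # names of the corpora the tools may query
    available_tools: List[str] = field(default_factory=list)


# A made-up instance: the answer lives in the corpus, not in the model's memory.
example = ToolQAInstance(
    question="What was the departure delay of flight AA10 on 2022-01-05?",
    answer="12",
    reference_corpora=["flights"],
    available_tools=["load_table", "filter_rows", "get_value"],
)
```

Keeping the tool list inside each instance makes it explicit which operations a model is allowed to call when it attempts the question.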

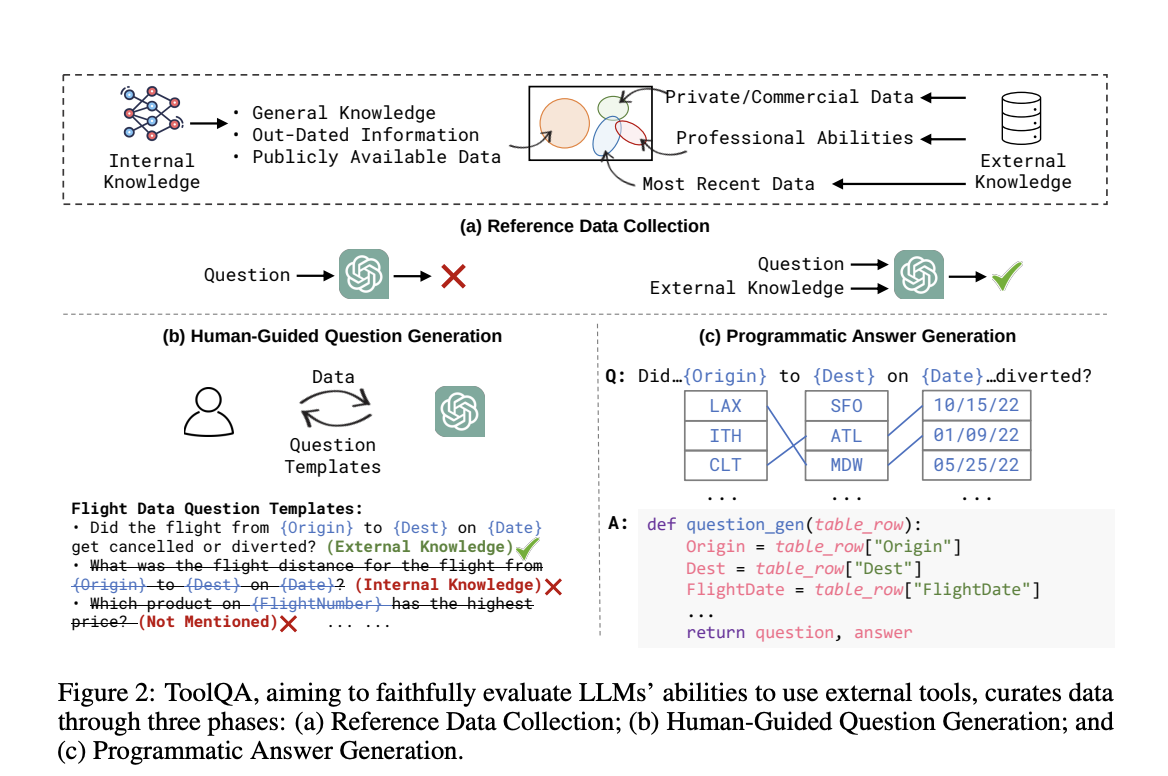

ToolQA is built in three phases: Reference Data Collection, Human-guided Question Generation, and Programmatic Answer Generation. In the first phase, various types of public corpora, including text, tables, and graphs, are gathered from different domains and serve as the reference corpora for tool-based question answering. In the second phase, questions are created that can only be answered by using the tools on the reference corpora, not from the model's internal knowledge. This is done with a template-based question generation method, which combines human-guided template production and validation with question instantiation using tool attributes. In the third phase, operators corresponding to the tools are implemented, and accurate answers to the generated questions are obtained programmatically from the reference corpora.
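The second and third phases can be sketched in a few lines. This is a minimal illustration and not the authors' pipeline: the table contents, the template, and the operator names `filter_rows` and `get_value` are all assumptions made up for the example.

```python
# Tiny stand-in for a tabular reference corpus (all values are made up).
FLIGHTS = [
    {"flight": "AA10", "date": "2022-01-05", "delay_min": 12},
    {"flight": "AA10", "date": "2022-01-06", "delay_min": 0},
    {"flight": "UA22", "date": "2022-01-05", "delay_min": 35},
]

# A human-written question template with slots filled from corpus attributes.
TEMPLATE = "What was the departure delay of flight {flight} on {date}?"


def filter_rows(rows, **conditions):
    """Hypothetical operator for a 'filter' tool: keep rows matching every condition."""
    return [r for r in rows if all(r[k] == v for k, v in conditions.items())]


def get_value(rows, column):
    """Hypothetical operator for a 'get value' tool: read one column from a single row."""
    if len(rows) != 1:
        raise ValueError(f"expected exactly one row, got {len(rows)}")
    return rows[0][column]


def generate_qa(rows, template):
    """Instantiate the template per row and compute each answer with the operators."""
    pairs = []
    for row in rows:
        question = template.format(flight=row["flight"], date=row["date"])
        matched = filter_rows(rows, flight=row["flight"], date=row["date"])
        pairs.append((question, str(get_value(matched, "delay_min"))))
    return pairs


qa_pairs = generate_qa(FLIGHTS, TEMPLATE)
```

Because the answer is computed by the same kind of operators a model would have to chain together, every generated question is guaranteed to be answerable from the corpus and the gold answer does not need manual labeling.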

The team conducted experiments using both standard LLMs and tool-augmented LLMs to answer questions in ToolQA. The results showed that approaches relying only on internal knowledge, such as ChatGPT with or without Chain-of-Thought prompting, have low success rates: about 5% on easy questions and 2% on hard ones. Tool-augmented LLMs such as Chameleon and ReAct performed better by using external tools, with the best of them reaching 43.15% on easy questions and 8.2% on hard questions.
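A success rate like the ones quoted above is the percentage of questions answered correctly. The sketch below assumes a normalized exact-match comparison; the article does not describe the benchmark's scoring rule, so the matching logic and the function names are assumptions for illustration only.

```python
def normalize(text):
    """Lowercase and collapse whitespace so trivial formatting differences do not count."""
    return " ".join(text.strip().lower().split())


def success_rate(predictions, gold_answers):
    """Return the percentage of predictions that exactly match the gold answer."""
    if len(predictions) != len(gold_answers):
        raise ValueError("predictions and gold_answers must have the same length")
    if not gold_answers:
        return 0.0
    hits = sum(normalize(p) == normalize(g) for p, g in zip(predictions, gold_answers))
    return 100.0 * hits / len(gold_answers)


# Two of the three made-up predictions match their gold answers.
rate = success_rate(["12", " Paris", "7"], ["12", "paris", "9"])
```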

The results and error analysis show that ToolQA remains a difficult benchmark for current tool-augmented LLM approaches, particularly on hard questions that require composing several tools in sequence. It is a promising addition to the evaluation of tool-augmented LLMs.

Tanya Malhotra is a final year undergrad from the University of Petroleum & Energy Studies, Dehradun, pursuing BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning.

She is a Data Science enthusiast with good analytical and critical thinking, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.