Multimodal Large Language Models (MLLMs) have demonstrated success as a general-purpose interface in various activities, including language, vision, and vision-language tasks. Under zero-shot and few-shot conditions, MLLMs can perceive generic modalities such as texts, pictures, and audio and produce answers using free-form texts. In this study, they enable multimodal big language models to ground themselves. For vision-language activities, grounding capability can offer a more practical and effective human-AI interface. The model can interpret that picture region with its geographical coordinates, allowing the user to point directly to the item or region in the image rather than entering lengthy text descriptions to refer to it.

The model’s grounding feature also enables it to provide visual responses (i.e., bounding boxes), which can assist other vision-language tasks like understanding referring expressions. Compared to responses that are just text-based, visual responses are more precise and clear up coreference ambiguity. The resulting free-form text response’s grounding capacity may connect noun phrases and referencing terms to the picture areas to produce more accurate, informative, and thorough responses. Researcjers from Microsoft Research introduce KOSMOS-2, a multimodal big language model built on KOSMOS-1 with grounding capabilities. The next-word prediction task is used to train the causal language model KOSMOS-2 based on Transformer.

They build a web-scale dataset of grounded image-text pairings and integrate it with the multimodal corpora in KOSMOS-1 to train the model to use the grounding potential fully. A subset of image-text pairings from LAION-2B and COYO-700M is the foundation for the grounded image-text pairs. They provide a pipeline to extract and connect text spans from the caption, such as noun phrases and referencing expressions, to the spatial positions (such as bounding boxes) of the respective objects or regions in the picture. They translate the bounding box’s geographical coordinates into a string of location tokens, which are subsequently added after the corresponding text spans. The data format acts as a “hyperlink” to link the image’s elements to the caption.

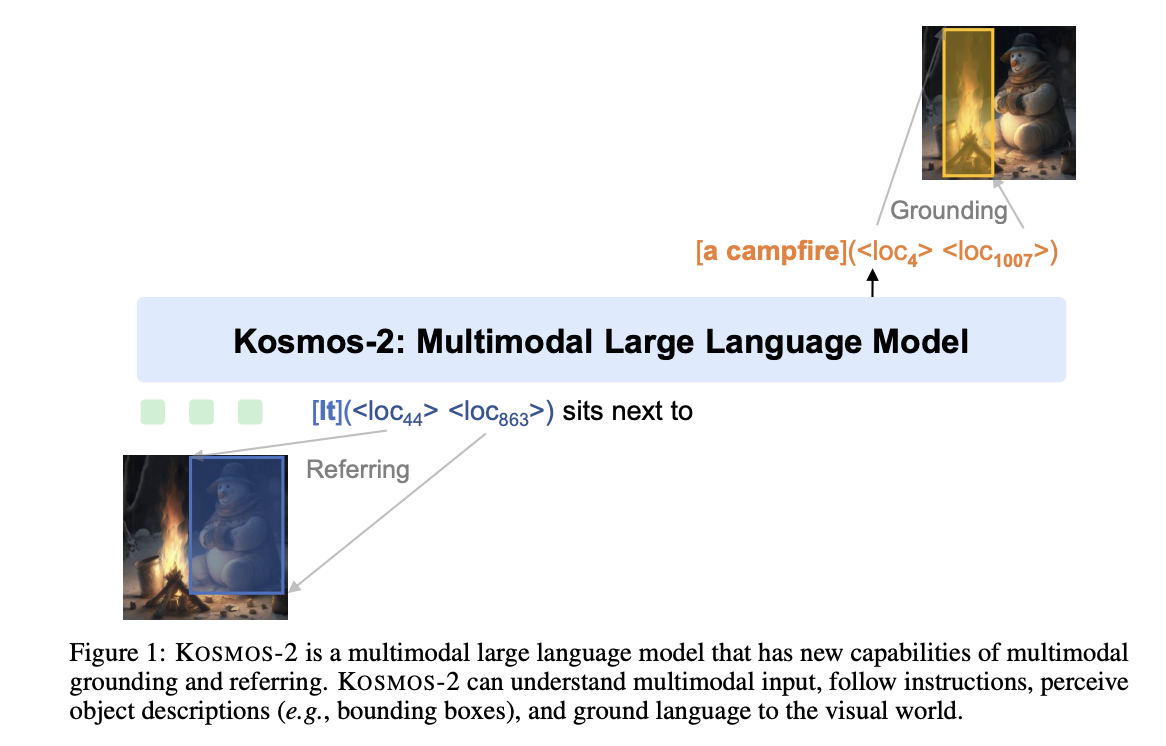

The results of the experiments show that KOSMOS-2 not only performs admirably on the grounding tasks (phrase grounding and referring expression comprehension) and referring tasks (referring expression generation) but also performs competitively on the language and vision-language tasks evaluated in KOSMOS-1. Figure 1 illustrates how including the grounding feature allows KOSMOS-2 to be utilized for additional downstream tasks, such as grounded picture captioning and grounded visual question answering. An online demo is available on GitHub.

Check Out The Paper and Github Link. Don’t forget to join our 25k+ ML SubReddit, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more. If you have any questions regarding the above article or if we missed anything, feel free to email us at Asif@marktechpost.com

Featured Tools:

🚀 Check Out 100’s AI Tools in AI Tools Club

![]()

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence from the Indian Institute of Technology(IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing and is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.