The open-source landscape for Large Language Models (LLMs) has grown rapidly since Meta released the original Llama model and its successor, Llama 2, in 2023. That release spurred the development of many new LLMs, which have significantly shaped natural language processing (NLP). Among the most influential open-source LLMs the paper highlights are Mistral’s sparse Mixture of Experts model Mixtral-8x7B, Alibaba Cloud’s multilingual Qwen1.5 series, Abacus AI’s Smaug, and 01.AI’s Yi models, which focus on data quality.

The emergence of on-device AI models, including LLMs, has transformed the NLP landscape and offers several benefits over traditional cloud-based methods. The full potential, however, comes from combining on-device AI with cloud-based models, an approach called cloud-on-device collaboration. Used together, the two can improve performance, scalability, and flexibility, and computational resources can be allocated efficiently: on-device models handle lighter, private tasks, while cloud-based models take on heavier or more complex operations.
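The division of labor described above can be illustrated with a minimal sketch. It is not taken from the paper: the `Task` type, the `choose_backend` function, and the word-count threshold are all hypothetical, chosen only to show how a router might keep private or light work on the device and send heavier work to the cloud.

```python
from dataclasses import dataclass


@dataclass
class Task:
    prompt: str
    contains_private_data: bool


# Hypothetical cutoff for what counts as a "light" task; not from the paper.
MAX_ON_DEVICE_WORDS = 200


def choose_backend(task: Task, max_on_device_words: int = MAX_ON_DEVICE_WORDS) -> str:
    """Return "on_device" or "cloud" for a task (illustrative heuristic only)."""
    # Private data stays on the device regardless of task size.
    if task.contains_private_data:
        return "on_device"
    # Short prompts are treated as light enough for the on-device model.
    if len(task.prompt.split()) <= max_on_device_words:
        return "on_device"
    # Everything else is sent to the larger cloud-based model.
    return "cloud"
```

A real system would likely use a richer signal than prompt length, such as estimated compute cost or a learned router, but the control flow would look similar.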

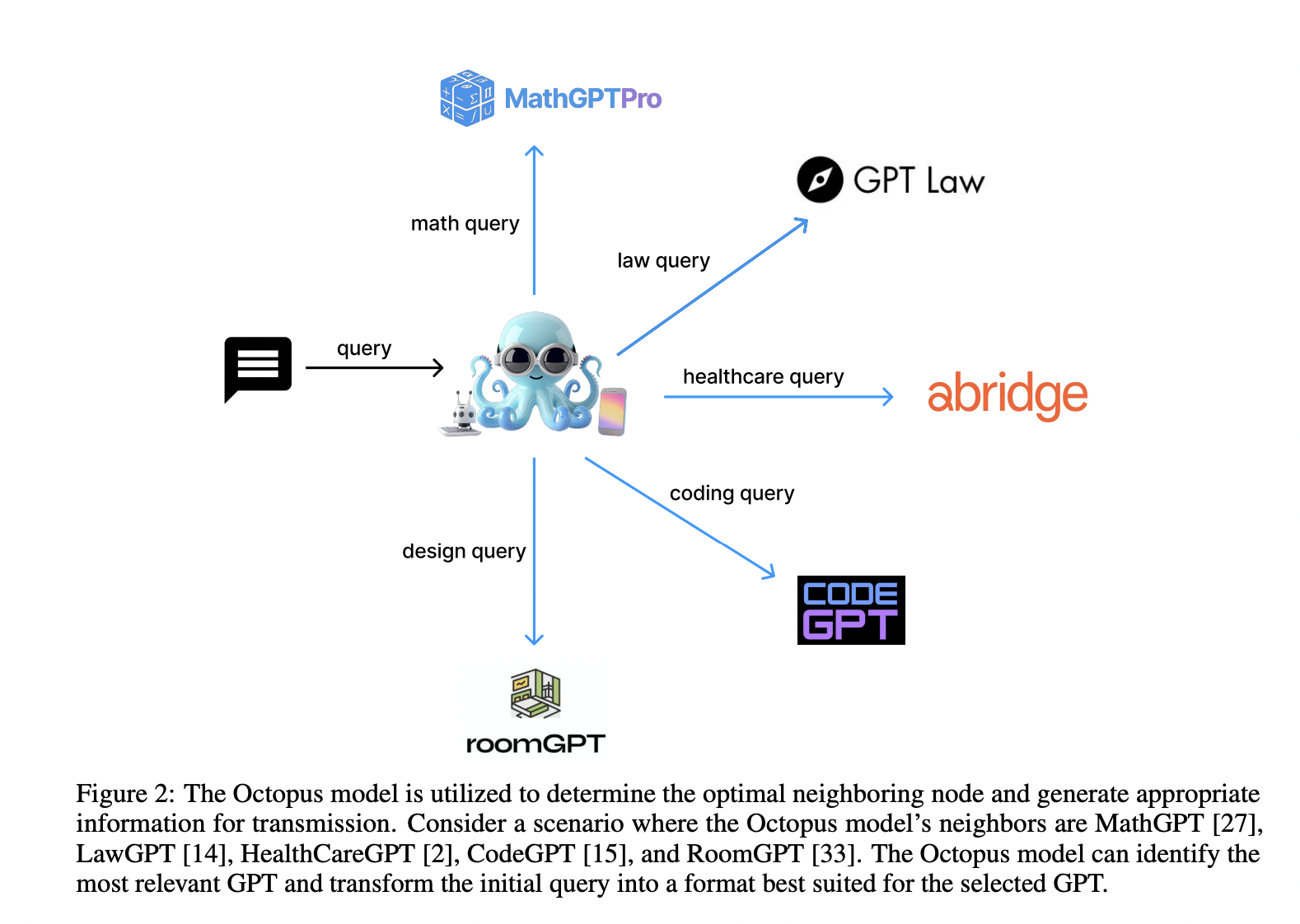

Researchers from Nexa AI introduce Octopus v4, an approach that uses functional tokens to integrate multiple open-source models, each optimized for specific tasks. The functional tokens direct each user query to the most suitable vertical model and reformat the query for better performance. Octopus v4 builds on its predecessors, Octopus v1, v2, and v3, and performs well at model selection, parameter understanding, and query restructuring. The researchers also describe how a graph can serve as a flexible data structure that, together with the Octopus model and functional tokens, coordinates multiple open-source models.

The system is architected as a graph in which each node represents a language model, with multiple Octopus models handling coordination. Its components are:

- Worker node deployment: Each worker node represents a separate language model. Researchers utilized a serverless architecture for these nodes, specifically recommending Kubernetes for its robust autoscaling capabilities.

- Master node deployment: The master node can use a base model with fewer than 10B parameters. The researchers used a 3B model in their experiments.

- Communication: Worker and master nodes are distributed across multiple devices rather than confined to a single unit. An internet connection is therefore needed to transfer data between nodes.
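The master/worker layout in the list above can be sketched as a small in-memory graph. This is an illustrative assumption rather than the researchers' implementation: `WorkerNode`, `MasterNode`, `register`, and `dispatch` are hypothetical names, and each worker's `handler` is a plain Python callable standing in for a network call to a separately deployed model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class WorkerNode:
    name: str
    # Stand-in for a remote call to a deployed language model.
    handler: Callable[[str], str]


@dataclass
class MasterNode:
    # Edges of the graph: functional token -> reachable worker node.
    workers: Dict[str, WorkerNode] = field(default_factory=dict)

    def register(self, token: str, worker: WorkerNode) -> None:
        """Connect a worker to the master under a functional token."""
        self.workers[token] = worker

    def dispatch(self, token: str, query: str) -> str:
        """Forward a (reformatted) query to the worker selected by the token."""
        if token not in self.workers:
            raise KeyError(f"no worker registered for token {token!r}")
        return self.workers[token].handler(query)


# Usage: wire one hypothetical math worker to the master and route a query.
master = MasterNode()
master.register("nexa_4", WorkerNode("math", lambda q: "math model received: " + q))
reply = master.dispatch("nexa_4", "Determine the derivative of x^3 at x = 2.")
```

In the described system the token would be produced by the master model itself and the handler would travel over the network; here both are supplied by hand to keep the sketch self-contained.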

In the evaluation, the Octopus v4 system is compared with other models on the MMLU benchmark to demonstrate its effectiveness. The system uses two compact language models: the 3B-parameter Octopus v4 and a worker language model with up to 8B parameters. An example user query and the model’s response:

Query: Tell me the result of derivative of x^3 when x is 2?

Response: <nexa_4> ('Determine the derivative of the function f(x) = x^3 at the point where x equals 2, and interpret the result within the context of rate of change and tangent slope.')
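To make the response format concrete, the following sketch splits such a response into its functional token and the reformatted query. The parsing code is an assumption built only from the single example above: `parse_functional_call` is a hypothetical helper, and the regular expression accepts both straight and typographic quotes because the exact output format is not specified here.

```python
import re
from typing import Optional, Tuple

# Quote characters accepted around the reformatted query (straight and curly).
QUOTES = "'\"\u2018\u2019"

# Matches "<nexa_N> ('...')" and captures the token name and the quoted text.
PATTERN = re.compile(
    r"<(nexa_\d+)>\s*\(\s*[" + QUOTES + r"](.*)[" + QUOTES + r"]\s*\)",
    re.DOTALL,
)


def parse_functional_call(response: str) -> Optional[Tuple[str, str]]:
    """Return (functional_token, reformatted_query), or None if no match."""
    match = PATTERN.search(response)
    if match is None:
        return None
    return match.group(1), match.group(2)
```

The returned token could then be used to look up the vertical model to call, and the second element is the query that model would receive.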

In conclusion, researchers from Nexa AI proposed Octopus v4, which uses functional tokens to integrate multiple open-source models, each optimized for specific tasks, and evaluated the system against other well-known models on the MMLU benchmark. For future work, the researchers plan to extend the framework with more vertical-specific models and to incorporate advanced Octopus v4 models with multi-agent capability.

Sajjad Ansari is a final year undergraduate from IIT Kharagpur. As a Tech enthusiast, he delves into the practical applications of AI with a focus on understanding the impact of AI technologies and their real-world implications. He aims to articulate complex AI concepts in a clear and accessible manner.