Consistency models represent a category of generative models designed to generate high-quality data in a single step without relying on adversarial training. These models attain optimal sample quality by learning from pre-trained diffusion models and utilizing metrics like LPIPS (learning Perceptual Image Patch Similarity). The quality of consistency models is limited to the pre-trained diffusion model when distillation is used. Furthermore, the LPIPS application introduces unwanted bias into the evaluation process.

Consistency models do not require numerous sampling steps to generate high-quality samples compared to score-based diffusion models. It keeps the main benefits of diffusion models, such as the ability to trade computing power for multi-step sampling that improves sample quality. Additionally, it makes it possible to use a zero-shot strategy to undertake data alteration without any prior exposure.

These models use LPIPS and distillation, which is the process of removing knowledge from diffusion models that have already been trained. There is a drawback: incorporating LPIPS introduces undesired bias into the evaluation process, as distillation establishes a connection between the quality of consistency models and that of their original diffusion models.

In their publication “Techniques for Training Consistency Models,” the OpenAI research team introduced innovative methods that empower consistency models to learn directly from data. These methods outperform the performance of consistency distillation (CD) in producing high-quality samples while simultaneously alleviating the constraints associated with LPIPS.

Consistency distillation (CD) and consistency training have historically been the primary methods used to train consistency models (CT). Prior studies consistently show that CD tends to perform better than CT. But CD limits the sample quality that the consistency model can achieve by requiring the training of a unique diffusion model.

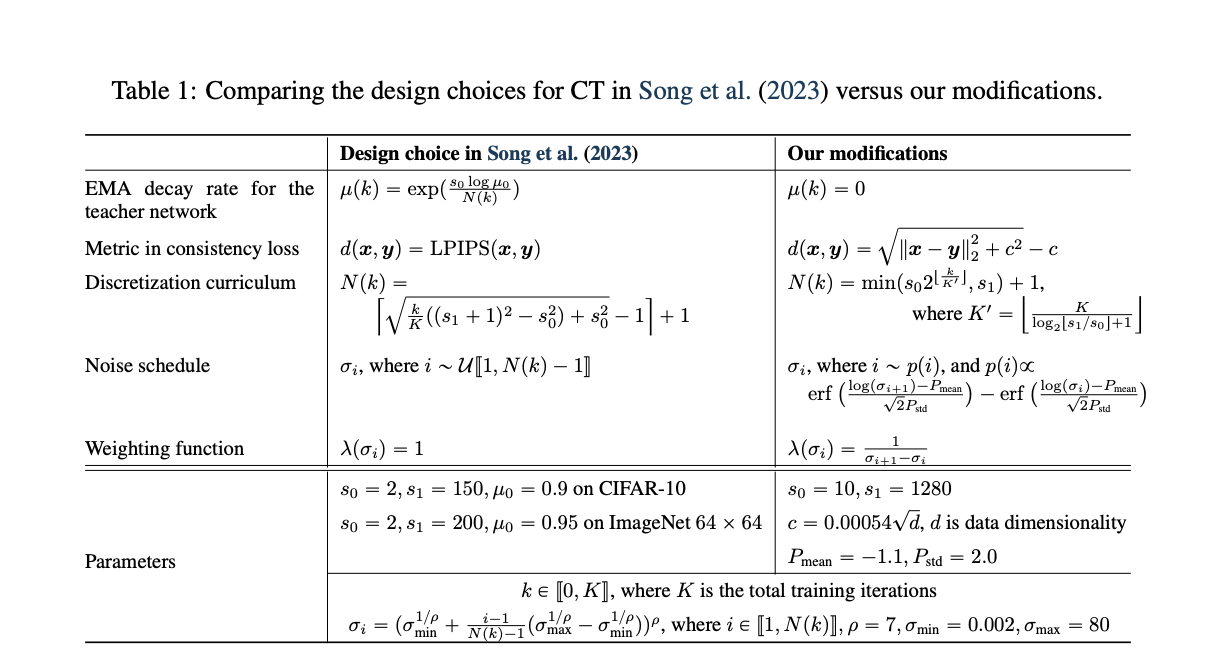

The researchers suggested to train models consistently by adding a lognormal noise schedule. They also recommend increasing the total discretization steps regularly during training. This study improves Contrastive Training (CT) to a level where it performs better than Consistency Distillation (CD). A combination of theoretical understanding and extensive experimentation on the CIFAR-10 dataset led to improvements in CT. The researchers extensively investigate the real-world effects of weighting functions, noise embeddings, and dropout. They also identify an unnoticed flaw in earlier theoretical analyses and propose a straightforward solution: eliminating the Exponential Moving Average (EMA) from the teacher network.

To mitigate the assessment bias caused by LPIPS, the group used pseudo-Huber losses from the robust statistics domain. They also look into improving sample quality by adding more discretization steps. The team uses these realizations to present a straightforward but efficient curriculum for figuring out the total discretization steps.

They found that with the help of these advancements, Contrastive Training (CT) can obtain impressive Frechet Inception Distance (FID) scores of 2.51 and 3.25 for CIFAR-10 and ImageNet 64×64, respectively, all in one sampling step. These scores show remarkable improvements of 3.5 and 4 times, respectively, and exceed those obtained by Consistency Distillation (CD).

The improved methods implemented for CT effectively overcome its previous drawbacks, yielding outcomes on par with state-of-the-art diffusion models and Generative Adversarial Networks (GANs). This achievement highlights consistency models’ considerable potential as a stand-alone and exciting category within the generative model space.

Check out the Paper. All Credit For This Research Goes To the Researchers on This Project. Also, don’t forget to join our 32k+ ML SubReddit, 40k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

If you like our work, you will love our newsletter..

We are also on Telegram and WhatsApp.

Rachit Ranjan is a consulting intern at MarktechPost . He is currently pursuing his B.Tech from Indian Institute of Technology(IIT) Patna . He is actively shaping his career in the field of Artificial Intelligence and Data Science and is passionate and dedicated for exploring these fields.