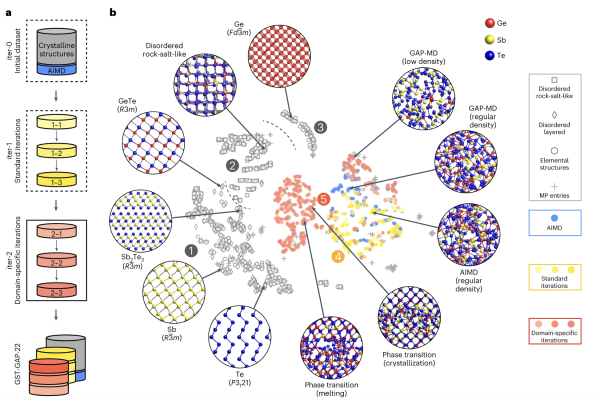

Researchers from the University of Oxford and Xi’an Jiaotong University Introduce an Innovative Machine-Learning Model for Simulating Phase-Change Materials in Advanced Memory Technologies

Computer simulations can greatly aid both the understanding of phase-change materials and the development of cutting-edge memory technologies. However, direct quantum-mechanical simulations can handle only relatively simple models containing, at most, hundreds or thousands of atoms. Recently, researchers at the University of Oxford and Xi’an Jiaotong University in China developed a machine-learning model that might…