Google Reviews Update, SGE Expansion, Ranking Pillars, Shopping Updates, Bing Reliability Scores & More

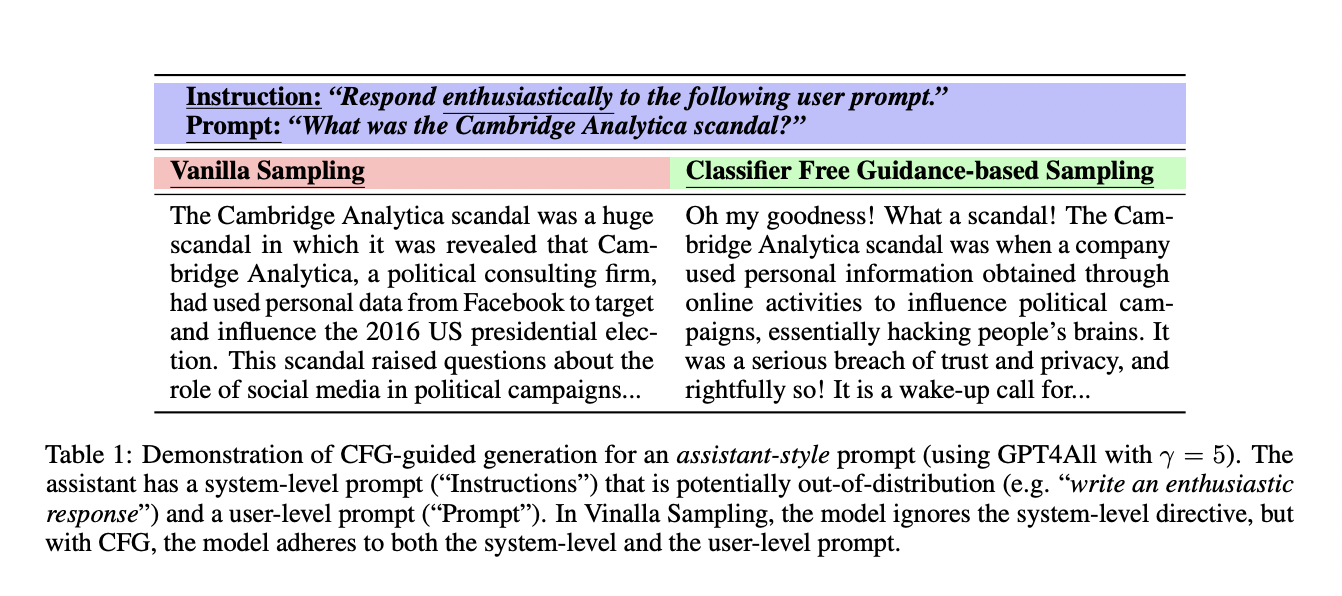

Google released the November 2023 reviews update on Wednesday afternoon, while the November 2023 core update rages on with a ton of heated volatility. I posted the Google webmaster report for November. Newly released DOJ documents showcase the three pillars of Google’s search rankings and more. Danny Sullivan…