Perplexity, an AI startup, has launched two new large language models (LLMs), pplx-7b-online and pplx-70b-online, aimed at improving information retrieval. The company describes them as the first publicly accessible online LLMs offered through an API. Offline LLMs such as Claude 2 struggle with up-to-the-minute information like the latest sports scores. The pplx online models instead draw on live internet data, so they can give accurate, current answers in real time.

What sets Perplexity’s pplx online models apart is that they are offered through an API. Google Bard, ChatGPT, and Bing Chat have added online browsing, but none of them exposes that capability via an API. Perplexity attributes this capability to its in-house search infrastructure, which covers an extensive collection of high-quality websites, prioritizes authoritative sources, and uses advanced ranking mechanisms to surface relevant, credible information in real time. These real-time “snippets” are fed into the LLMs so that their responses stay up to date. pplx-7b-online and pplx-70b-online are built on the mistral-7b and llama2-70b base models, respectively.

Beyond connecting the models to live search, Perplexity AI has fine-tuned them using diverse, high-quality training sets curated by in-house data contractors. This fine-tuning is ongoing and targets three qualities: helpfulness, factuality, and freshness.

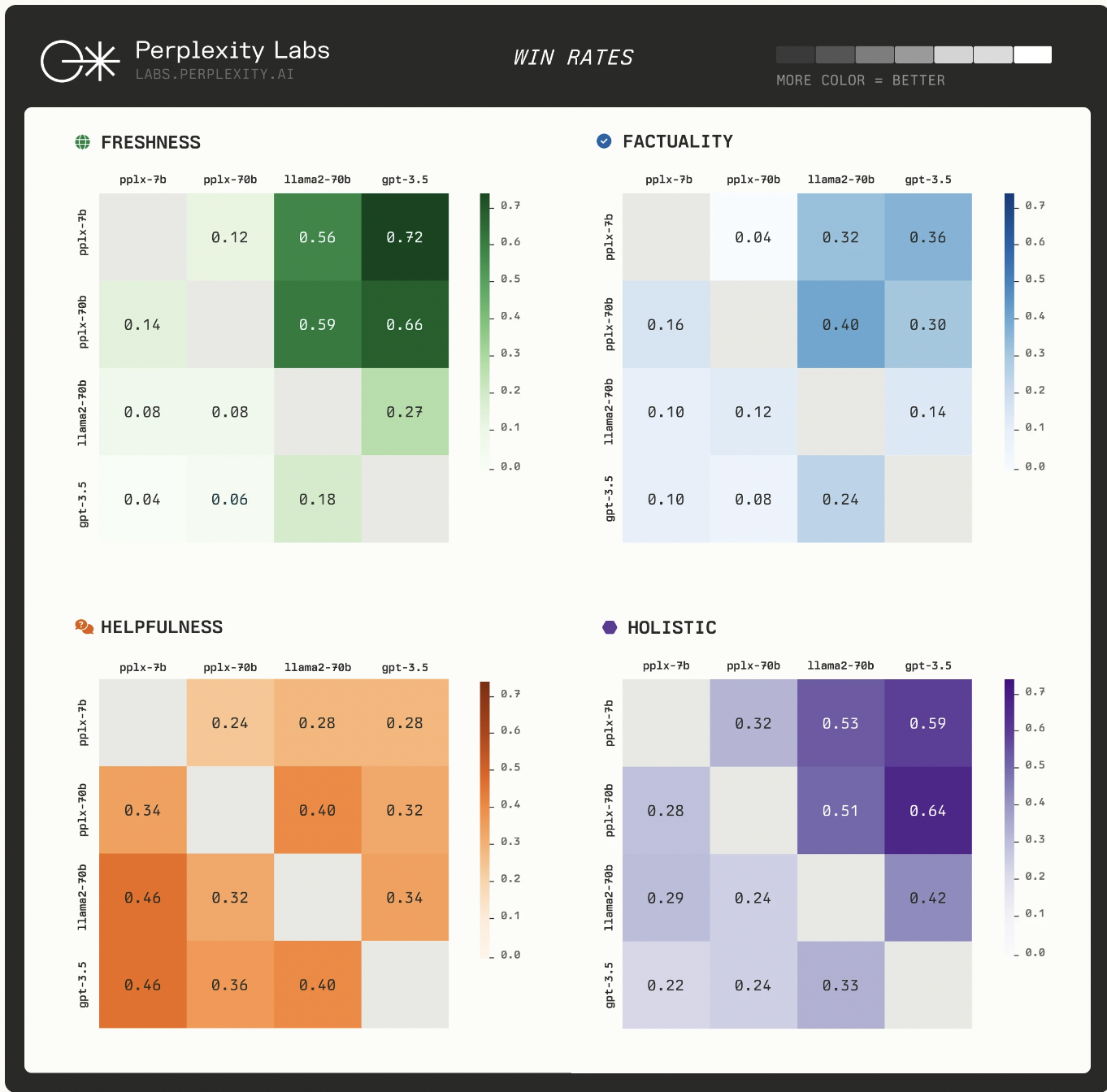

To evaluate the models, Perplexity AI compared their responses on a diverse set of prompts, judging helpfulness, factuality, and freshness (up-to-dateness). The comparisons pitted the pplx models against OpenAI’s gpt-3.5 and Meta AI’s llama2-70b, measuring both overall preference and performance on each individual criterion.

According to Perplexity, both pplx-7b-online and pplx-70b-online consistently outperformed these counterparts on freshness, factuality, and overall preference. On freshness, for example, pplx-7b-online and pplx-70b-online achieved estimated Elo scores of 1100.6 and 1099.6, respectively, ranking above gpt-3.5 and llama2-70b.
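Elo scores are easiest to interpret as expected head-to-head win rates. The sketch below assumes the conventional Elo logistic curve with a 400-point scale factor; the article does not say exactly how Perplexity fitted its ratings. Under that assumption, the 1-point gap between 1100.6 and 1099.6 corresponds to an expected score of about 50.1%, meaning the two pplx models are essentially tied on freshness. The ratings for gpt-3.5 and llama2-70b are not given here, so their gap to the pplx models cannot be computed the same way.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score of A against B (win probability, counting ties as half).

    Assumes the standard Elo logistic curve:
        E_A = 1 / (1 + 10 ** ((R_B - R_A) / 400))
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Freshness Elo estimates reported for the two pplx online models.
pplx_7b_online = 1100.6
pplx_70b_online = 1099.6

p = elo_expected_score(pplx_7b_online, pplx_70b_online)
print(f"pplx-7b-online vs pplx-70b-online on freshness: {p:.3f}")
```

Because the curve is symmetric, the two expected scores for any pair always sum to 1. A gap of a few hundred points is needed before one model is strongly favored.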

Effective immediately, developers can use Perplexity’s API to build applications on top of these models. Pricing is usage-based, with special plans available for early testers.
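For illustration, here is a minimal sketch of how a client might build a request to one of the online models using only the Python standard library. The endpoint URL, the OpenAI-style `messages` payload, and the response shape in the commented-out section are assumptions about Perplexity’s API, not details confirmed by this article. Only the model names `pplx-7b-online` and `pplx-70b-online` come from the announcement. `build_request` is a hypothetical helper, and `YOUR_API_KEY` is a placeholder.

```python
import json
import urllib.request

# Assumption: an OpenAI-style chat completions endpoint; check Perplexity's API docs.
API_URL = "https://api.perplexity.ai/chat/completions"


def build_request(api_key: str, question: str,
                  model: str = "pplx-7b-online") -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a POST request for one question."""
    payload = {
        "model": model,  # "pplx-7b-online" or "pplx-70b-online"
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request("YOUR_API_KEY", "What was the final score of last night's game?")

# Sending requires a valid key and network access. The response layout below
# is likewise an assumption (OpenAI-compatible "choices" list):
# with urllib.request.urlopen(req) as resp:
#     body = json.load(resp)
#     print(body["choices"][0]["message"]["content"])
```

Building the request separately from sending it keeps the example runnable offline. Switching to the larger model is just a matter of passing `model="pplx-70b-online"`.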

With this release, Perplexity brings a new approach to AI-driven information retrieval. By making pplx-7b-online and pplx-70b-online available through an accessible API, it addresses a key limitation of offline LLMs. In Perplexity’s own evaluations, the models also delivered more accurate, up-to-date, and factual answers than the models they were compared against.


Niharika is a technical consulting intern at Marktechpost. She is a third-year undergraduate currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is a highly enthusiastic individual with a keen interest in machine learning, data science, and AI, and an avid reader of the latest developments in these fields.