Despite recent advances in large language model (LLM) reasoning, LLMs still struggle with hierarchical multi-step reasoning tasks such as developing sophisticated programs. Human programmers, unlike other token generators, have (usually) learned to break difficult tasks into manageable components that work on their own (modular) and work together (compositional). As a bonus, if human-generated tokens cause problems in one function, that part of the software can be rewritten without affecting the rest of the application. Code LLMs, by contrast, are naively expected to produce token sequences free of errors.

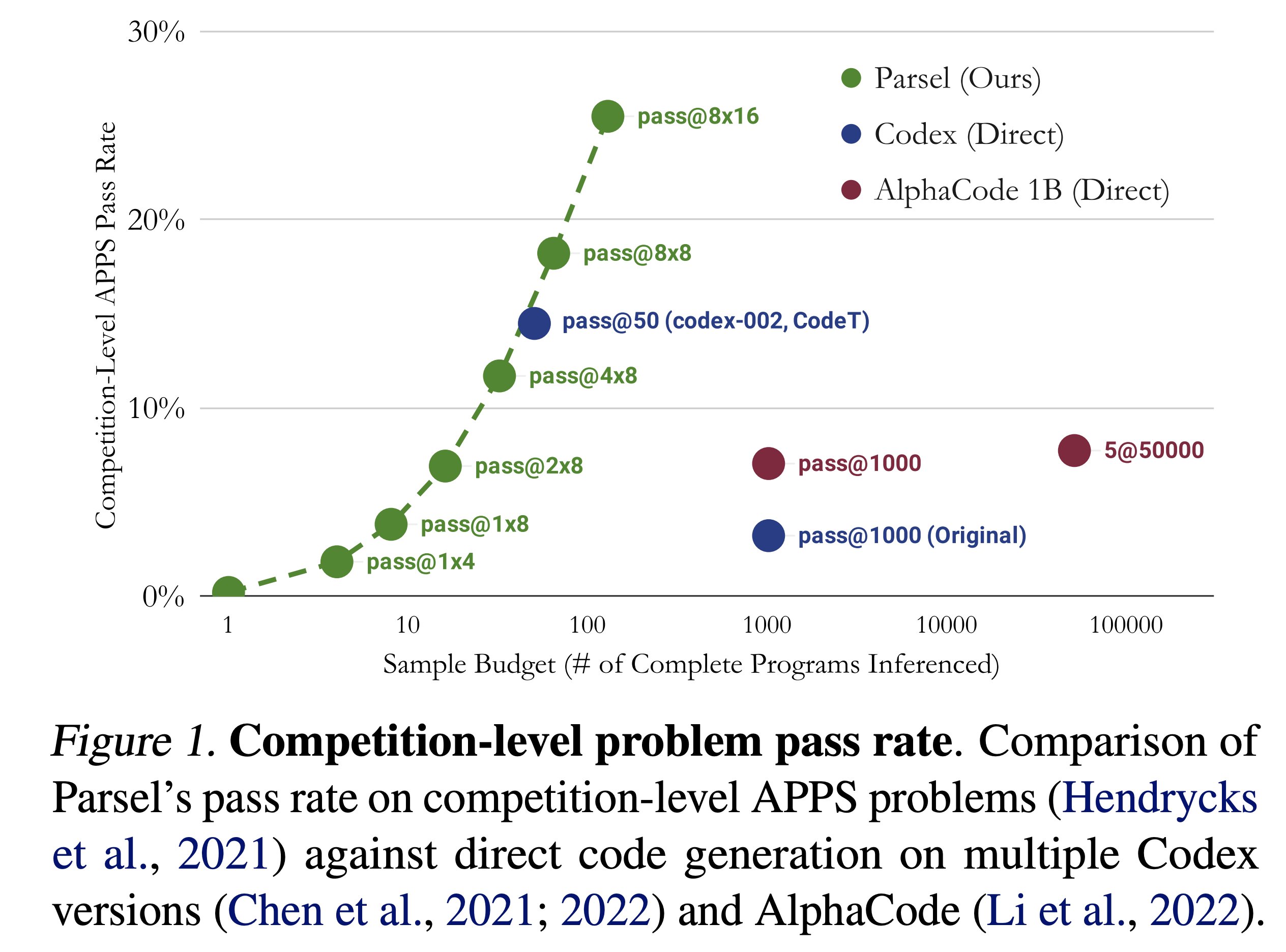

This prompted a recent Stanford University study to explore using LLMs for problem decomposition and compositional solution construction. The researchers propose Parsel, a compiler that accepts a specification consisting of natural-language function descriptions and constraints that define the desired behavior of the implemented functions. With Parsel, coders can write programs in plain language that solve competition-level coding problems, outperforming the previous SoTA by more than 75%.
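To make the idea of such a specification concrete, here is a minimal Python sketch of how one might be represented and turned into a prompt for a code LLM. This is not Parsel's actual syntax or API: the `FunctionSpec` class, its fields, and the `build_prompt` helper are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass, field


@dataclass
class FunctionSpec:
    """Hypothetical container for one function in a specification."""
    name: str
    args: list[str]
    description: str  # natural-language description of the function
    # Constraints as (arguments, expected output) pairs.
    constraints: list[tuple[tuple, object]] = field(default_factory=list)
    # Functions this one is allowed to call.
    children: list["FunctionSpec"] = field(default_factory=list)

    def signature(self) -> str:
        return f"def {self.name}({', '.join(self.args)})"


def build_prompt(spec: FunctionSpec) -> str:
    """Assemble a prompt from the description and the children's signatures."""
    lines = [f"# Helper available: {c.signature()} - {c.description}"
             for c in spec.children]
    lines.append(f"# Task: {spec.description}")
    lines.append(spec.signature() + ":")
    return "\n".join(lines)


square = FunctionSpec("square", ["x"], "return x multiplied by itself",
                      constraints=[((3,), 9)])
sum_squares = FunctionSpec("sum_squares", ["xs"],
                           "return the sum of the squares of the numbers in xs",
                           constraints=[(([1, 2, 3],), 14)],
                           children=[square])

prompt = build_prompt(sum_squares)
print(prompt)
```

The point of the structure is that each function carries only its own description, its own constraints, and the signatures of what it may call, so each piece can be implemented and replaced independently.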

A code LLM is given a function’s description and the signatures of the functions it depends on, and is asked to generate implementations of that function. When constraints are supplied, the compiler searches through combinations of the generated implementations until it finds one that satisfies them.
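The search over implementation combinations can be sketched roughly as follows. This is an illustrative brute-force version under simplifying assumptions, not the compiler's actual algorithm: the candidate functions are hand-written stand-ins for LLM samples, and `find_working_combination` is a hypothetical helper name.

```python
from itertools import product


# Hand-written stand-ins for LLM-generated candidates (assumption).
def square_a(x):
    return x * x


def square_b(x):
    return x * 2  # buggy candidate


def sum_squares_a(xs, square):
    return sum(square(x) for x in xs)


def sum_squares_b(xs, square):
    return sum(xs)  # buggy candidate: ignores the helper


candidates = {
    "square": [square_b, square_a],
    "sum_squares": [sum_squares_b, sum_squares_a],
}

# Constraints on the top-level function: (input, expected output).
constraints = [([1, 2, 3], 14), ([2, 2], 8)]


def find_working_combination(candidates, constraints):
    """Try every combination of candidates; return the first that passes."""
    names = list(candidates)
    for combo in product(*(candidates[n] for n in names)):
        impl = dict(zip(names, combo))
        try:
            ok = all(impl["sum_squares"](xs, impl["square"]) == expected
                     for xs, expected in constraints)
        except Exception:
            ok = False  # a crashing combination simply fails
        if ok:
            return impl
    return None


result = find_working_combination(candidates, constraints)
print({name: fn.__name__ for name, fn in result.items()})
```

Note that the number of combinations is the product of the candidate counts per function, which is why the search grows exponentially with the number of functions in a program.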

Previous studies have shown that, unlike humans, code language models struggle to produce programs that perform many small tasks in sequence. Parsel addresses this problem by separating decomposition from implementation. Although the researchers set out to enable natural language coding, they found that LLMs also excel at writing Parsel.

Decomposing an abstract plan until it can be solved automatically is a common pattern in human reasoning, and the way Parsel programs are generated and implemented reflects it; this compositional structure is also useful for language models. In this study, the team demonstrates that LLMs can generate Parsel from a small number of examples and that their solutions outperform state-of-the-art methods on competition-level problems from the APPS dataset. Excitingly, step-by-step robotic plans that LLMs produce from high-level tasks using Parsel are more than two-thirds as accurate as a zero-shot planner baseline.

To evaluate the efficacy of Parsel, Gabriel Poesia, an experienced competitive coder, used it to crack a slew of APPS challenges typically seen in coding competitions. In 6 hours, he found solutions to 5 of 10 problems, including 3 that GPT-3 had previously failed on.

By formulating Parsel as a general-purpose framework, the researchers show that it can also be used for theorem proving and other tasks that require algorithmic reasoning.

They plan to implement automatic unit test generation in the near future. One approach they mention is to search for edge cases and check whether the group of functions that agree on all existing tests also agree on any new tests. This would avoid the exponential growth in implementation combinations, which could make automatic decomposition possible. They also aim to adjust the language model’s “confidence threshold,” since descriptions need to be kept clear and concise for more crucial programs or sections of programs.
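One possible reading of the test-generation idea is sketched below: take the candidates that pass every existing test, then probe new inputs and flag any on which those candidates disagree. This is only an interpretation of the description above, not the researchers' implementation; the candidate functions and the helper names `passing` and `distinguishing_inputs` are hypothetical.

```python
# Hand-written stand-ins for candidate implementations (assumption).
def abs_a(x):
    return x if x >= 0 else -x


def abs_b(x):
    return x  # wrong on negative inputs


def abs_c(x):
    return max(x, -x)


candidates = [abs_a, abs_b, abs_c]
existing_tests = [(0, 0), (3, 3)]  # (input, expected output)


def passing(cands, tests):
    """Keep only the candidates that agree with every existing test."""
    return [f for f in cands if all(f(i) == o for i, o in tests)]


def distinguishing_inputs(cands, probe_inputs):
    """Return probe inputs on which the candidates produce different outputs."""
    found = []
    for x in probe_inputs:
        outputs = {f(x) for f in cands}
        if len(outputs) > 1:
            found.append(x)
    return found


survivors = passing(candidates, existing_tests)
new_cases = distinguishing_inputs(survivors, range(-2, 3))
print([f.__name__ for f in survivors], new_cases)
```

Inputs that split the surviving candidates are natural places to add a new test, because a single extra constraint there rules out whole groups of implementations at once.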

All credit for this research goes to the researchers on this project.

Tanushree Shenwai is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Bhubaneswar. She is a data science enthusiast with a keen interest in the applications of artificial intelligence across various fields, and she is passionate about exploring new advancements in technology and their real-life applications.