Anonymization is a critical problem in the context of face recognition and identification algorithms. As these technologies are increasingly built into products, ethical concerns have emerged regarding the privacy and security of individuals. The ability to recognize and identify people from their facial features raises questions about consent, control over personal data, and potential misuse. The current tagging systems in social networks do not adequately address the problem of unwanted or unapproved faces appearing in photos.

Controversies and ethical concerns have surrounded state-of-the-art face recognition and identification algorithms. Previous systems lacked proper generalization and accuracy guarantees, leading to unintended consequences. Counter-manipulation techniques such as blurring and masking have been used to defeat face recognition, but they alter the image content and are easily detectable. Adversarial generation and obfuscation methods have also been developed, but face recognition algorithms keep improving and are becoming more robust to such attacks.

In this context, a recent paper by a research team from Binghamton University proposes a privacy-enhancing system that uses deepfakes to mislead face recognition systems without breaking image continuity. The authors introduce the concept of “My Face My Choice” (MFMC), in which individuals control which photos they appear in, and their faces are replaced with dissimilar deepfakes for unauthorized viewers.

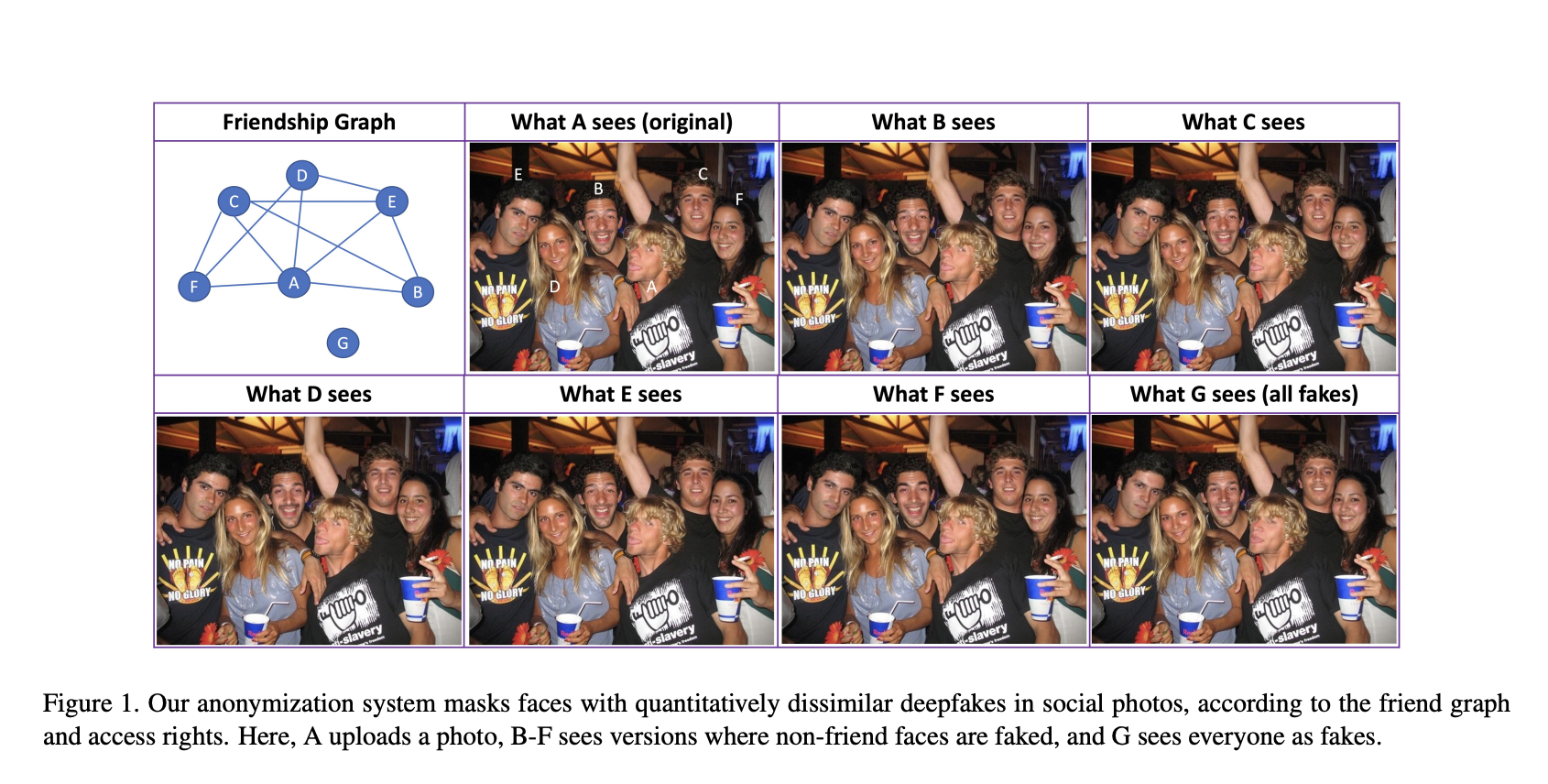

The proposed method, MFMC, aims to create deepfake versions of photos with multiple people based on complex access rights granted by individuals in the picture. The system operates in a social photo-sharing network, where access rights are defined per face rather than per image. When an image is uploaded, friends of the uploader can be tagged, while the remaining faces are replaced with deepfakes. These deepfakes are carefully selected based on various metrics, ensuring they are quantitatively dissimilar to the original faces but maintain contextual and visual continuity. The authors conduct extensive evaluations using different datasets, deepfake generators, and face recognition approaches to verify the effectiveness and quality of the proposed system. MFMC represents a significant advancement in utilizing face embeddings to create useful deepfakes as a defense against face recognition algorithms.
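To make the idea of a "quantitatively dissimilar" replacement concrete, here is a minimal sketch, not the authors' implementation. It assumes each face is already represented by an embedding vector and that dissimilarity is measured by cosine distance. The article only says the deepfakes are selected "based on various metrics", so the choice of metric and the names `cosine_distance` and `pick_dissimilar_face` are assumptions made for illustration.

```python
import math
from typing import Dict, Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity of two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("embeddings must be non-zero")
    return 1.0 - dot / (norm_a * norm_b)


def pick_dissimilar_face(
    original: Sequence[float],
    candidates: Dict[str, Sequence[float]],
) -> str:
    """Pick the candidate synthetic face farthest from the original.

    Assumption: "dissimilar" means maximal cosine distance in embedding
    space. The actual system may combine several metrics.
    """
    if not candidates:
        raise ValueError("no candidate faces given")
    return max(
        candidates,
        key=lambda name: cosine_distance(original, candidates[name]),
    )


# Toy 2-D embeddings; real face embeddings have hundreds of dimensions.
original_face = [1.0, 0.0]
synthetic_faces = {
    "synthetic_a": [0.9, 0.1],
    "synthetic_b": [-1.0, 0.0],
    "synthetic_c": [0.0, 1.0],
}
chosen = pick_dissimilar_face(original_face, synthetic_faces)
```

In this toy example the candidate pointing in the opposite direction of the original embedding is chosen. A fuller version would also need to account for the contextual and visual continuity the article mentions, which this sketch leaves out.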

The paper lays out the requirements for a deepfake generator that can transfer the identity of a synthetic target face to an original source face while preserving facial and environmental attributes. The authors integrate multiple deepfake generators, such as Nirkin et al., FTGAN, FSGAN, and SimSwap, into their framework. They also introduce three access models, Disclosure by Proxy, Disclosure by Explicit Authorization, and Access Rule Based Disclosure, to balance social media participation and individual privacy.
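The following sketch illustrates what access rights defined per face rather than per image could look like in code. It is a simplified, hypothetical model: the `FaceRegion` type, its fields, and `render_plan` are invented for illustration and do not reproduce the exact semantics of the three access models named above, which the article does not spell out.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


@dataclass
class FaceRegion:
    """One detected face in an uploaded photo (hypothetical structure)."""
    face_id: str
    owner: Optional[str] = None  # tagged user, or None if untagged
    allowed_viewers: Set[str] = field(default_factory=set)


def render_plan(faces: List[FaceRegion], viewer: str) -> Dict[str, str]:
    """Decide, face by face, what a given viewer should see.

    Assumption: a face is shown as-is only to its owner or to viewers the
    owner has authorized; every other face is swapped for a deepfake.
    """
    plan: Dict[str, str] = {}
    for face in faces:
        authorized = face.owner is not None and (
            viewer == face.owner or viewer in face.allowed_viewers
        )
        plan[face.face_id] = "original" if authorized else "deepfake"
    return plan


photo = [
    FaceRegion("f1", owner="alice", allowed_viewers={"bob"}),
    FaceRegion("f2", owner="carol"),
    FaceRegion("f3"),  # untagged bystander
]
plan_for_bob = render_plan(photo, "bob")
plan_for_carol = render_plan(photo, "carol")
```

The point of the design is that the same photo yields a different rendering per viewer: here Bob would see Alice's real face, while Carol would see only her own.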

The evaluation of the MFMC system measures the reduction in face recognition accuracy using seven state-of-the-art face recognition systems and compares the results with existing privacy-preserving face alteration methods, such as CIAGAN and DeepPrivacy. According to the authors, MFMC is effective at reducing face recognition accuracy. The paper also positions MFMC ahead of these methods in system design, productization, and evaluation against face recognition systems.
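As a rough illustration of the quantity being measured, the sketch below computes the drop in identification accuracy between original and altered photos. The function names and the toy labels are hypothetical, and the numbers are not results from the paper.

```python
from typing import Sequence


def accuracy(predicted: Sequence[str], actual: Sequence[str]) -> float:
    """Fraction of faces whose predicted identity matches the true one."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def accuracy_reduction(
    before: Sequence[str],
    after: Sequence[str],
    actual: Sequence[str],
) -> float:
    """Accuracy on original photos minus accuracy on altered photos."""
    return accuracy(before, actual) - accuracy(after, actual)


# Toy data: identities a recognizer predicts before and after face swapping.
true_ids = ["a", "b", "c", "d"]
pred_on_originals = ["a", "b", "c", "x"]
pred_on_deepfakes = ["x", "b", "y", "z"]
drop = accuracy_reduction(pred_on_originals, pred_on_deepfakes, true_ids)
```

A larger drop means the altered photos are harder for the recognizer to link back to the real identities.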

In conclusion, the article presents the MFMC system as a novel approach to address the privacy concerns associated with face recognition and identification algorithms. By leveraging deepfakes and access rights granted by individuals, MFMC allows users to control which photos they appear in, replacing their faces with dissimilar deepfakes for unauthorized viewers. The evaluation of MFMC demonstrates its effectiveness in reducing face recognition accuracy, surpassing existing privacy-preserving face alteration methods. This research represents a significant step towards enhancing privacy in the era of face recognition technology and opens up possibilities for further advancements in this field.

Mahmoud is a PhD researcher in machine learning. He also holds a bachelor’s degree in physical science and a master’s degree in telecommunications and networking systems. His current areas of research concern computer vision, stock market prediction and deep learning. He produced several scientific articles about person re-identification and the study of the robustness and stability of deep networks.