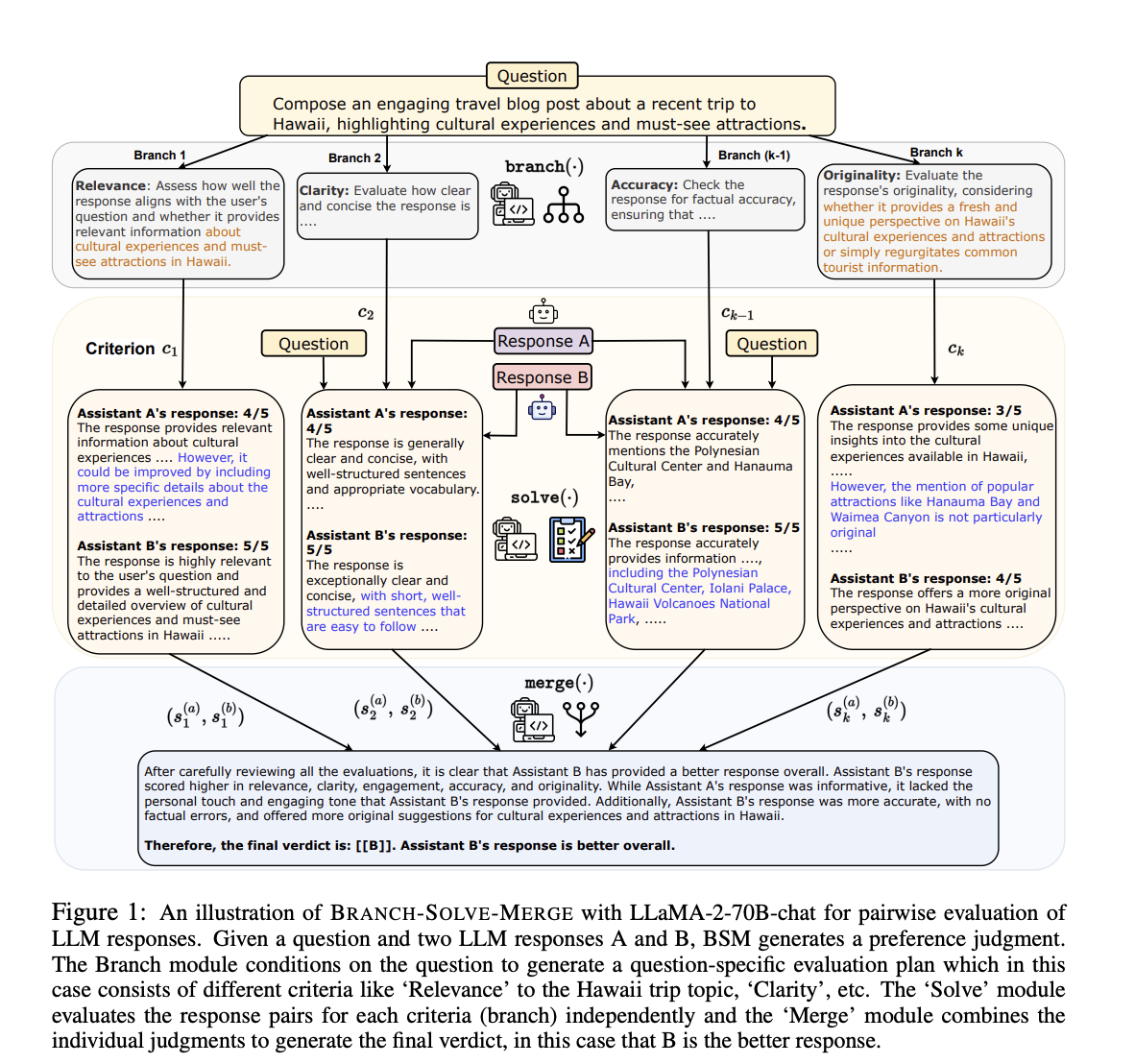

BRANCH-SOLVE-MERGE (BSM) is an LLM program for improving Large Language Models (LLMs) on complex natural language tasks. It consists of branch, solve, and merge modules that plan, solve, and combine sub-tasks. Applied to LLM response evaluation and constrained text generation with models such as Vicuna, LLaMA-2-chat, and GPT-4, BSM improves human-LLM agreement, reduces biases, and enables LLaMA-2-chat to match or surpass GPT-4 in most domains. It also improves story coherence and constraint satisfaction in constrained story generation.

LLMs excel at multifaceted language tasks but often struggle with complexity. BSM, an LLM program, divides a task into steps and parameterizes each step with its own prompt. It departs from earlier sequential approaches by targeting tasks, such as LLM evaluation and constrained text generation, that benefit from parallel decomposition. This makes it useful for evaluating LLMs on complex text generation tasks, particularly planning-based and constrained ones, where holistic evaluation is needed.

Researchers from UNC-Chapel Hill and Meta introduced BSM to tackle complex, multi-objective tasks, where LLMs often fall short despite their strength in text generation. BSM decomposes a task into parallel sub-tasks using its branch, solve, and merge modules. Applied to LLM response evaluation and constrained text generation, it improves correctness, consistency, and constraint satisfaction across LLMs such as LLaMA-2-chat, Vicuna, and GPT-4.

BSM handles complex language tasks with three modules: branch, solve, and merge. Applied to LLM response evaluation and constrained text generation, BSM improves correctness and consistency and reduces biases. It raises human-LLM agreement by up to 26% and constraint satisfaction by 12%. Because it is a decomposition-based approach, BSM can be applied to various LLMs, which makes it promising for improving LLM evaluation across different tasks and scales.
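The branch, solve, and merge flow can be sketched in a few lines of Python. This is only an illustrative sketch, not the researchers' implementation: the prompt wording, the function names, and the `toy_llm` stand-in are all assumptions made for the example, and a real setup would pass a callable that queries an actual model.

```python
from typing import Callable, List

# Hypothetical interface: a function that takes a prompt and returns text.
LLM = Callable[[str], str]


def branch(llm: LLM, task: str) -> List[str]:
    """Ask the model for a plan and read one sub-task per line."""
    plan = llm(f"BRANCH\nList the sub-tasks for: {task}")
    return [line.strip("- ").strip() for line in plan.splitlines() if line.strip()]


def solve(llm: LLM, task: str, sub_task: str) -> str:
    """Solve a single sub-task with its own prompt."""
    return llm(f"SOLVE\nTask: {task}\nSub-task: {sub_task}")


def merge(llm: LLM, task: str, solutions: List[str]) -> str:
    """Combine the partial solutions into one final output."""
    joined = "\n".join(solutions)
    return llm(f"MERGE\nTask: {task}\nPartial solutions:\n{joined}")


def branch_solve_merge(llm: LLM, task: str) -> str:
    sub_tasks = branch(llm, task)
    # Each sub-task is solved independently of the others.
    solutions = [solve(llm, task, sub_task) for sub_task in sub_tasks]
    return merge(llm, task, solutions)


def toy_llm(prompt: str) -> str:
    """Deterministic stand-in for a model (an assumption for this demo)."""
    if prompt.startswith("BRANCH"):
        return "- relevance\n- clarity"
    if prompt.startswith("SOLVE"):
        sub_task = prompt.splitlines()[-1].split(": ", 1)[1]
        return f"{sub_task}: ok"
    return "merged: " + "; ".join(prompt.splitlines()[3:])


if __name__ == "__main__":
    print(branch_solve_merge(toy_llm, "Evaluate two answers"))
```

Because the solve calls do not depend on one another, they could also be issued concurrently, which is where the parallel decomposition described in the article would pay off.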

BSM improves LLM-human agreement, achieving a 12-point improvement for LLaMA-2-70B-chat on both turn-1 and turn-2 questions. It outperforms Self-Consistency and reduces position bias and length bias by 34%. BSM enables weaker open-source models like LLaMA-2 to compete with GPT-4. Its gains extend across domains: it matches or approaches GPT-4 in different categories while improving agreement scores and reducing biases. It also does well at grading reference-based questions, surpassing LLaMA-2-70B-chat and GPT-4 in categories like Math, where it improves agreement scores and mitigates position bias.
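The article does not spell out how agreement and position bias are computed, so the following is a hedged sketch under common assumptions for pairwise evaluation: agreement is taken as the fraction of samples where the LLM verdict equals the human verdict, and position bias as the fraction of samples where the verdict changes when the two responses swap places. The `Judge` signature, the verdict labels, and both function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical judge: (question, first_response, second_response) -> verdict,
# where the verdict is "first", "second", or "tie".
Judge = Callable[[str, str, str], str]


def agreement(llm_votes: Sequence[str], human_votes: Sequence[str]) -> float:
    """Fraction of samples where the LLM verdict matches the human verdict."""
    if not llm_votes or len(llm_votes) != len(human_votes):
        raise ValueError("vote lists must be non-empty and of equal length")
    matches = sum(a == b for a, b in zip(llm_votes, human_votes))
    return matches / len(llm_votes)


def position_bias(judge: Judge, samples: List[Tuple[str, str, str]]) -> float:
    """Fraction of samples whose verdict flips when response order is swapped."""
    if not samples:
        raise ValueError("samples must be non-empty")
    flip = {"first": "second", "second": "first", "tie": "tie"}
    inconsistent = 0
    for question, resp_a, resp_b in samples:
        original = judge(question, resp_a, resp_b)
        # Re-run with the order swapped, then map the verdict back
        # to the original ordering before comparing.
        swapped = flip[judge(question, resp_b, resp_a)]
        inconsistent += original != swapped
    return inconsistent / len(samples)
```

A judge that always prefers whichever response appears first would score a position bias of 1.0 under this definition, while a judge whose verdict depends only on the content of the responses would score 0.0.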

The BSM method addresses critical challenges in LLM evaluation and text generation, enhancing coherence, planning, and task decomposition. BSM’s branch, solve, and merge modules improve LLM response evaluation and constrained text generation, leading to better correctness, consistency, and human-LLM agreement. BSM also mitigates biases, enhances story coherence, and improves constraint satisfaction. It proves effective across different LLMs and domains, even outperforming GPT-4 in various categories. BSM is a versatile and promising approach to enhance LLM performance in multiple tasks.

Check out the Paper. All credit for this research goes to the researchers on this project.

Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.