The employment of numerous deduction rules and the construction of subproofs allows the complexity of proofs to develop infinitely in many deductive reasoning tasks, such as medical diagnosis or theorem proving. It is not practical to find data to cover guarantees of all sizes due to the huge proof space. Consequently, starting with basic proofs, a general reasoning model should be able to extrapolate to more complicated ones.

A team of NYU and Google AI researchers has demonstrated that LLMs can engage in deductive reasoning when trained with in-context learning (ICL) and chain-of-thought (CoT) prompting. A few deduction rules, such as modus ponens, were the primary emphasis of earlier research. The assessment is also in-demonstration, meaning the test case is drawn from the same distribution as the in-context demonstrations.

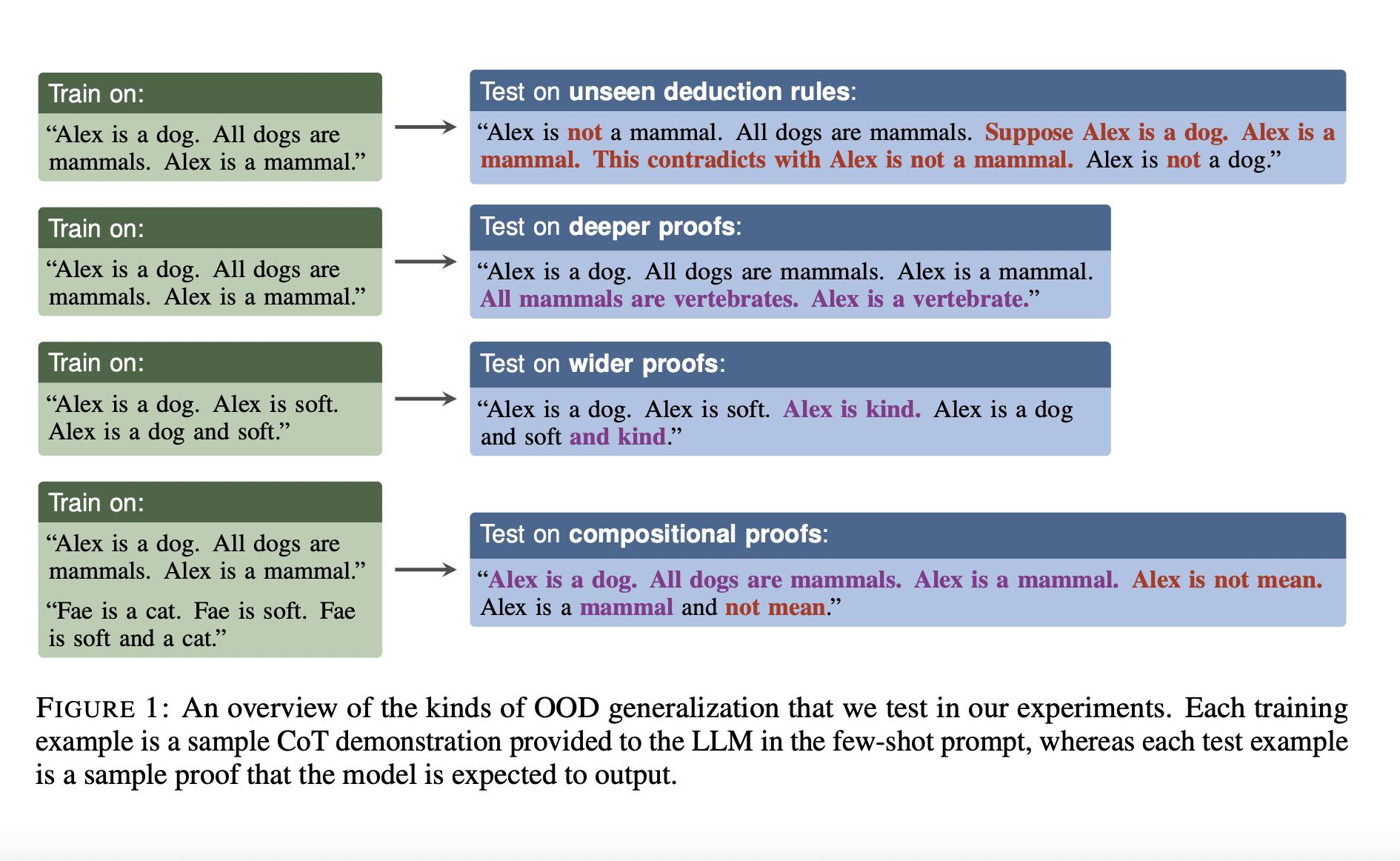

The ability of LLMs to generalize to proofs that are more sophisticated than their demonstrations is the subject of a new study conducted by researchers from New York University, Google, and Boston University. Academics classify proofs according to three dimensions:

- The number of premises used in each stage of the demonstration.

- The length of the sequential chain of steps that make up the proof.

- The rules of deduction that are employed.

Its total size is a function of all three dimensions.

The group builds upon previous research in two important respects to gauge LLMs’ general deductive reasoning capacity. Beyond modus ponens, they test whether LLMs have mastered all the deduction rules. Their reasoning abilities are tested in two ways:

- Depth- and width-generalization involves reasoning over lengthier proofs than those provided in-context examples.

- Compositional generalization involves using numerous deduction rules in a single proof.

According to their research, reasoning tasks benefit most from in-context learning when presented with basic examples that illustrate a variety of deduction rules. To prevent the model from becoming overfit, the in-context examples must include deduction principles it is unfamiliar with, such as proof by cases and proof by contradiction. Additionally, these examples should be accompanied by distractors.

According to their findings, CoT can induce OOD reasoning in LLMs that generalize to compositional proofs. These LLMs include GPT-3.5 175B, PaLM 540B, LLaMA 65B, and FLAN-T511B, varying in scale and training aims. This finding is surprising, considering the wealth of literature arguing that LLMs lack compositional generalizability. ICL generalizes in a manner distinct from supervised learning, specifically gradient descent on in-context samples. Giving in-context samples from the same distribution as the test example is clearly worse, as they were discovered in multiple instances. For instance, when the in-context examples incorporate specific deduction rules, the researchers sometimes saw greater generalization to compositional proofs.

It appears that pretraining does not educate the model to create hypothetical subproofs. Without explicit examples, LLMs cannot generalize to certain deduction rules (e.g., proof by cases and contradiction). The relationship between model size and performance is weak. With instruction tailoring and lengthier pretraining, smaller models (not the smallest, but comparable) can compete with larger ones.

To further comprehend the ICL and CoT triggering process, the researchers draw attention to a crucial area for future investigation. They discovered that the best in-context examples often came from a different distribution than the test example itself, even for a specific test example. Bayesian inference and gradient descent do not account for this. They are interested in finding out if simpler examples work better, even though the test case is somewhat sophisticated. Additional research is required to understand how to further characterize extrapolation from specific instances.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|

AI|AI|AI|AI|AI|AI||AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|AI|

AI|AI||AI|AI|AI|AI|AI|AI|AI|AI|AI

Dhanshree Shenwai is a Computer Science Engineer and has a good experience in FinTech companies covering Financial, Cards & Payments and Banking domain with keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today’s evolving world making everyone’s life easy.