It is widely recognized that artificial intelligence (AI) has made significant strides in recent years, producing remarkable breakthroughs. However, AI does not perform equally well on every task. For instance, while AI can surpass human performance in certain visual tasks such as facial recognition, it can also make baffling errors in image processing and classification, which shows how difficult these tasks remain. As a result, understanding how such systems work and how they arrive at their decisions has become a subject of great interest among researchers and developers. Like the human brain, AI systems rely on particular strategies to analyze and categorize images. However, the precise mechanisms behind these processes remain elusive, so the models effectively behave as black boxes.

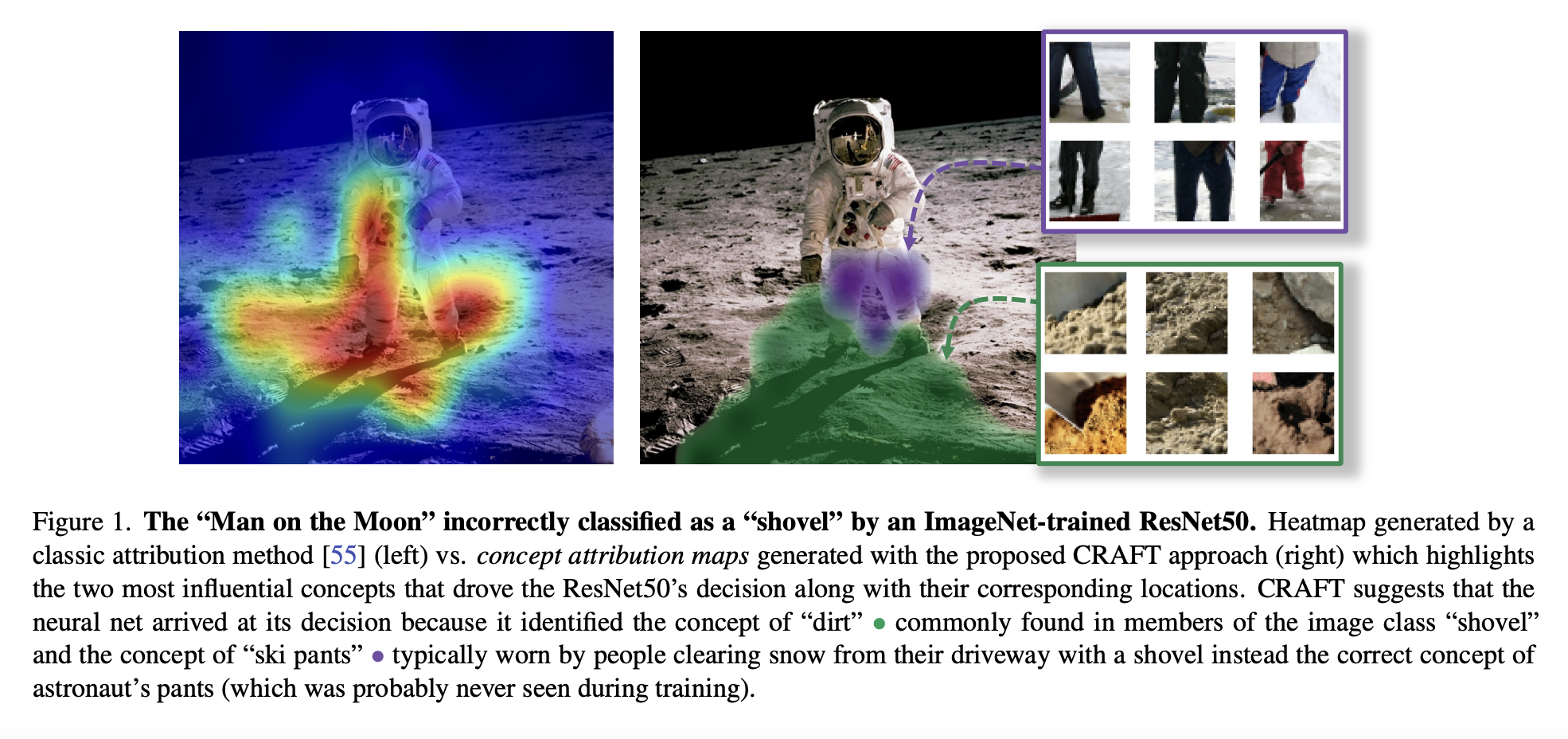

Thus, there is a growing demand for explainability methods that interpret the decisions of modern machine learning models, particularly neural networks. In this context, attribution methods, which generate heatmaps indicating how strongly individual pixels influence a model’s decision, have gained popularity. However, recent research has exposed their limitations: they highlight only the most salient regions of an image, revealing where the model looks without explaining what the model sees in those areas. To demystify deep neural networks and uncover the strategies AI systems use to process images, researchers from the Carney Institute for Brain Science at Brown University and computer scientists from the Artificial and Natural Intelligence Toulouse Institute in France collaborated to develop CRAFT (Concept Recursive Activation FacTorization for Explainability). The tool aims to reveal both “what” an AI model focuses on and “where” it focuses during decision-making, thereby highlighting the differences in how the human brain and a computer vision system interpret visual information. The study was presented at the Conference on Computer Vision and Pattern Recognition (CVPR) 2023, held in Canada.
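The article does not say which attribution methods the researchers examined. To illustrate the general idea of a pixel heatmap, the sketch below uses occlusion, one simple attribution technique swapped in here for illustration: it sets each pixel to a baseline value and records how much the model's score drops. The 4×4 image, the `WEIGHTS` table and `toy_model` are all hypothetical stand-ins for a real classifier.

```python
# Hypothetical toy "classifier": scores a 4x4 grayscale image with a fixed
# weighted sum of pixels. The weights make it depend only on the top-left corner.
WEIGHTS = [
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]


def toy_model(image):
    """Return a scalar class score: the weighted sum of pixel intensities."""
    return sum(
        w * p
        for w_row, p_row in zip(WEIGHTS, image)
        for w, p in zip(w_row, p_row)
    )


def occlusion_map(model, image, baseline=0.0):
    """Heatmap in which each cell is the score drop when that pixel is set to `baseline`."""
    base_score = model(image)
    heatmap = []
    for i, row in enumerate(image):
        heat_row = []
        for j in range(len(row)):
            occluded = [r[:] for r in image]  # copy so the input image is untouched
            occluded[i][j] = baseline
            heat_row.append(base_score - model(occluded))
        heatmap.append(heat_row)
    return heatmap


image = [[0.5] * 4 for _ in range(4)]
for heat_row in occlusion_map(toy_model, image):
    print(heat_row)
```

The heatmap lights up only the top-left corner, telling us where this toy model looks but nothing about what pattern it detects there. That is precisely the gap the article attributes to heatmap-style methods.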

As mentioned earlier, attribution methods show which regions of an image an AI system relies on, but identifying those regions without explaining why they matter falls short of a comprehensive explanation for humans. CRAFT addresses this limitation by using modern machine learning techniques to disentangle the complex, high-dimensional visual representations learned by neural networks. To make these results accessible, the researchers have also built a user-friendly website where anyone can explore and visualize the concepts that neural networks use to classify objects. The researchers further highlight that CRAFT lets users see not only which concepts an AI system uses to represent an image and what the model perceives within specific regions, but also how these concepts rank in importance. This offers a valuable resource for understanding the decision-making process of AI systems and making their classification outcomes more transparent.
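The article does not name the technique, but CRAFT's name ("Activation FacTorization") points to it: the paper decomposes a network's activations on image crops with non-negative matrix factorization (NMF), so that each factor can be read as a visual concept. The sketch below is a generic NMF using Lee-Seung multiplicative updates in plain Python, not the authors' code. The activation matrix and the two "stripes"/"fur" concepts are made-up toy values, and the helper names (`matmul`, `transpose`, `nmf`) are hypothetical.

```python
import random


def matmul(X, Y):
    """Plain-Python matrix product of X (n x m) and Y (m x p)."""
    return [
        [sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
        for i in range(len(X))
    ]


def transpose(X):
    return [list(col) for col in zip(*X)]


def nmf(A, k, iters=2000, eps=1e-12, seed=0):
    """Factorize non-negative A (n x p) as U (n x k) @ W (k x p) with Lee-Seung
    multiplicative updates for the squared error ||A - UW||^2.
    Rows of W act as "concepts"; rows of U give how much of each concept
    is present in each input crop. Updates keep every entry non-negative."""
    rng = random.Random(seed)
    n, p = len(A), len(A[0])
    U = [[rng.random() + 0.1 for _ in range(k)] for _ in range(n)]
    W = [[rng.random() + 0.1 for _ in range(p)] for _ in range(k)]
    for _ in range(iters):
        # W <- W * (U^T A) / (U^T U W)
        Ut = transpose(U)
        num, den = matmul(Ut, A), matmul(matmul(Ut, U), W)
        W = [[W[a][j] * num[a][j] / (den[a][j] + eps) for j in range(p)] for a in range(k)]
        # U <- U * (A W^T) / (U W W^T)
        Wt = transpose(W)
        num, den = matmul(A, Wt), matmul(U, matmul(W, Wt))
        U = [[U[i][a] * num[i][a] / (den[i][a] + eps) for a in range(k)] for i in range(n)]
    return U, W


# Hypothetical activations of 4 image crops over 4 channels, built from two
# made-up concepts: channels 0-1 ("stripes") and channels 2-3 ("fur").
A = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [2.0, 2.0, 1.0, 1.0],
    [1.0, 1.0, 3.0, 3.0],
]
U, W = nmf(A, k=2)
for concept in W:
    print([round(v, 2) for v in concept])
```

Each learned row of `W` should load mainly on one channel pair. The order and scale of the concepts are arbitrary, as in any NMF. Non-negativity is the design choice that makes the factors readable as additive "parts" of an activation.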

In essence, the researchers’ key contributions can be summarized in three points. First, the team devised a recursive approach to identify and decompose concepts across multiple layers, giving a more complete picture of the network’s internal components. Second, they introduced a method to estimate the importance of concepts using Sobol indices. Third, they used implicit differentiation to create Concept Attribution Maps, which visualize how concepts relate to pixel-level features. The team also ran a series of experimental evaluations to substantiate the effectiveness of their approach. The results showed that CRAFT outperformed the attribution methods it was compared against, establishing it as a stepping stone toward further research on concept-based explainability methods.
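Sobol indices come from global sensitivity analysis. The total-order index $S_{T_i}$ is the fraction of an output's variance that involves input $i$, either alone or through interactions with other inputs. The sketch below estimates it with the Jansen Monte Carlo estimator, $S_{T_i} \approx \frac{1}{2N\,\mathrm{Var}(f)} \sum_j \big(f(A_j) - f(A_B^{(i)}{}_j)\big)^2$, where $A_B^{(i)}$ is sample matrix $A$ with column $i$ taken from an independent matrix $B$. It treats three hypothetical concept coefficients as uniform inputs in $[0, 1]$ to a made-up linear class score (`concept_score`). This is a simplification in the spirit of the paper: its exact perturbation masks and sampling scheme are not reproduced here.

```python
import random


def concept_score(coeffs):
    """Hypothetical class score: concept 0 matters most, concept 2 not at all."""
    return 4.0 * coeffs[0] + 1.0 * coeffs[1] + 0.0 * coeffs[2]


def total_sobol_indices(f, dim, n_samples=20000, seed=0):
    """Monte Carlo estimate of total-order Sobol indices (Jansen estimator)
    for f with `dim` independent inputs drawn uniformly from [0, 1]."""
    rng = random.Random(seed)
    A = [[rng.random() for _ in range(dim)] for _ in range(n_samples)]
    B = [[rng.random() for _ in range(dim)] for _ in range(n_samples)]
    fA = [f(a) for a in A]
    mean = sum(fA) / n_samples
    var = sum((y - mean) ** 2 for y in fA) / (n_samples - 1)
    indices = []
    for i in range(dim):
        sq = 0.0
        for a, b, ya in zip(A, B, fA):
            ab = a[:]      # row of A with input i replaced by B's value
            ab[i] = b[i]
            sq += (ya - f(ab)) ** 2
        indices.append(sq / (2 * n_samples * var))
    return indices


indices = total_sobol_indices(concept_score, dim=3)
ranking = sorted(range(3), key=lambda i: indices[i], reverse=True)
print([round(s, 3) for s in indices], "ranking:", ranking)
```

For this linear toy score, the exact total indices are $16/17$, $1/17$ and $0$, so the estimates should land close to those values and rank concept 0 first. Sorting concepts by such indices is one way to obtain the kind of importance ranking the article describes.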

The researchers also emphasized the importance of understanding how computers perceive images. Deeper insight into the visual strategies of AI systems can help researchers improve the accuracy and performance of vision-based tools. This understanding also helps defend against adversarial attacks and other cyberattacks, because it shows how attackers can fool AI systems with subtle changes to pixel intensities that are barely perceptible to humans. Looking ahead, the researchers are excited about a future in which computer vision systems could surpass human capabilities. Given these systems’ potential to tackle unresolved challenges such as cancer diagnosis and fossil recognition, the team believes they could transform numerous fields.

Khushboo Gupta is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT) Goa. She is passionate about the fields of Machine Learning, Natural Language Processing and Web Development. She enjoys learning more about the technical field by participating in several challenges.