By combining several activities into one instruction, instruction tuning enhances generalization to new tasks. Such capacity to respond to open-ended questions has contributed to the recent chatbot explosion since ChatGPT 2. Visual encoders like CLIP-ViT have recently been added to conversation agents as part of visual instruction-tuned models, allowing for human-agent interaction based on pictures. However, they need help comprehending text inside images, maybe due to the training data’s predominance of natural imagery (e.g., Conceptual Captions and COCO). However, reading comprehension is essential for daily visual perception in humans. Fortunately, OCR techniques make it possible to recognize words from photos.

The computation (larger context lengths) is increased (naively) by adding recognized texts to the input of visual instruction-tuned models without completely using the encoding capacity of visual encoders. To do this, they suggest gathering instruction-following data that necessitates comprehension of words inside pictures to improve the visual instruction-tuned model end-to-end. By combining manually given directions (such as “Identify any text visible in the image provided.”) with the OCR results, they specifically first gather 422K noisy instruction-following data using text-rich3 images.

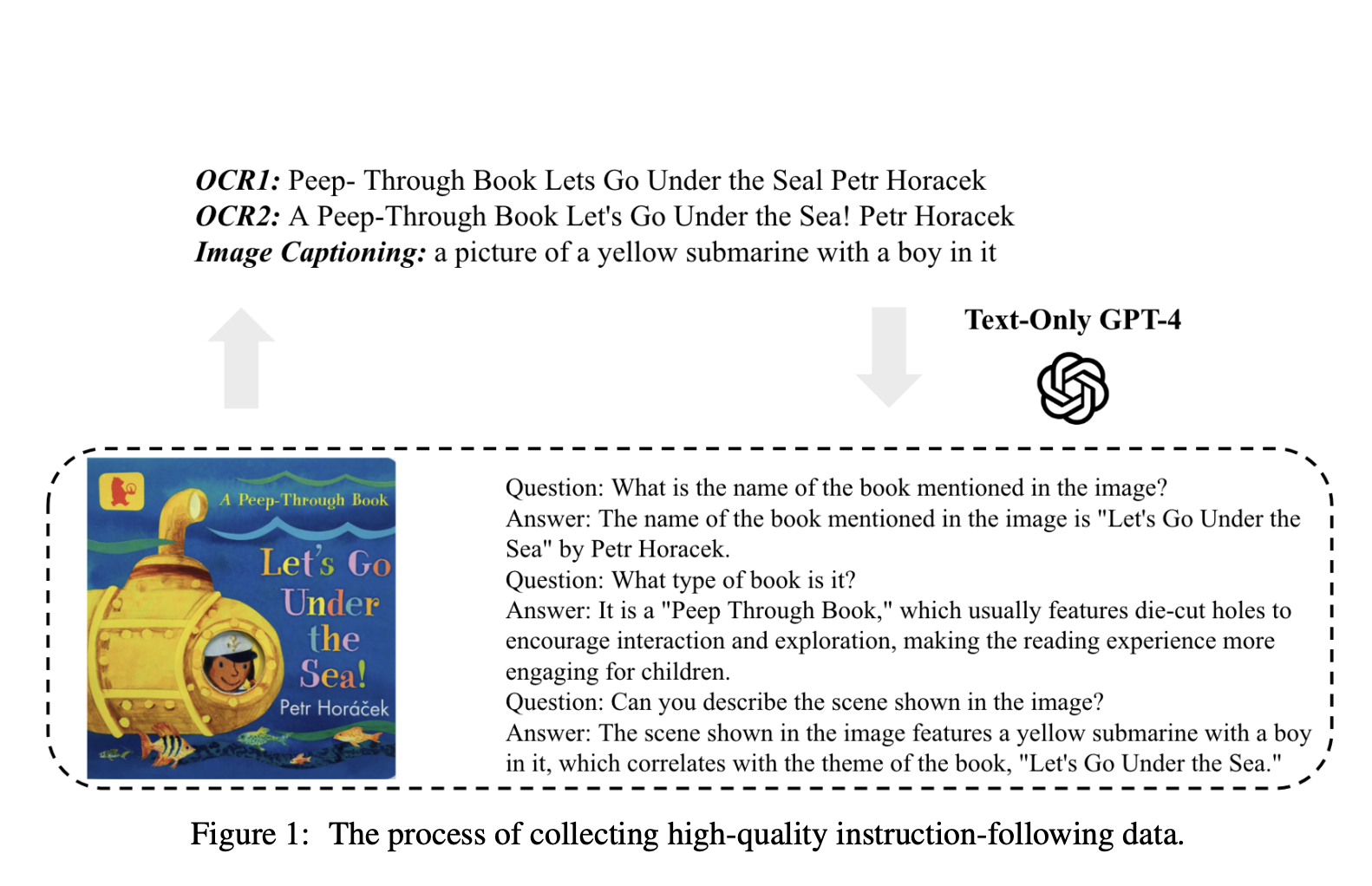

These massive noisy-aligned data significantly enhance the feature alignment between the language decoder and the visual features. Additionally, they ask text-only GPT-4 to produce 16K conversations using OCR results and image captions as high-quality examples of how to follow instructions. Each conversation may contain many turns of question-and-answer pairs. To produce sophisticated instructions depending on the input, this approach necessitates that GPT-4 denoise the OCR data and create unique questions (Figure 1). They supplement the pretraining and finetuning stages of LLaVA correspondingly using noisy and high-quality examples to assess the efficacy of the data that has been obtained.

Researchers from Georgia Tech, Adobe Research, and Stanford University develop LLaVAR, which stands for Large Language and Vision Assistant that Can Read. To better encode minute textual features, they experiment with scaling the input resolution from 2242 to 3362 compared to the original LLaVA. According to the assessment technique, empirically, they give the findings on four text-based VQA datasets together with the ScienceQA finetuning outcomes. Additionally, they use 50 text-rich pictures from LAION and 30 natural images from COCO in the GPT-4-based instruction-following assessment. Additionally, they offer qualitative analysis to measure more sophisticated instruction-following abilities (e.g., on posters, website screenshots, and tweets).

In conclusion, their contributions include the following:

• They gather 16K high-quality and 422K noisy instruction-following data. Both have been demonstrated to improve visual instruction tuning. The improved capacity allows their model, LLaVAR, to deliver end-to-end interactions based on diverse online material, including text and images, while only modestly enhancing the model’s performance on natural photos.

• The training and assessment data, as well as the model milestones, are made publicly available.

![]()

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence from the Indian Institute of Technology(IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing and is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.