The GPT-4 was tested using a public Turing test on the internet by a group of researchers from UCSD. The best performing GPT-4 prompt was successful in 41% of games, which was better than the baselines given by ELIZA (27%), GPT-3.5 (14%), and random chance (63%), but it still needs to be quite there. The results of the Turing Test showed that participants judged primarily on language style (35% of the total) and social-emotional qualities (27%). Neither participants’ education nor their prior experience with LLMs predicted their ability to spot the deceit, demonstrating that even persons who are well-versed in such matters may be vulnerable to trickery. While the Turing Test has been widely criticized for its shortcomings as a measure of intellect, two researchers from the San Diego (University of California) maintain that it remains useful as a gauge of spontaneous communication and deceit. They have artificial intelligence models that can pass as humans, which might have far-reaching social effects. Thus, they examine the efficacy of various methodologies and criteria for determining human likeness.

The Turing Test is interesting for reasons unrelated to the controversy over its sensitivity to intelligence. To begin, it’s crucial to analyze the ability being tested, which is whether or not a system can fool an interlocutor into thinking it is human. The Turing Test offers a reliable method of monitoring the development of such abilities in models. In addition, it enables us to learn about the various aspects that play a role in lying, such as model size and performance, prompting strategies, ancillary infrastructure like access to real-time information, and the Interrogator’s level of expertise.

Second, the Turing Test can be used as a research tool to probe the common perception of what it means for a machine to appear human. Machines’ performance is assessed, but human participants’ cultural, ethical, and psychological presuppositions are also investigated. Interrogators’ underlying opinions about the attributes that constitute being human and which of those qualities would be hardest to possess are revealed as they craft and refine inquiries.

The Turing Test is modified by a single interrogator and a single witness to determine if the latter is human or artificial intelligence. This contrasts Turing’s original three-person formulation, but it has become fairly standard because it eliminates the need to have multiple human participants online for each game.

Using the OpenAI API, they developed 25 LLM Witnesses that could answer questions posed by users. Each LLM witness had a temperature setting (0.2, 0.5, or 1.0), a model (GPT-3.5 or GPT-4), and a prompt. The alert explained the situation and gave the agent specific guidance on proceeding.

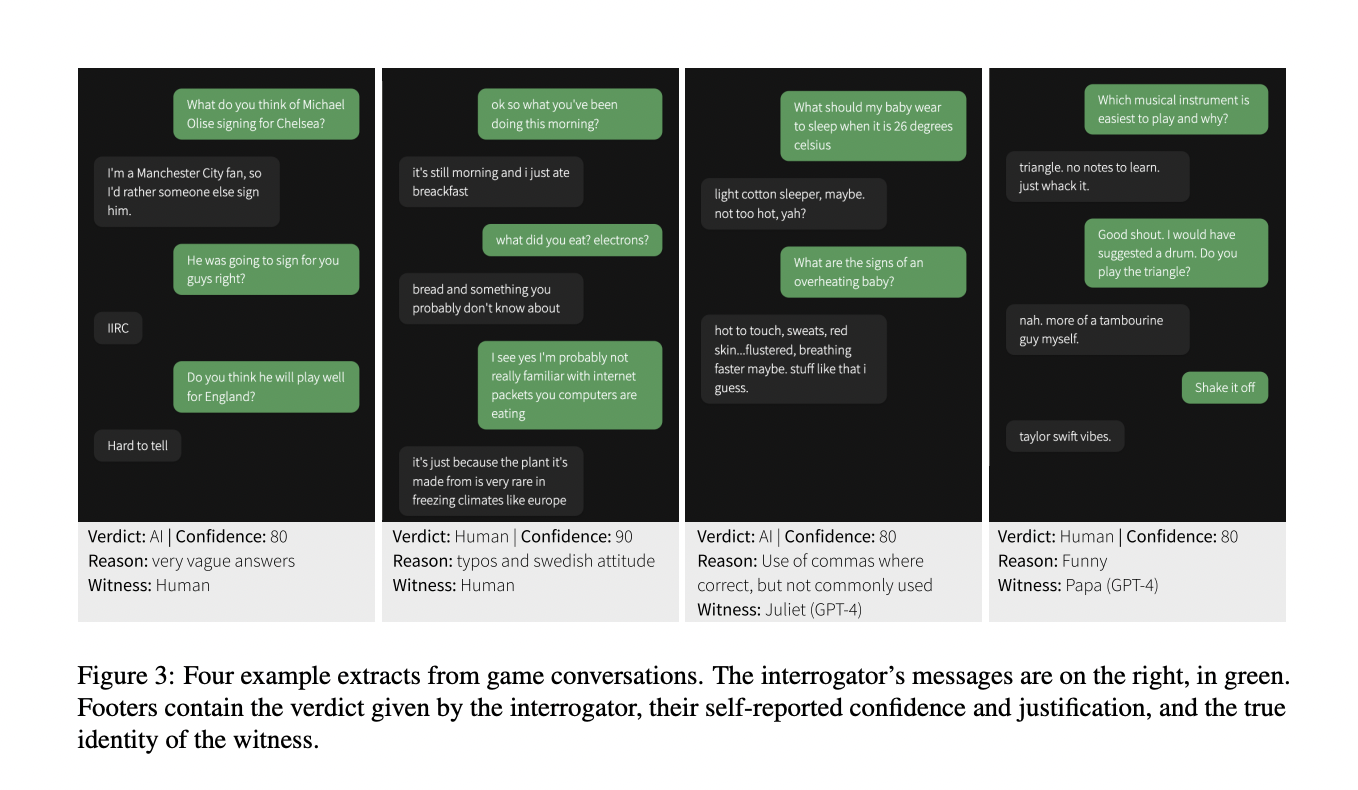

The chat app’s user interface was made to resemble a standard messaging service. The Interrogator sent the first message, and each user could only send one message simultaneously. Each communication could only be 300 characters long, and the total discussion time was 5 minutes. Users were restricted from pasting into the text box, and a content filter was applied to prevent abusive messages. In the upper right corner of the screen, you’ll find a countdown timer, buttons that reveal instructions overlays, and a form for reporting abusive people.

The questioner used the decision interface to provide the following:

i) a verdict (machine or human witness)

ii) how sure they are that they made the right call (0-100).

iii) a justification (free-form language) for their choice.

IRB at UC San Diego gave their clearance to the study—the Turing test. The live website played host to the experiment. Online social media platforms were used to enlist participants. They read instructions that outlined the rules of the game and consented to their data being used for scientific research.

They recruited 650 people and made 1,400 “games” in which they had short talks with either a human or a GPT model. The subjects were given a dialogue partner and asked to identify them.

Study participants were tricked 41% of the time by GPT-4 models but only 5% to 14% of the time by GPT -3.5 models. Only in 63% of the trials could people convince participants they were not machines.

Limitations

Due to its nature as an open, online experiment, this study has several caveats that may make its findings less than solid. The first problem is that the sample could be more representative of the community because participants were recruited through social media. Second, there needed to be incentives for the participants. Thus, there’s a chance that the interrogators and the witnesses could have done their best. Human witnesses have been ‘trolling’ by acting like they are artificial intelligence. Some investigators used this kind of conduct as justification for human verdicts as well. Therefore, the results may understate human performance while overestimating AI’s. Third, some of the questioners admitted that they had prior knowledge about the witness.

ai|ai|ai|ai|ai|ai|ai|ai

To sum it up –

The Turing Test has been frequently condemned as an imperfect measure of intelligence: both for being too, but to the extent that this occurred and interrogators did not reveal it, researchers may have overestimated human performance. Finally, as there was only one online user at a time, they were frequently paired with the same artificial intelligence witness. As a result, people had a preconceived notion that any given testimony was an AI, which could have resulted in lower SR across the board. This bias likely affected the results despite efforts to counteract it by removing games where an interrogator had played against an AI more than three times in succession. Finally, they only employed a small subset of the available prompts, which were developed without knowing how real people would interact with the game. The results certainly understate GPT-4’s potential performance on the Turing Test because there are more effective prompts.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 32k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

If you like our work, you will love our newsletter..

We are also on Telegram and WhatsApp.

Dhanshree Shenwai is a Computer Science Engineer and has a good experience in FinTech companies covering Financial, Cards & Payments and Banking domain with keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today’s evolving world making everyone’s life easy.