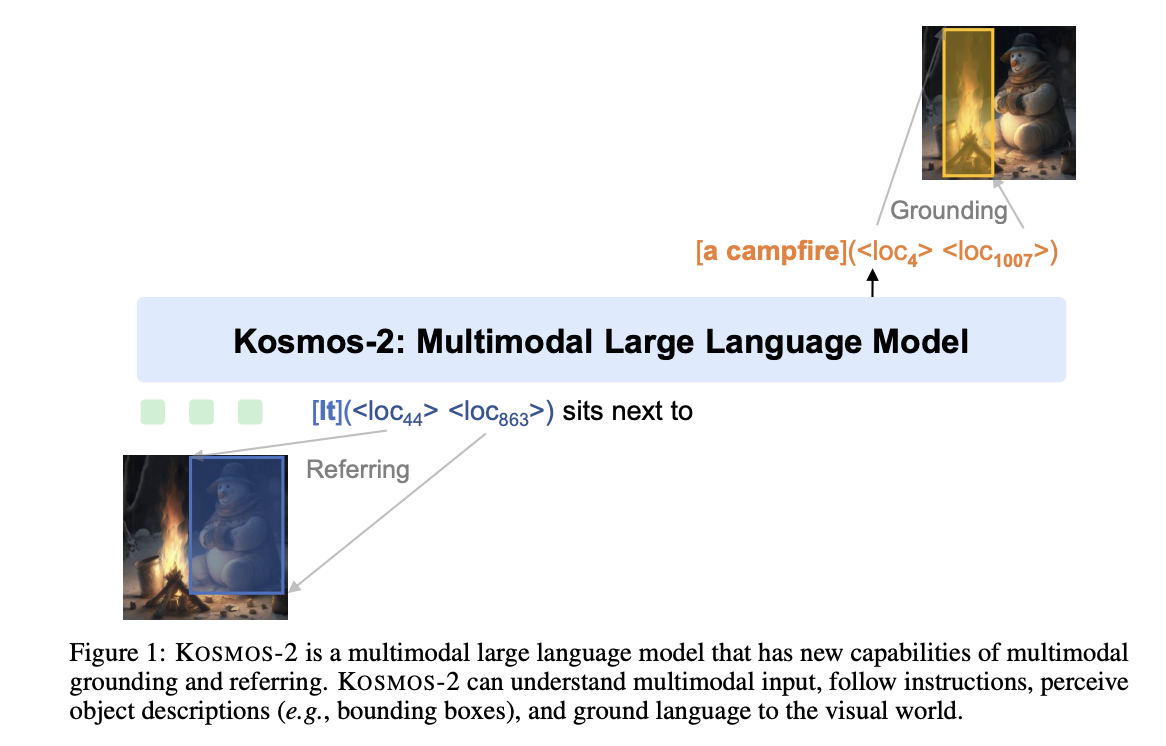

Microsoft Researchers Introduce KOSMOS-2: A Multimodal Large Language Model That Can Ground To The Visual World

Multimodal Large Language Models (MLLMs) have proven effective as a general-purpose interface across language, vision, and vision-language tasks. In zero-shot and few-shot settings, MLLMs can perceive general modalities such as text, images, and audio and generate responses as free-form text. In this study, the researchers enable multimodal large language models to ground themselves….