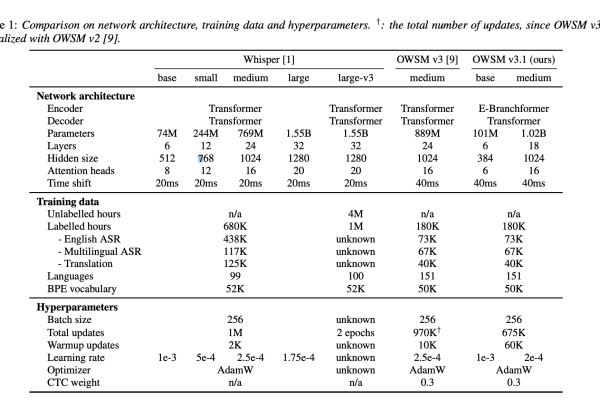

CMU Researchers Introduce OWSM v3.1: A Better and Faster Open Whisper-Style Speech Model Based on E-Branchformer

Speech recognition has become a cornerstone of many applications, enabling machines to understand and process human speech. Research in the field focuses on algorithms and models that recognize speech more accurately and efficiently across multiple languages and contexts. The main challenge in speech recognition is developing models that accurately transcribe speech from…