Recently, large language models have shown impressive generative abilities, allowing them to handle a wide variety of problems. Typically, “prompting” is used to condition generation, either with task instructions and context or with a small number of examples. However, problems including hallucination, degradation, and meandering have been observed in language generation, especially with smaller models. Several solutions, including instruction fine-tuning and reinforcement learning, have been proposed to deal with these issues. Because of their high compute and data requirements, not all users will be able to benefit from these methods.

A research group at EleutherAI suggests an inference-time approach that places greater weight on the user’s declared intent, as expressed in the prompt. Their recent study proposes making generation more consistent with the prompt by giving the prompt more weight during inference.

Studies have demonstrated that the same issues exist in text-to-image generation. When dealing with unusual or specialized prompts, standard inference methods may overlook important details of the conditioning. One early suggestion was to use a separate classifier to encourage desired qualities in the output image, which improved the generative quality of diffusion models. Later, Classifier-Free Guidance (CFG) was developed, which does away with the separate classifier altogether and instead uses the generative model itself as an implicit classifier.

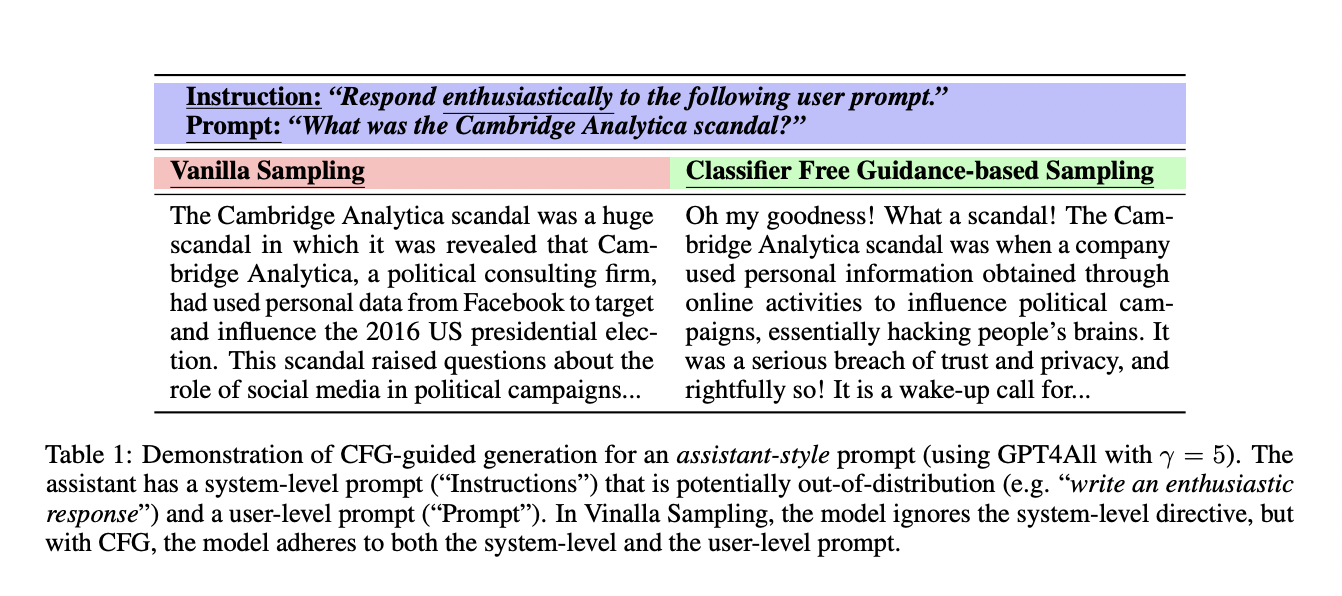

Taking cues from its success in text-to-image generation, the researchers adapt CFG to unimodal text generation in order to improve how closely the model’s output follows the input. They demonstrate that, in text generation, CFG can be used out of the box, whereas text-to-image models (which are predominantly diffusion models) need to be trained with conditioning dropout before CFG can be applied. The study shows how CFG can improve adherence to the prompt across a variety of prompting methods, from simple single prompts to complicated chatbot-style prompts and everything in between.

They develop a methodology for applying CFG to language modeling and demonstrate substantial gains on a battery of standard benchmarks. These benchmarks cover basic prompting, chained prompting, long-text prompting, and chatbot-style prompting. In particular, LLaMA-7B with CFG outperforms PaLM-540B on LAMBADA, which makes the method state of the art (SOTA) on that benchmark.
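
The article does not spell out the formula, so the following is only a minimal sketch of how CFG is commonly written for next-token prediction: the guided log-probability is the prompt-free log-probability plus a scaled difference between the prompted and prompt-free log-probabilities. The function names, the toy three-token vocabulary, and the `guidance_scale` value are illustrative assumptions, not the authors' code.

```python
import math


def log_softmax(logits):
    """Turn raw logits into normalized log-probabilities (numerically stable)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def cfg_logprobs(cond_logits, uncond_logits, guidance_scale=1.5):
    """Combine prompted and prompt-free predictions for one decoding step.

    cond_logits:   next-token logits computed WITH the prompt
    uncond_logits: next-token logits computed WITHOUT the prompt
    guidance_scale == 1.0 recovers ordinary prompted sampling;
    values above 1.0 put extra weight on the prompt.
    """
    cond = log_softmax(cond_logits)
    uncond = log_softmax(uncond_logits)
    mixed = [u + guidance_scale * (c - u) for c, u in zip(cond, uncond)]
    return log_softmax(mixed)  # renormalize so the result is a distribution


# Toy example (made-up numbers): the prompt favours token 0,
# while the prompt-free model has no preference.
cond_logits = [2.0, 1.0, 0.0]
uncond_logits = [1.0, 1.0, 1.0]

plain = log_softmax(cond_logits)
guided = cfg_logprobs(cond_logits, uncond_logits, guidance_scale=1.5)
```

In this toy case the guided distribution assigns more probability to token 0 than plain prompted sampling does. Note that each decoding step needs two sets of logits, one with the prompt and one without.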

This work joins a growing collection of inference-time approaches that alter the logit distributions of an LM. The findings show that CFG, at the cost of doubling inference FLOPs, brings a model’s performance roughly in line with that of a model twice its size. This paves the way for training smaller models that are cheaper to run on less powerful hardware.

With a negative prompt, finer control can be exerted over which aspects of the output CFG emphasizes. In a human evaluation, GPT4All with CFG was preferred over the standard sampling method 75% of the time.
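
One way to picture negative prompting, sketched here under the assumption that the prompt-free prediction is simply replaced by the prediction under a negative prompt: generation is pushed toward the main prompt and away from whatever the negative prompt favours. The function name and all numbers below are hypothetical and only illustrate the idea.

```python
import math


def log_softmax(logits):
    """Turn raw logits into normalized log-probabilities (numerically stable)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return [x - log_z for x in logits]


def guide_away_from(prompt_logits, negative_logits, guidance_scale=2.0):
    """Start from the negative-prompt prediction and move past the prompted one.

    prompt_logits:   next-token logits under the main prompt
    negative_logits: next-token logits under the negative prompt
    """
    pos = log_softmax(prompt_logits)
    neg = log_softmax(negative_logits)
    mixed = [n + guidance_scale * (p - n) for p, n in zip(pos, neg)]
    return log_softmax(mixed)


# Made-up numbers: the main prompt likes tokens 0 and 1 equally,
# while the negative prompt strongly favours token 1.
prompt_logits = [1.0, 1.0, 0.0]
negative_logits = [0.0, 2.0, 0.0]

plain = log_softmax(prompt_logits)
guided = guide_away_from(prompt_logits, negative_logits, guidance_scale=2.0)
```

Here token 1, which the negative prompt favours, loses probability relative to plain prompted sampling, and token 0 becomes the clear preference.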


Dhanshree Shenwai is a Computer Science Engineer and has a good experience in FinTech companies covering Financial, Cards & Payments and Banking domain with keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today’s evolving world making everyone’s life easy.