Meta AI, an artificial intelligence (AI) research organization, has recently unveiled an algorithm that lets robots learn from videos of people. In their research paper titled “Affordances from Human Videos as a Versatile Representation for Robotics,” the authors explore how human videos, such as the instructional videos found on YouTube, can serve as training data for robots learning to replicate human actions. By drawing on this large pool of online video, the algorithm aims to bridge the gap between static datasets and real-world robot applications, so that robots can perform complex tasks with greater versatility and adaptability.

Central to this approach is the concept of “affordances.” An affordance is a potential action or interaction that an object or environment offers; a drawer handle, for example, affords pulling. By training on human videos, the model learns to recognize these affordances, which gives robots a versatile representation of how to perform a range of complex tasks. This improves a robot’s ability to mimic human actions and lets it apply what it has learned in new and unfamiliar environments.

To integrate this affordance-based model into robot learning, the researchers at Meta AI incorporated it into four robot learning paradigms: offline imitation learning, exploration, goal-conditioned learning, and action parameterization for reinforcement learning. Combining affordance recognition with these learning methods helps robots acquire new skills and perform tasks with greater precision and efficiency.
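As a rough illustration of what action parameterization could look like, suppose an affordance is summarized as a contact location plus a direction of motion after contact. That summary, the `Affordance` class, and the `affordance_to_waypoints` helper are assumptions made for this sketch, not the paper's code. A policy could then pick among such affordances rather than raw motor commands, and each choice would expand into a simple two-waypoint motion.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class Affordance:
    """Hypothetical affordance summary: where to touch and which way to move."""
    contact: np.ndarray    # contact location in workspace coordinates
    direction: np.ndarray  # direction of motion after contact (any length)


def affordance_to_waypoints(aff: Affordance, distance: float = 0.1) -> np.ndarray:
    """Expand an affordance into [reach point, end point] for a motion planner."""
    norm = np.linalg.norm(aff.direction)
    if norm == 0:
        raise ValueError("direction must be non-zero")
    unit = aff.direction / norm  # only the direction matters, not its length
    return np.stack([aff.contact, aff.contact + distance * unit])


# Example: touch a handle, then move 10 cm straight up.
handle = Affordance(contact=np.array([0.5, 0.2, 0.1]),
                    direction=np.array([0.0, 0.0, 2.0]))
waypoints = affordance_to_waypoints(handle, distance=0.1)
```

Here the first waypoint is the contact location and the second lies `distance` along the normalized direction. A real system would also need grasp orientation and collision handling, which this sketch leaves out.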

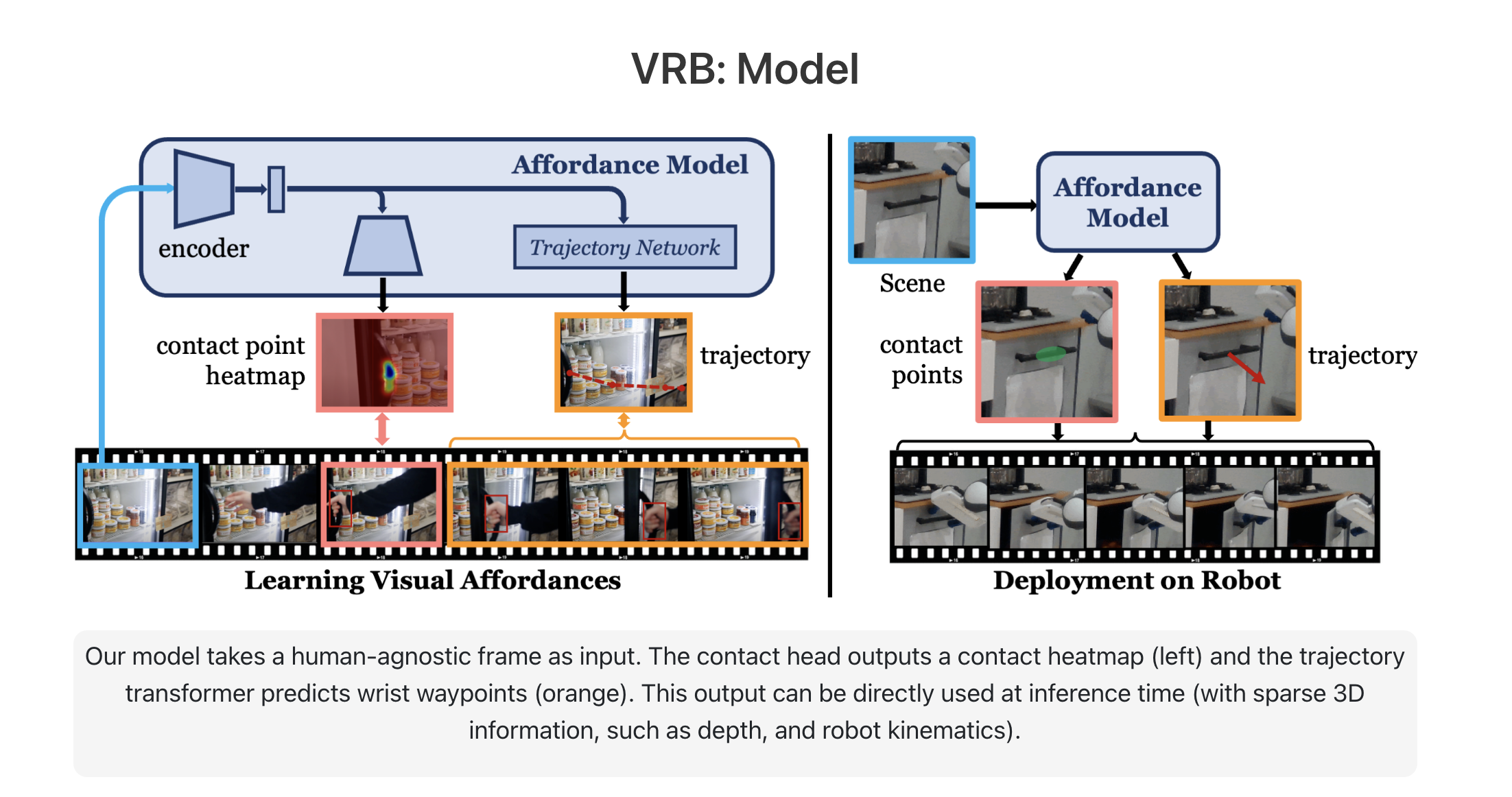

To train the affordance model, Meta AI uses large-scale human video datasets such as Ego4D and Epic Kitchens. The researchers run off-the-shelf hand-object interaction detectors on these videos to identify contact regions and to track the trajectory of the wrist after contact. A significant challenge is that the human visible in the scene causes a distribution shift, since no human is present when a robot views the scene at deployment. To overcome this, the researchers use the available camera information to project contact points and post-contact trajectories into a human-agnostic frame, which then serves as input to their model.
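The article does not spell out how the projection is computed. One common way to map pixel coordinates from one video frame to another is a 3x3 homography, and the sketch below assumes that approach purely for illustration. The function `project_points`, the matrix `H_contact_to_ref`, and all numeric values are hypothetical; in practice the matrix would be estimated from the camera motion between frames.

```python
import numpy as np


def project_points(H, points):
    """Map (N, 2) pixel coordinates through a 3x3 homography H."""
    pts = np.asarray(points, dtype=float)
    # Lift to homogeneous coordinates: (x, y) -> (x, y, 1).
    homog = np.hstack([pts, np.ones((pts.shape[0], 1))])
    mapped = homog @ np.asarray(H, dtype=float).T
    # Divide by the last coordinate to return to pixel coordinates.
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical homography from the contact frame to a reference frame
# without the human: here a pure shift of (-40, +10) pixels.
H_contact_to_ref = np.array([[1.0, 0.0, -40.0],
                             [0.0, 1.0, 10.0],
                             [0.0, 0.0, 1.0]])

# Made-up detector outputs in the contact frame.
contact_points = np.array([[320.0, 240.0]])
wrist_trajectory = np.array([[320.0, 240.0],
                             [330.0, 230.0],
                             [345.0, 215.0]])

ref_contact = project_points(H_contact_to_ref, contact_points)
ref_trajectory = project_points(H_contact_to_ref, wrist_trajectory)
```

With both the contact point and the wrist trajectory expressed in the reference frame, the training labels line up with an image that does not contain the person.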

Previously, robots that learned by imitation were largely confined to replicating actions in the specific environments they were trained in. Meta AI’s algorithm makes significant progress in generalizing robot actions: robots can apply what they have learned in new and unfamiliar environments, demonstrating greater adaptability.

Meta AI is committed to advancing computer vision research and fostering collaboration among researchers and developers. In line with this commitment, the organization plans to share the code and dataset from the project. Making these resources available should encourage further exploration and development of the technology, including self-learning robots that acquire new skills and knowledge from YouTube videos.


Niharika is a technical consulting intern at Marktechpost. She is a third-year undergraduate pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She has a keen interest in machine learning, data science, and AI, and is an avid reader of the latest developments in these fields.