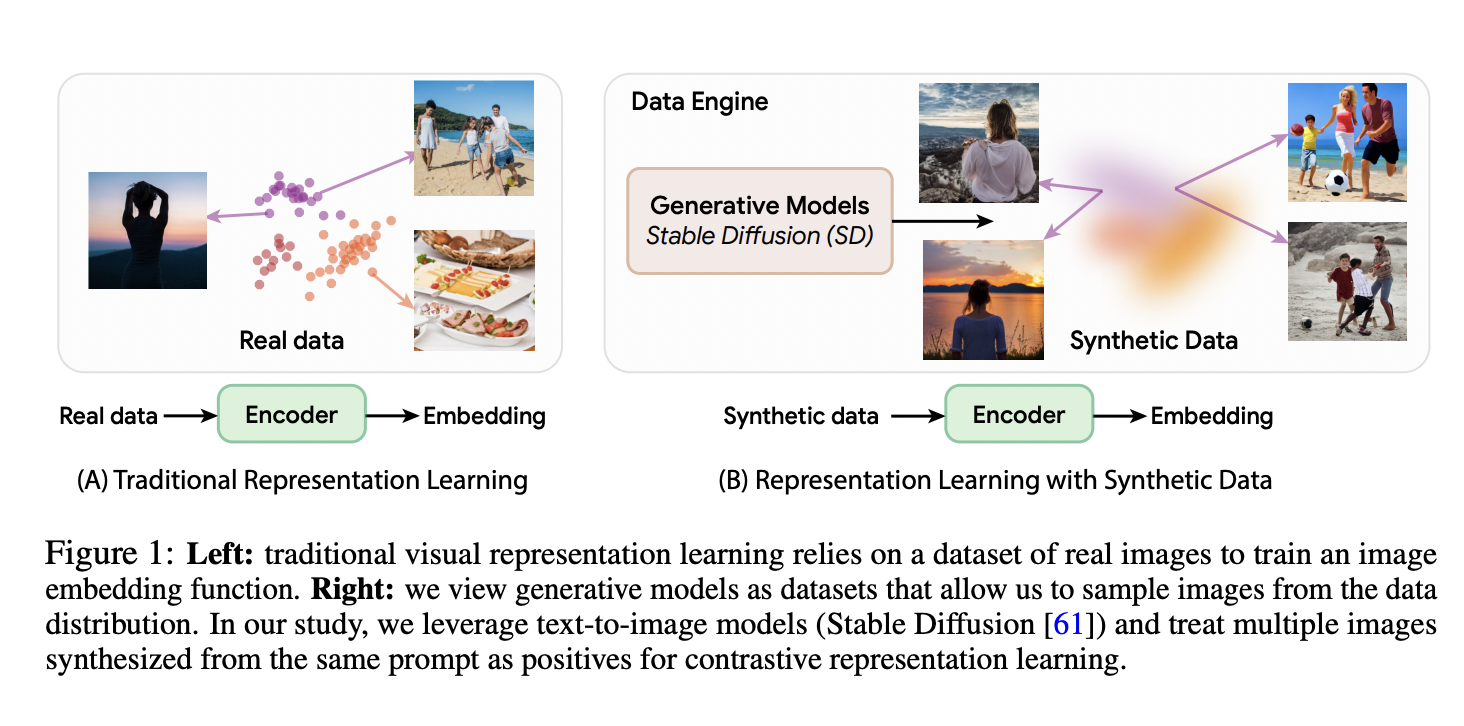

Researchers have explored the potential of using synthetic images generated by text-to-image models to learn visual representations and pave the way for more efficient and bias-reduced machine learning. This new study from MIT researchers focuses on Stable Diffusion and demonstrates that training self-supervised methods on synthetic images can match or even surpass the performance of their real image counterparts when the generative model is properly configured. The proposed approach, named StableRep, introduces a multi-positive contrastive learning method by treating multiple images generated from the same text prompt as positives for each other. StableRep is trained solely on synthetic images and outperforms state-of-the-art methods such as SimCLR and CLIP on large-scale datasets, even achieving better accuracy than CLIP trained with 50 million real images when coupled with language supervision.

The proposed StableRep approach introduces a novel method for representation learning by promoting intra-caption invariance. By considering multiple images generated from the same text prompt as positives for each other, StableRep employs a multi-positive contrastive loss. The results show that StableRep achieves remarkable linear accuracy on ImageNet, surpassing other self-supervised methods like SimCLR and CLIP. The approach’s success is attributed to the ability to exert greater control over sampling in synthetic data, leveraging factors such as the guidance scale in Stable Diffusion and text prompts. Additionally, generative models have the potential to generalize beyond their training data, providing a richer synthetic training set compared to real data alone.

In conclusion, the research demonstrates the surprising effectiveness of training self-supervised methods on synthetic images generated by Stable Diffusion. The StableRep approach, with its multi-positive contrastive learning method, showcases superior performance in representation learning compared to state-of-the-art methods using real images. The study opens up possibilities for simplifying data collection through text-to-image generative models, presenting a cost-effective alternative to acquiring large and diverse datasets. However, challenges such as semantic mismatch and biases in synthetic data must be addressed, and the potential impact of using uncurated web data for training generative models should be considered.

REA|REA|REA|REA|REA|REA|REA|REA

Check out the Paper and Github. All credit for this research goes to the researchers of this project. Also, don’t forget to join our 33k+ ML SubReddit, 41k+ Facebook Community, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

Pragati Jhunjhunwala is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology(IIT), Kharagpur. She is a tech enthusiast and has a keen interest in the scope of software and data science applications. She is always reading about the developments in different field of AI and ML.