Neural Radiance Fields (NeRF) is a neural network-based technique for capturing 3D scenes and objects from a set of 2D images with known camera poses. A NeRF represents the scene as a neural network, typically a multilayer perceptron, that takes a 3D position and a viewing direction as input and predicts the volume density and color at that point. To render a pixel, points are sampled along the camera ray passing through it, and their predicted densities and colors are composited into a final pixel color by volume rendering.
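
As a rough illustration of how per-point predictions along a camera ray become a single pixel color, here is a minimal sketch of the standard alpha-compositing step used in volume rendering. The function name `composite_ray` and the hard-coded sample values are assumptions made for this example; in a real NeRF, the densities and colors would come from the trained network.

```python
import math


def composite_ray(densities, colors, deltas):
    """Alpha-composite per-sample densities and RGB colors along one ray.

    densities: non-negative volume densities, one per sample
    colors:    (r, g, b) tuples, one per sample
    deltas:    distances between adjacent samples along the ray
    Returns the pixel color and the transmittance left after the last sample.
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # contribution of this sample
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return tuple(pixel), transmittance


# Illustrative values only: empty space followed by a nearly opaque red sample.
pixel, remaining = composite_ray(
    densities=[0.0, 50.0],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[1.0, 1.0],
)
print(pixel, remaining)
```

Samples with zero density contribute nothing, so the green sample in empty space is ignored and the pixel takes its color almost entirely from the dense red sample behind it.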

To create a NeRF-based human representation, you typically start by capturing images or videos of a human subject from multiple viewpoints. These images can be obtained from cameras, depth sensors, or other 3D scanning devices. NeRF-based human representations have several potential applications, including virtual avatars for games and virtual reality, 3D modeling for animation and film production, and medical imaging for creating 3D models of patients for diagnosis and treatment planning. However, building such representations can be computationally intensive and can require substantial training data.

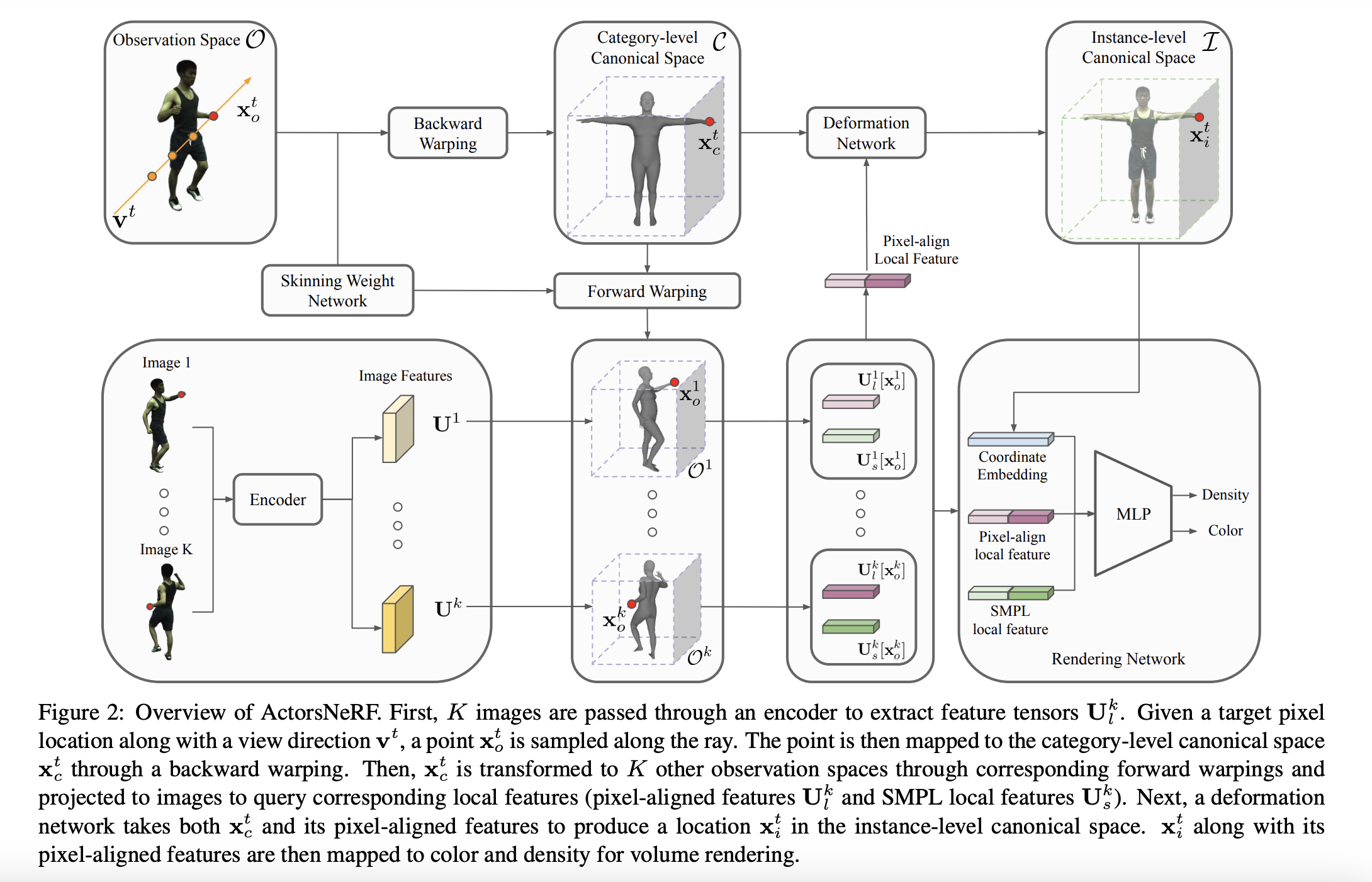

Building a NeRF-based human representation typically requires synchronized multi-view videos and an instance-level NeRF network trained on a specific human video sequence. To reduce this requirement, researchers propose a new representation called ActorsNeRF, a category-level human actor NeRF model that generalizes to unseen actors in a few-shot setting. With only a few images, e.g., 30 frames, sampled from a monocular video, ActorsNeRF synthesizes high-quality novel views of novel actors in the AIST++ dataset with unseen poses.

The researchers use a 2-level canonical space. For a given body pose and rendering viewpoint, a sampled point in 3D space is first transformed into a canonical space by linear blend skinning. The skinning weights are generated by a skinning weight network that is shared across subjects. Skinning weights control how strongly each bone influences a given point, and therefore how a 3D character deforms when it is animated, which makes the skinning weight network crucial for realistic movements and deformations.
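
The linear blend skinning step can be sketched in a few lines. This is a minimal illustration, not the ActorsNeRF implementation: the function name `blend_skinning` is hypothetical, the weights are hard-coded rather than predicted by a network, and each bone's rotation and translation are assumed to already map posed-space points into the canonical space.

```python
def blend_skinning(point, weights, rotations, translations):
    """Warp a 3D point by a weighted blend of per-bone rigid transforms.

    point:        (x, y, z) in the posed (observation) space
    weights:      one skinning weight per bone, expected to sum to 1
    rotations:    one 3x3 rotation matrix (nested lists) per bone
    translations: one (tx, ty, tz) offset per bone
    """
    if abs(sum(weights) - 1.0) > 1e-6:
        raise ValueError("skinning weights should sum to 1")
    warped = [0.0, 0.0, 0.0]
    for w, R, t in zip(weights, rotations, translations):
        for i in range(3):
            # Apply this bone's rigid transform, then accumulate it
            # in proportion to the bone's skinning weight.
            moved = sum(R[i][j] * point[j] for j in range(3)) + t[i]
            warped[i] += w * moved
    return tuple(warped)


# Illustrative two-bone setup: one bone rotates 90 degrees about the z-axis,
# the other leaves the point where it is.
ROT_Z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
NO_SHIFT = (0.0, 0.0, 0.0)

canonical_point = blend_skinning(
    point=(1.0, 0.0, 0.0),
    weights=[0.5, 0.5],
    rotations=[ROT_Z_90, IDENTITY],
    translations=[NO_SHIFT, NO_SHIFT],
)
print(canonical_point)
```

A point influenced equally by both bones lands halfway between the two transformed positions, which is why the quality of the predicted weights matters for plausible deformations.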

To achieve generalization across different individuals, the researchers trained the category-level NeRF model on a diverse set of subjects. At inference time, they fine-tuned the pretrained category-level model using only a few images of the target actor, which enables the model to adapt to that actor's specific characteristics.

The researchers find that ActorsNeRF significantly outperforms the HumanNeRF approach and, in contrast to HumanNeRF, maintains a valid shape for less-observed body parts. ActorsNeRF can leverage the category-level prior to smoothly synthesize unobserved portions of the body. When tested on benchmarks such as the ZJU-MoCap and AIST++ datasets, it outperforms prior approaches on novel human actors with unseen poses across multiple few-shot settings.

Check out the Paper and Project Page. All credit for this research goes to the researchers on this project.

Arshad is an intern at MarktechPost. He is currently pursuing his Int. MSc in Physics at the Indian Institute of Technology Kharagpur. He believes that understanding things at a fundamental level leads to new discoveries, which in turn lead to advances in technology. He is passionate about understanding nature at a fundamental level with the help of tools like mathematical models, ML models, and AI.