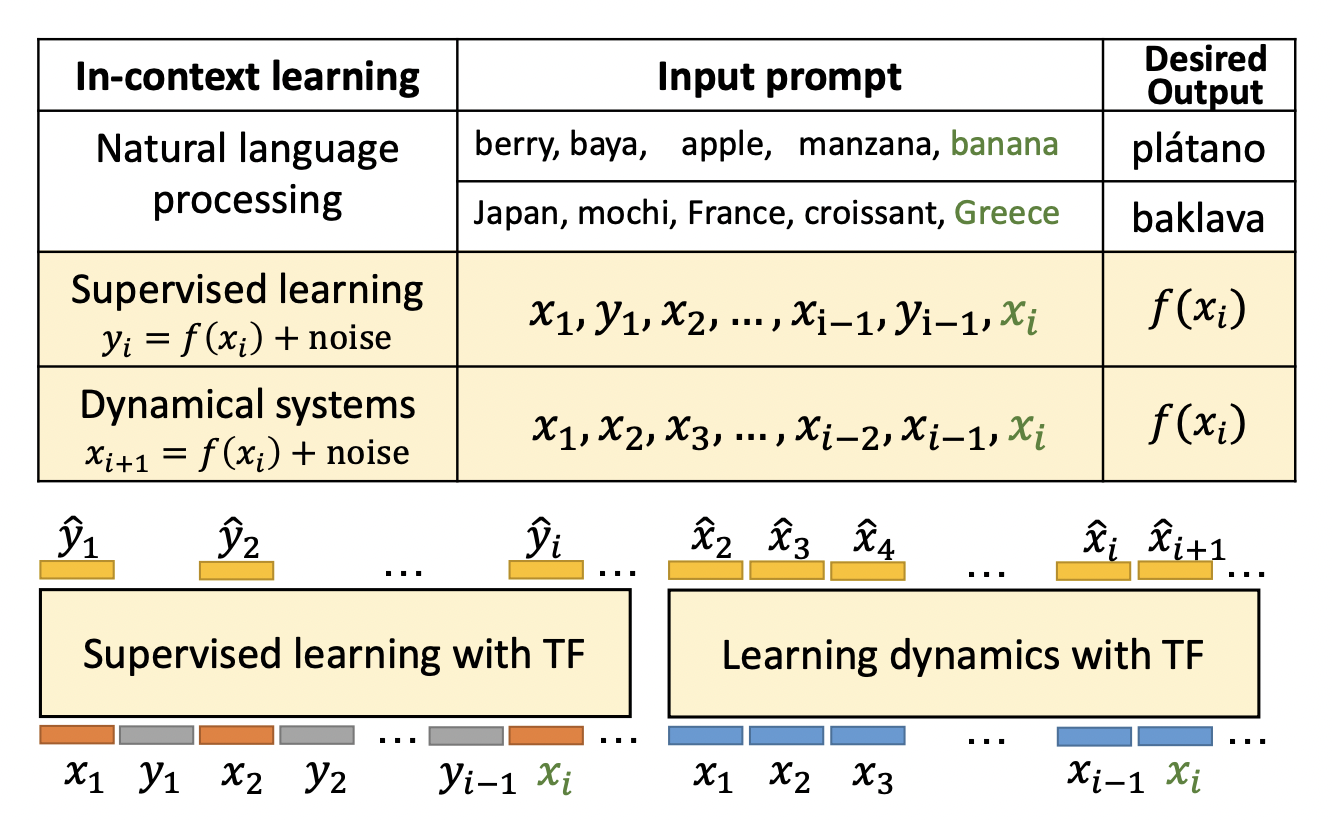

A New Artificial Intelligence (AI) Research Approach Presents Prompt-Based In-Context Learning As An Algorithm Learning Problem From A Statistical Perspective

In-context learning is a recent paradigm in which a large language model (LLM) receives a few training examples and a test instance as its input and directly decodes the output without any update to its parameters. This implicit training contrasts with conventional training, where the weights are updated based on the examples. Here comes the…
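To make the setup concrete, here is a minimal sketch of how such an input might be assembled: a few labeled examples followed by the unanswered test instance, all in one prompt string. The function name `build_icl_prompt`, the `Input:`/`Output:` template, and the sentiment examples are illustrative assumptions, not something taken from the research described here. No model is called, and no weights are touched; the LLM would simply be asked to continue the text.

```python
from typing import List, Tuple


def build_icl_prompt(examples: List[Tuple[str, str]], test_input: str) -> str:
    """Concatenate labeled demonstrations and an unanswered test instance.

    The "Input:/Output:" template is a hypothetical format chosen for
    illustration; real prompts vary by task and model.
    """
    # Each training example appears in the prompt with its label.
    blocks = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    # The test instance is left open so the model decodes the output.
    blocks.append(f"Input: {test_input}\nOutput:")
    return "\n\n".join(blocks)


# Hypothetical sentiment-classification demonstrations.
demos = [("great movie", "positive"), ("dull plot", "negative")]
prompt = build_icl_prompt(demos, "loved the soundtrack")
print(prompt)
```

The only "training signal" here is the text of the prompt itself, which is what distinguishes this setup from weight-updating training.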