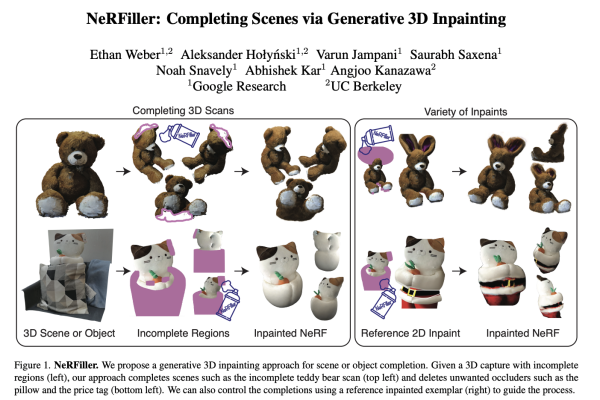

This AI Paper from Google and UC Berkeley Introduces NeRFiller: An Artificial Intelligence Approach that Revolutionizes 3D Scene Reconstruction Using 2D Inpainting Diffusion Models

How can missing portions of a 3D capture be effectively completed? This research paper from Google Research and UC Berkeley introduces “NeRFiller,” a novel approach to 3D inpainting that completes 3D scenes or objects whose parts are missing because of reconstruction failures or a lack of observations. This approach allows precise and…